It's a promise that seems almost too good to be true: super-fast internet that's cheap, and free of the contracts and hassles that come with major service providers.

That's not a pipe dream for Brian Hall; it's his goal.

The lead volunteer behind the community group NYC Mesh aims to bring affordable internet with lightning-quick downloads to everyone in New York, one building at a time.

"Our typical speeds are 80 to 110 megabits a second," Hall says, pointing out that streaming something like Netflix only requires about 5 Mbps.

CBC News joined him one afternoon on a roof in the Brooklyn neighbourhood of Greenpoint. Hall was installing the latest addition to the mesh network that will deliver his vision.

Brian Hall, the lead volunteer behind the community group NYC Mesh, installs an antenna on a member's building. Due to the nature of a mesh network, adding buildings helps expand the group's overall wireless coverage area. (CBC)

The worksite is one of the group's latest customers, a converted warehouse that houses a video production company. The regular commercial internet providers were going to charge tens of thousands of dollars to get them online.

NYC Mesh took on the job for a small installation fee of a few hundred dollars and a monthly donation.

Mesh networks explained

So what is a mesh network?

Picture a spiderweb of wireless connections. The main signal originates from what's called the supernode. It's plugged directly into the internet at an internet exchange point, the same place internet service providers get their connection.

The signal from the supernode, sent out wirelessly via an antenna, covers an area of several kilometres.

From there, smaller antennas spread across rooftops and balconies form a mesh that receives that signal. They're connected to Wi-Fi access points that let people use the internet.


Each supernode can connect thousands of users.

The access points also talk to the others around them, so if one goes down for some reason, the rest keep working.
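To make the rerouting idea concrete, here is a minimal Python sketch, assuming a made-up five-building network: the node names and links are hypothetical, and real mesh routers run dedicated routing software rather than anything like this. It uses a breadth-first search to find the fewest-hop path from a rooftop back to the supernode, then repeats the search with one rooftop marked as down.

```python
from collections import deque

# Hypothetical toy topology (illustrative names only). "supernode" stands in
# for the antenna at the internet exchange point; each roof lists the nodes
# its antenna can reach directly.
LINKS = {
    "supernode": ["roof_a", "roof_b"],
    "roof_a": ["supernode", "roof_c"],
    "roof_b": ["supernode", "roof_c"],
    "roof_c": ["roof_a", "roof_b", "roof_d"],
    "roof_d": ["roof_c"],
}


def find_path(links, start, goal, down=frozenset()):
    """Breadth-first search for a fewest-hop path from start to goal,
    skipping any node listed in `down`. Returns None if goal is unreachable."""
    if start in down or goal in down:
        return None
    previous = {start: None}  # remembers how we reached each node
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through `previous` to rebuild the route.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in links.get(node, []):
            if neighbour not in previous and neighbour not in down:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


print(find_path(LINKS, "roof_d", "supernode"))
# ['roof_d', 'roof_c', 'roof_a', 'supernode']
print(find_path(LINKS, "roof_d", "supernode", down={"roof_a"}))
# ['roof_d', 'roof_c', 'roof_b', 'supernode']
print(find_path(LINKS, "roof_d", "supernode", down={"roof_c"}))
# None
```

Taking roof_a offline simply pushes traffic through roof_b. Taking roof_c offline cuts roof_d off, because in this toy layout it is roof_d's only neighbour; the more links each building has, the fewer such weak spots the mesh has.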

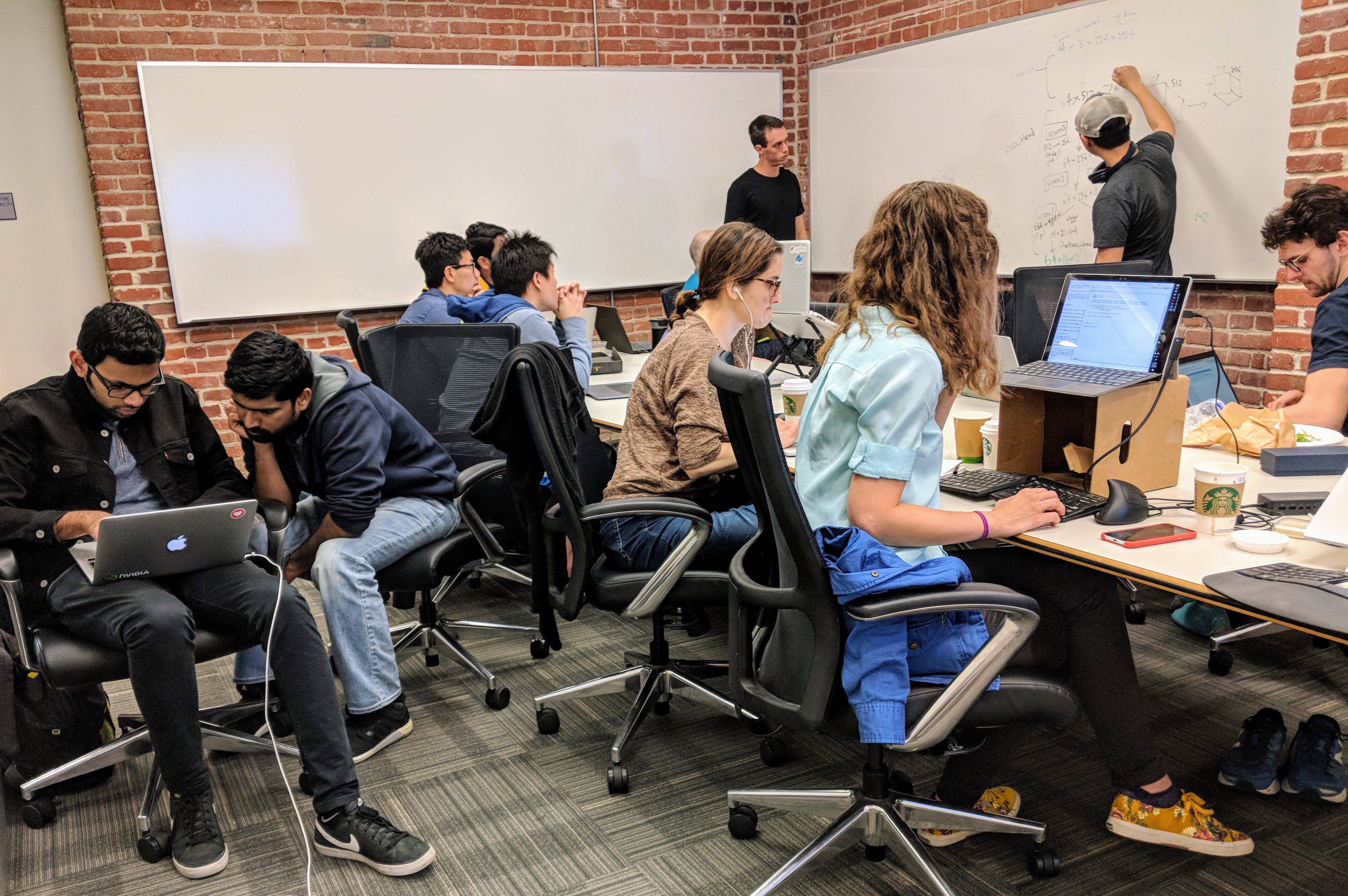

"Mesh networks are an alternative to standard ISP hookups. You're not provided with an internet connection through their cable, but through — in our case —Wi-Fi networks," says Jason Howard, a programmer and actor who's helping with the latest installation.

NYC Mesh bought an industrial-strength connection to the internet right at an Internet Exchange Point (IXP), in this case a futuristic-looking tower in downtown Manhattan. It's the same place that internet service providers (ISPs) like Verizon and Spectrum connect to the internet, accessing massive amounts of wired bandwidth.

NYC Mesh then installed an antenna on the roof of the IXP. That became the supernode, the heart of its mesh network.

From there it beams out and receives Wi-Fi signals, connecting to receivers on rooftops spread through the East Village and Chinatown, and across the river into parts of Brooklyn.

Myth of the ISP

Zach Giles is one of the brains behind the network and one of its busiest volunteers. When he's not working his day job in finance, he's maintaining the supernode. The rooftop has become his second office.

He's a mesh network evangelist who says most people don't realize they don't need to rely on traditional ISPs to get online.

"That's the myth of the ISP," Giles says in between installing another antenna.

"The internet doesn't really cost you anything; it's just the connection [that has a fee]. So however you can get plugged in, then you're on the internet. Nobody owns the internet, there's no one to pay."

Staring out over a city of millions of potential users, Giles says he wishes he could shout his message for everyone to hear: there are other, cheaper ways to connect to the internet than corporate ISPs.

One person who has heard the message is Jessica Marshall. A mechanical engineer, she's been watching NYC Mesh's growth for a while.

On the day CBC News joined Hall and Howard, Marshall tagged along as well, ready to take a more hands-on role. Like Giles and the other volunteers, she sees the work as a mission.

Marshall says she's driven by "the fact that I didn't have to rely on a gigantic company that's headquartered somewhere else, that's run by people who don't care about me or the internet necessarily, but profits."

She adds, "You can build your own internet [connection] and have control over it."

Net neutrality

Since 2013, NYC Mesh has installed 154 antennas around New York, offering service to thousands of people.

When U.S. regulators voted in December to repeal net neutrality rules, interest in NYC Mesh spiked dramatically. The group went from 500 installation requests in all of last year to 1,300 so far this year.

What is drawing some new users to NYC Mesh is the fear that, with net neutrality rules gone (the Federal Communications Commission in the U.S. took them off the books on Monday), ISPs can block or slow down access to various websites, or potentially charge for access to certain sites.

The new FCC rules do require ISPs to disclose any throttling, as well as when they prioritize the speed of some content over others. But for many users, the end of net neutrality goes against the spirit of the internet as something that should be open and accessible to all.

NYC Mesh promises it won't slow down internet speeds or limit access to sites, and will never store, track or monitor personal data.

The ability to get around the big internet providers gives a Robin Hood-esque feel to the volunteers at NYC Mesh, many of whom, like Howard, admit to a rebellious streak.

Howard says he doesn't see himself as a revolutionary — "maybe just anti-authority," he adds with a smile.

"The big companies would have you think that there's no option than them, especially in New York City," Howard says. "It's so refreshing to come across this ability to do something else as an alternative."

Still niche

But for all its growth, NYC Mesh is still very much in its infancy, says Motherboard science writer Kaleigh Rogers.

"It's still such a small sort of niche community."

She says mesh networks challenge the public's sense of how the internet operates.

"We are so used to the internet being this other thing, run by private businesses. But there's no reason why it has to be. You know, the core infrastructure that rigs up the whole planet with internet, anyone can connect to it," she says, echoing Giles's point.

Rogers does point out, however, that one of the barriers to entry for mesh customers can be the technical requirements.

Unlike signing up with a commercial ISP, which just involves a phone call to a major provider, a mesh network requires customers to invest a bit more time and effort.

Jason Howard, left, Jessica Marshall and Brian Hall install Wi-Fi equipment on the rooftop of a new member's building. They offer to do the work for new customers at a fraction of the price charged by commercial ISPs. (CBC)

"You have to understand a little bit about the technical aspects of it," Rogers says.

"So I think people are a little intimidated. And it's just not as widely known — we don't have any really good 'use' cases here in North America that show how active and how nice [mesh] can be if you actually have enough users."

While there are mesh networks dotting the U.S., she says the best working example of what mesh technology can do is in Spain. Guifi.net has more than 34,000 nodes covering an area of roughly 50,000 square kilometres across the Catalonia region.

Inside the mesh

Back in New York, most of NYC Mesh's users are clustered around the first supernode in downtown Manhattan, in Chinatown and the Lower East Side. The surge in interest has allowed the group to build a second supernode in Brooklyn, expanding coverage there.

Linda Justice has been using the network for about a year and a half. She read about the project in a local newspaper and was instantly drawn to the idea of a community-driven network.

"I love the idea of communities coming together and supporting each other. I think that's very good, because if it wasn't for them I wouldn't even have Wi-Fi, I'd have to go down to the park and sit out there," she says.

NYC Mesh holds public information nights to tell people how the technology works, and how the group plans to expand service in New York. (Steven D'Souza/CBC)

She adds that the difference in cost is remarkable. She gives NYC Mesh a donation of $20 a month, when she can. Justice was paying close to $100 a month with her old provider.

Justice admits she's not the most tech-savvy person, and doesn't always understand what Brian Hall and the other volunteers are saying. What she does know is that her speeds are a bit slower at times because her signal is being bounced through various nodes to get to her, but that's an acceptable tradeoff.

"It's worth it to take the time and learn about it," Justice says.

Bridging the digital divide

Affordability is one feature of mesh networks; another is resiliency.

Since the routers are interconnected, if one node goes down, the others can pick up the slack. So even if the main connection to the internet is lost during a power outage, the mesh network can maintain connectivity among its access points for basic functions like text messaging.
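A similar toy model, again with hypothetical node names, shows why losing the internet link does not necessarily isolate neighbours from one another. This minimal sketch treats "uplink" as the wired internet connection and collects every node still reachable from a given rooftop once some nodes are marked as down; it is an illustration of the idea, not how any real mesh software works.

```python
# Hypothetical neighbourhood mesh (illustrative names only).
# "uplink" stands in for the wired connection to the internet.
LINKS = {
    "uplink": {"hub"},
    "hub": {"uplink", "node_1", "node_2"},
    "node_1": {"hub", "node_2", "node_3"},
    "node_2": {"hub", "node_1"},
    "node_3": {"node_1"},
}


def reachable(links, start, down=frozenset()):
    """Return every node reachable from `start` without passing
    through any node listed in `down` (depth-first traversal)."""
    if start in down:
        return set()
    seen = {start}
    stack = [start]
    while stack:
        node = stack.pop()
        for neighbour in links.get(node, ()):
            if neighbour not in seen and neighbour not in down:
                seen.add(neighbour)
                stack.append(neighbour)
    return seen


# Internet link lost: local nodes can still reach one another.
print(sorted(reachable(LINKS, "node_3", down={"uplink"})))
# ['hub', 'node_1', 'node_2', 'node_3']

# The hub fails too: node_3 still reaches node_1 and node_2.
print(sorted(reachable(LINKS, "node_3", down={"uplink", "hub"})))
# ['node_1', 'node_2', 'node_3']
```

The sketch only checks whether nodes can reach each other; it says nothing about how much traffic the remaining links can carry, which is why the text above limits the claim to basic functions like messaging.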

During Superstorm Sandy in 2012, a mesh network in Red Hook in Brooklyn managed to stay up, even when power and other utilities shut down. With limited service and a small number of connections, it allowed neighbours, and even FEMA, to stay connected during the storm.

The U.S. government is now funding mesh networks in various neighbourhoods to prepare for the next storm.

In Harlem, Clayton Banks jumped at the chance to provide his area with one. As head of community tech group Silicon Harlem, he sees the potential reaching far beyond the initial rollout to local businesses.

"We're going to help your kids learn a little bit more about technology. We're going to hire people in this community. We want to be able to give more digital literacy in here," Banks says, noting that close 40 per cent of residents in East Harlem don't have access to broadband internet.

Bridging the digital divide by providing low-cost, high-speed internet is the goal for his mesh network. He says he's tired of seeing kids in his neighbourhood forced to go to coffee shops and use Wi-Fi there to do homework.

"I had a 15-year-old young person come to me and say 'I don't have a computer at home and we don't have broadband. I'm falling behind because those who have those things are no smarter, but they just have the tools to get it done.' So that's why this is so vital."

What's next for mesh

NYC Mesh currently has two supernodes and estimates that with about a dozen more it could blanket the entire city with wireless internet.

Growth is ramping up and more users means more funding, but it's still a volunteer-driven organization — something that may have to change as it scales up.

There's also debate in the community about whether to start charging more for the service as more users join the network.

The group also knows there will be growing pains as they challenge the status quo and that it's only a matter of time before the big ISPs take notice, which could bring new challenges.

But Giles says his group represents a return to the original idea of what the internet was supposed to be: free and accessible to all.

"I would think it's actually actually how it used to be - we are going back to simpler time. It looks complicated, lots of wires, but it's simple."

- Watch Steven D'Souza's feature on NYC Mesh on Wednesday night's The National on CBC television and streamed online
