Facebook Patent: Socioeconomic group classification based on user features [pdf]

Minecraft players exposed to malicious code in modified “skins”

Close to 50,000 Minecraft accounts infected with malware designed to reformat hard-drives and more.

Nearly 50,000 Minecraft accounts have been infected with malware designed to reformat hard-drives and delete backup data and system programs, according to Avast data from the last 30 days. The malicious Powershell script identified by researchers from Avast’s Threat Labs uses Minecraft “skins” created in PNG file format as the distribution vehicle. Skins are a popular feature that modify the look of a Minecraft player’s Avatar. They can be uploaded to the Minecraft site from various online resources.

The malicious code is largely unimpressive and can be found on sites that provide step-by-step instructions on how to create viruses with Notepad. While it is fair to assume that those responsible are not professional cybercriminals, the bigger concern is why the infected skins could be legitimately uploaded to the Minecraft website. With the malware hosted on the official Minecraft domain, any detection triggered could be misinterpreted by users as a false positive.

Hosted URL of malicious skin

Hosted URL of malicious skin

We have contacted Mojang, the creator of Minecraft, and they are working on fixing the vulnerability.

Why Minecraft?

As of January 2018, Minecraft had 74 million players around the globe - an increase of almost 20 million year-on-year. However, only a small percentage of the total user base actively uploads modified skins. Most players use the default versions provided by Minecraft. This explains the low registration of infections. Over the course of 10 days, we’ve blocked 14,500 infection attempts. Despite the low number, the scope for escalation is high given the number of active players globally.

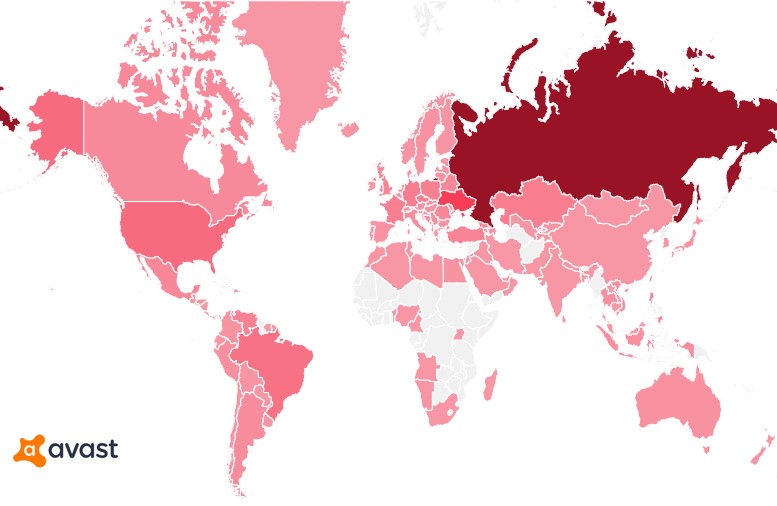

Detection heatmap

Although Minecraft is played by individuals across a broad demographic spectrum, the largest demographic is 15-21 year olds, which accounts for 43% of the user base. The bad actors may have looked to capitalize on a more vulnerable group of unsuspecting users that play a game trusted by parents and guardians. Pentesting is another possibility, but it’s more likely that the vulnerability was exposed for amusement - a more common mindset adopted by script kiddies.

How identifiable is the threat?

Users can identify the threat in a number of ways. The malware is included in skins available on the Minecraft website. Three examples that contain the malware can be seen below.

Not all skins are malicious, but if you’ve downloaded one similar to those featured below, we would recommend you run an antivirus scan.

Users may also receive unusual messages in their account inbox. Some examples identified are:

“You Are Nailed, Buy A New Computer This Is A Piece Of Sh*t”

“You have maxed your internet usage for a lifetime”

“Your a** got glued”

Other evidence of infection includes system performance issues caused by a simple tourstart.exe loop or an error message related to disk formatting.

How can users protect themselves?

Scanning your machine with a strong antivirus (AV) such as Avast Free Antivirus will detect the malicious files and remove them. However, in some cases, the Minecraft application may require reinstallation. In more extreme circumstances where user machines have already been infected with the malware and systems files have been deleted, data restoration is recommended.

YouTube promises expansion of sponsorships, monetization tools for creators

YouTube says it’s rolling out more tools to help its creators make money from their videos. The changes are meant to address creators’ complaints over YouTube’s new monetization policies announced earlier this year. Those policies were designed to make the site more advertiser-friendly following a series of controversies over video content from top creators, including videos from Logan Paul, who had filmed a suicide victim, and PewDiePie, who repeatedly used racial slurs, for example.

The company then decided to set a higher bar to join its YouTube Partner Program, which is what allows video publishers to make money through advertising. Previously, creators only needed 10,000 total views to join; they now need at least 1,000 subscribers and 4,000 hours of view time over the past year to join. This resulted in wide-scale demonetization of videos that previously relied on ads.

The company has also increased policing of video content in recent months, but its systems haven’t always been accurate.

YouTube said in February it was working on better systems for reviewing video content when a video is demonetized over its content. One such change, enacted at the time, involved the use of machine learning technology to address misclassifications of videos related to this policy. This, in turn, has reduced the number of appeals from creators who want a human review of their video content instead.

According to YouTube CEO Susan Wojcicki, the volume of appeals is down by 50 percent as a result.

Wojcicki also announced another new program related to video monetization which is launching into pilot testing with a small number of creators starting this month.

This system will allow creators to disclose, specifically, what sort of content is in their video during the upload process, as it relates to YouTube’s advertiser-friendly guidelines.

“In an ideal world, we’ll eventually get to a state where creators across the platform are able to accurately represent what’s in their videos so that their insights, combined with those of our algorithmic classifiers and human reviewers, will make the monetization process much smoother with fewer false positive demonetizations,” said Wojcicki.

Essentially, this system would rely on self-disclosure regarding content, which would then be factored in as another signal for YouTube’s monetization algorithms to consider. This was something YouTube had also said in February was in the works.

Because not all videos will be brand-safe or meet the requirements to become a YouTube Partner, YouTube now says it will also roll out alternative means of making money from videos.

This includes an expansion of “sponsorships,” which have been in testing since last fall with a select group of creators.

Similar to Twitch subscriptions, sponsorships were introduced to the YouTube Gaming community as a way to support favorites creators through monthly subscriptions (at $4.99/mo), while also receiving various perks like custom emoji and a custom badge for live chat.

Now YouTube says “many more creators” will gain access to sponsorships in the months ahead, but it’s not yet saying how those creators will be selected, or if they’ll have to meet certain requirements, as well. It’s also unclear if YouTube will roll these out more broadly to its community, outside of gaming.

Wojcicki gave updates on various other changes YouTube has enacted in recent months. For example, she said that YouTube’s new moderation tools have led to a 75-plus percent decline in comment flags on channels, where enabled, and these will now be expanded to 10 languages. YouTube’s newer social network-inspired Community feature has also been expanded to more channels, she noted.

The company also patted itself on the back for its improved communication with the wider creator community, saying that this year it has increased replies by 600 percent and improved its reply rate by 75 percent to tweets addressed to its official handles: @TeamYouTube, @YTCreators, and @YouTube.

While that may be true, it’s notable that YouTube isn’t publicly addressing the growing number of complaints from creators who – rightly or wrongly – believe their channel has been somehow “downgraded” by YouTube’s recommendation algorithms, resulting in declining views and loss of subscribers.

This is the issue that led the disturbed individual, Nasim Najafi Aghdam, to attack YouTube’s headquarters earlier this month. Police said that Aghdam, who shot at YouTube employees before killing herself, was “upset with the policies and practices of YouTube.”

It’s obvious, then, why YouTube is likely proceeding with extreme caution when it comes to communicating its policy changes, and isn’t directly addressing complaints similar to Aghdam’s from others in the community.

But the creator backlash is still making itself known. Just read the Twitter replies or comment thread on Wojcicki’s announcement. YouTube’s smaller creators feel they’ve been unfairly punished because of the misdeeds of a few high-profile stars. They’re angry that they don’t have visibility into why their videos are seeing reduced viewership – they only know that something changed.

YouTube glosses over this by touting the successes of its bigger channels.

“Over the last year, channels earning five figures annually grew more than 35 percent, while channels earning six figures annually grew more than 40 percent,” Wojcicki said, highlighting YouTube’s growth.

In fairness, however, YouTube is in a tough place. Its site became so successful over the years, that it became impossible for it to police all the uploads manually. At first, this was the cause for celebration and the chance to put Google’s advanced engineering and technology to work. But these days, as with other sites of similar scale, the challenging of policing bad actors among billions of users, is becoming a Herculean task – and one companies are failing at, too.

YouTube’s over-reliance on algorithms and technology has allowed for a lot of awful content to see daylight – including inappropriate videos aimed a children, disturbing videos, terrorist propaganda, hate speech, fake news and conspiracy theories, unlabeled ads disguised as product reviews or as “fun” content, videos of kids that attract pedophiles, and commenting systems that allowed for harassment and trolling at scale.

To name a few.

YouTube may have woken up late to its numerous issues, but it’s not ignorant of them, at least.

“We know the last year has not been easy for many of you. But we’re committed to listening and using your feedback to help YouTube thrive,” Wojcicki said. “While we’re proud of this progress, I know we have more work to do.”

That’s putting it mildly.

Cloud SQL for PostgreSQL now generally available

Among open-source relational databases, PostgreSQL is one of the most popular—and the most sought-after by Google Cloud Platform (GCP) users. Today, we’re thrilled to announce that PostgreSQL is now generally available and fully supported for all customers on our Cloud SQL fully-managed database service.

Backed by Google’s 24x7 SRE team, high availability with automatic failover, and our SLA, Cloud SQL for PostgreSQL is ready for the demands of your production workloads. It’s built on the strength and reliability of Google Cloud’s infrastructure, scales to support critical workloads and automates all of your backups, replication, patches and updates while ensuring greater than 99.95% availability anywhere in the world. Cloud SQL lets you focus on your application, not your IT operations.

While Cloud SQL for PostgreSQL was in beta, we added high availability and replication, higher performance instances with up to 416GB of RAM, and support for 19 additional extensions. It also joined the Google Cloud Business Associates Agreement (BAA) for HIPAA-covered customers.

Cloud SQL for PostgreSQL runs standard PostgreSQL to maintain compatibility. And when we make improvements to PostgreSQL, we make them available for everyone by contributing to the open source community.

Throughout beta, thousands of customers from a variety of industries such as commercial real estate, satellite imagery, and online retail, deployed workloads on Cloud SQL for PostgreSQL. Here’s how one customer is using Cloud SQL for PostgreSQL to decentralize their data management and scale their business.

How OneMarket decentralizes data management with Cloud SQL

OneMarket is reshaping the way the world shops. Through the power of data, technology, and cross-industry collaboration, OneMarket’s goal is to create better end-to-end retail experiences for consumers.

Built out of Westfield Labs and Westfield Retail Solutions, OneMarket unites retailers, brands, venues and partners to facilitate collaboration on data insights and implement new technologies, such as natural language processing, artificial intelligence and augmented reality at scale.

To build the platform for a network of retailers, venues and technology partners, OneMarket selected GCP, citing its global locations and managed services such as Kubernetes Engine and Cloud SQL.

"I want to focus on business problems. My team uses managed services, like Cloud SQL for PostgreSQL, so we can focus on shipping better quality code and improve our time to market. If we had to worry about servers and systems, we would be spending a lot more time on important, but somewhat insignificant management tasks. As our CTO says, we don’t want to build the plumbing, we want to build the house."

— Peter McInerney, Senior Director of Technical Operations at OneMarket

The OneMarket team employs a microservices architecture to develop, deploy and update parts of their platform quickly and safely. Each microservice is backed by an independent storage service. Cloud SQL for PostgreSQL instances back many of the platform’s 15 microservices, decentralizing data management and ensuring that each service is independently scalable.

"I sometimes reflect on where we were with Westfield Digital in 2008 and 2009. The team was constantly in the datacenter to maintain servers and manage failed disks. Now, it is so easy to scale."

— Peter McInerney

Because the team was able to focus on data models rather than database management, developing the OneMarket platform proceeded smoothly and is now in production, reliably processing transactions for its global customers. Using BigQuery and Cloud SQL for PostgreSQL, OneMarket analyzes data and provides insights into consumer behavior and intent to retailers around the world.

Peter’s advice for companies evaluating cloud solutions like Cloud SQL for PostgreSQL: “You just have to give it a go. Pick a non-critical service and get it running in the cloud to begin building confidence.”

Getting started with Cloud SQL for PostgreSQL

Connecting to a Google Cloud SQL database is the same as connecting to a PostgreSQL database—you use standard connectors and standard tools such as pg_dump to migrate data. If you need assistance, our partner ecosystem can help you get acquainted with Cloud SQL for PostgreSQL. To streamline data transfer, reach out to Google Cloud partners Alooma, Informatica, Segment, Stitch, Talend and Xplenty. For help with visualizing analytics data, try ChartIO, iCharts, Looker, Metabase, and Zoomdata.

Sign up for a $300 credit to try Cloud SQL and the rest of GCP. You can start with inexpensive micro instances for testing and development, and scale them up to serve performance-intensive applications when you’re ready.

Cloud SQL for PostgreSQL reaching general availability is a huge milestone and the best is still to come. Let us know what other features and capabilities you need with our Issue Tracker and by joining the Cloud SQL discussion group. We’re glad you’re along for the ride, and look forward to your feedback!

Upgradeable smart contracts in Ethereum

Imagine a world where a software is maintaining millions of dollars worth of money, but there is an exploit which allows the hacker to take all that money away. Now imagine you can't do much about it because you can't update your software, so all you can do is wait and watch or pull the plug of the whole server.

This is a world we are living in with the software/contracts being developed for the Ethereum blockchain.

Immutability of blockchain has its advantages in making sure that things are tamper proof and the whole history of change can be seen publicly and audited. When it comes to smart contracts, immutability of the contract code has its disadvantages which makes it hard to update in case of bugs. The DAO and the Parity Wallet exploit are a good example of why smart contracts should have the capability to upgrade.

There is no de facto way of upgrading a contract. Several approaches have been developed and I'm going to discuss one of the better ones in my opinion.

Understanding how contracts are called

In Ethereum virtual machine (EVM) there are various ways by which contract A can invoke some function in contract B. Lets go over them

call: This calls the contract B at the given address with provided data, gas and ether. When the call is made the context is changed to this contract B context i.e. the storage used will be of the called contract. The

msg.senderwill be the calling contract A and not the originalmsg.sender.callcode: This is similar to call but the only difference is that the context will be of original contract A so the storage will not be changed to that of the called contract B. This means the code in the contract B can essentially manipulate storage of the contract A. The

msg.senderwill be the calling contract A and not the originalmsg.sender.delegatecall: This is similar to callcode but here the

msg.senderandmsg.valuewill be the original ones. It's as if the user called this contract B directly.

Here delegatecall seems of interest, as this can allow us to proxy a call from the user to a different contract. Now we need to build a contract that allows us to do that.

Upgradeable smart contract

We will write a smart contract that has an owner and it proxies all calls to a different contract address. The owner should be able to upgrade the contract address to which the code proxies to.

This is what that contract would look like

Let's dissect the code

Let's dissect above code. function () payable public is the fallback function. When a user calls a contract without a function call (when sending ether) or a function call that is not implemented, it all gets routed to the fallback function. The function can do whatever it wants to. As we don't know what functions our contracts will have, nor how will it change so this is the ideal place where we route all calls made by user to the required contract address.

Inside this function we have some EVM assembly, as what we want to do is not currently directly available in Solidity.

let ptr := mload(0x40)

calldatacopy(ptr, 0, calldatasize) As the user can send data which would contain information about which function to call and what are the arguments of that function so we also need to send it to our implementation contract. In Solidity the address to free memory is stored at location 0x40. We load the data at 0x40 address and assign it name ptr. Then we call calldatacopy to copy all the data passed in by user to memory starting from ptr address.

let result := delegatecall(gas, _impl, ptr, calldatasize, 0, 0) Here we use the delegatecall as discussed before to call the contract. The result returned is either 0 or 1. 0 means that there was some failure so we need to revert. 1 means the contract code execution was successful.

let size := returndatasize

returndatacopy(ptr, 0, size) As the execution of code might have returned some data so we need to return it back to user, here we copy the returned data to memory starting at ptr address.

switch result

case 0 { revert(ptr, size) }

case 1 { return(ptr, size) } Lastly we just decided whether to return or revert based on the result of delegatecall.

We inherit the contract from Ownable so the contract will have an owner. We expose updateImplementation(address) function to update the implementation and it can only be executed by the owner of contract.

Deploy an upgradeable contract

First deploy the UpgradeableContractProxy contract. Let's say we have a global calculator contract, which has list of numbers in storage and we can get the sum of all numbers in it.

Let's take that contract and deploy it. It should give us some address, now use that address as one of the parameters to call updateImplementation of the previously deployed UpgradeableContractProxy contract. Now our proxy should point to the deployed GlobalCalculator_V1 contract.

Now use the GlobalCalculator_V1 contract interface (ABI) to call the proxy contract. So if you are using web3js then you can do the following

const myGlobalCalculator = new web3.eth.Contract(GlobalCalculator_V1_JSON, 'PROXY_CONTRACT_ADDRESS'); Now when you make a function call to proxy, it will delegate the call to your global calculator contract. Let say you called few addNum(uint) to add numbers to the _nums list in storage and now it has [2, 5] as value.

Let's add a multiplication functionality in your updated contract. We create a new contract which inherits from GlobalCalculator_V1 and add that feature.

Let's deploy GlobalCalculator_V2 contract and call updateImplementation(address) of the proxy to point to this newly deployed contract address.

You can use the GlobalCalculator_V2 contract interface (ABI) to call same proxy contract which would route the call to new implementation.

const myGlobalCalculator_v2 = new web3.eth.Contract(GlobalCalculator_V2_JSON, 'PROXY_CONTRACT_ADDRESS'); You should have the ability to call getMul() now. One thing to notice is that if I call getSum it will return 7 as the _nums in storage is still the same as the old one and have value [2, 5]. So in this way our storage is maintained and we can add more functionality to our contract. The users also don't have to change the address as they always call the proxy contract which delegates it to other contracts.

Pros

- Ability to upgrade code.

- Ability to retain storage, no need to move data.

- Easy to change and fix.

Cons

- As we use fallback function to delegate so ether can't be directly sent to contract (due to not enough gas when using

.transferor.send). We need to have a specific function that needs to be called for receiving ether. - There is some gas cost for executing the proxy logic.

- Your updated codes needs to be backwards compatible.

- The update capability is controlled by one entity. This can be solved by writing a contract that uses tokens and voting mechanism to allow the community to decide whether to update or not.

If you want to look at an example project using truffle which is testing an upgradeable contract see this.

Disclaimer

Don't use the above contract for production as it's not audited for exploits. It is only provided here for educational purpose.

Reference

Google shuttering domain fronting, Signal moving to souqcdn.com

The End of Software: Will Programming Become Obsolete?

Tell a crowd of nerds that software is coming to an end, and you’ll get laughed out of the bar. The very notion that the amount of software and software development in the world will do anything besides continue on an exponential growth curve is unthinkable in most circles. Examine any industry data and you’ll see the same thing – software content is up and to the right. For decades, the trend in system design has been toward increasing the proportion of functionality implemented in software versus hardware, and the makeup of engineering teams has followed suit. It is not uncommon these days to see a 10:1 ratio of software versus hardware engineers on an electronic system development project. And that doesn’t count the scores of applications developed these days that are software alone.

Yep, it’s all coming to an end.

But, software is one of those five immutable elements, isn’t it – fire, water, earth, air, and code? Practically nothing exists in our technological world today without software. Rip out software and you have taken away the very essence of technology – its intelligence – its functionality – its soul.

Is software really all that important?

Let’s back up a bit. Every application, every system is designed to solve a problem. The solution to that problem can generally be broken down into two parts: the algorithm and the data. It is important to understand that the actual answer always lies within the data. The job of the algorithm is simply to find that answer amongst the data as efficiently as possible. Most systems today further break the algorithm portion of that down into two parts: hardware and software. Those elements, of course, form the basis of just about every computing system we design – which comprises most of modern technology.

We all know that, if the algorithm is simple enough, you can sometimes skip the software part. Many algorithms can be implemented in hardware that processes the data and finds the solution directly, with no software required. The original hand calculators, the early arcade video games, and many other “intelligent” devices have used this approach. If you’re not doing anything more complex than multiplication or maybe the occasional quadratic formula, bring on the TTL gates!

The problem with implementing algorithms directly in hardware, though, is that the complexity of the hardware scales with the complexity of the algorithm. Every computation or branch takes more physical logic gates. Back in the earliest days of Moore’s Law, that meant we had very, very tight restrictions on what could be done in optimized hardware. It didn’t take much complexity to strain the limits of what we were willing and able to solder onto a circuit board with discrete logic gates. “Pong” was pushing it. Anything more complicated than that and we leapt blissfully from the reckless optimism of Moore into the warm and comfortable arms of Turing and von Neumann.

The conventional von Neumann processor architecture uses “stored programs” (yep, there it is, software) to allow us to handle arbitrarily complex algorithms without increasing the complexity of our hardware. We can design one standard piece of hardware – the processor, and using that we can execute any number of arbitrarily complex algorithms. Hooray!

But every good thing comes at a price, doesn’t it? The price of programmability is probably somewhere between three and four orders of magnitude. Yep. Your algorithm might run somewhere between 100 and 10,000 times as fast if it were implemented in optimized hardware compared with software on a conventional processor. All of that business with program counters and fetching instructions is really a lot of overhead on top of the real work. And, because of the sequential nature of software, pure von Neumann machines trudge along doing things one at a time that could easily be done simultaneously.

The performance cost of software programmability is huge, but the benefits are, as well. Both hardware cost and engineering productivity are orders of magnitude better. An algorithm you could implement in a few hours in software might require months of development time to create in optimized hardware – if you could do it at all. This tradeoff is so attractive, in fact, that the world has spent over half a century optimizing it. And, during that half century, Moore’s Law has caused the underlying hardware implementation technology to explode exponentially.

The surface of software development has remained remarkably calm considering the turbulence created by Moore’s Law below. The number of components we can cram onto integrated circuits has increased by something like eight orders of magnitude since 1965, and yet we still write and debug code one line at a time. Given tens of millions of times the transistors, processors have remained surprisingly steady as well. Evolution has taken microprocessors from four to sixty-four bits, from one core to many, and has added numerous performance-enhancing features such as caches, pipelines, hardware arithmetic, predictive execution, and so forth, but the basic von Neumann architecture remains largely unchanged.

It’s important to note that all of the enhancements to processor architecture have done – all they ever can do – is to mitigate some of the penalty of programmability. We can make a processor marginally closer to the performance of optimized hardware, but we can never get there – or even close.

Of course, those eight orders of magnitude Moore’s Law has given us in transistor count also mean that the complexity of the algorithms we could implement directly in optimized hardware has similarly increased. If you’re willing and able to make a custom optimized chip to run your specific algorithm, it will always give 100x or more the performance of a software version running on a processor using similarly less energy. The problem there, of course, is the enormous cost and development effort required. Your algorithm has to be pretty important to justify the ~24-month development cycle and most likely eight-figure investment to develop an ASIC.

During that same time, however, we have seen the advent of programmable hardware, in the form of devices such as FPGAs. Now, we can implement our algorithm in something like optimized hardware using a general purpose device (an FPGA) with a penalty of about one order of magnitude compared with actual optimized hardware. This has raised the bar somewhat on the complexity of algorithms (or portions thereof) that can be done without software. In practical terms, however, the complexity of the computing problems we are trying to solve has far outpaced our ability to do them in any kind of custom hardware – including FPGAs. Instead, FPGAs and ASICs are relegated to the role of “accelerators” for key computationally intensive (but less complex) portions of algorithms that are still primarily implemented in software.

Nevertheless, we have a steadily rising level of algorithmic complexity where solutions can be implemented without software. Above that level – start coding. But there is also a level of complexity where software starts to fail as well. Humans can create algorithms only for problems they know how to solve. Yes, we can break complex problems down hierarchically into smaller, solvable units and divide those portions up among teams or individuals with various forms of expertise, but when we reach a problem we do not know how to solve, we cannot write an algorithm to do it.

As a brilliant engineer once told me, “There is no number of 140 IQ people that will replace a 160 IQ person.” OK, this person DID have a 160 IQ (so maybe there was bias in play) but the concept is solid. We cannot write a program to solve a problem we are not smart enough to solve ourselves.

Until AI.

With deep learning, for example, we are basically bypassing the software stage and letting the data itself create the algorithm. We give our system a pile of data and tell it the kind of answer we’re looking for, and the system itself divines the method. And, in most cases, we don’t know how it’s doing it. AI may be able to look at images of a thousand faces and tell us accurately which ones are lying, but we don’t know if it’s going by the angle of the eyebrows, the amount of perspiration, the curl of the lip, or the flaring of the nostrils. Most likely, it is a subtle and complex combination of those.

We now have the role of software bounded – both on the low- and high-complexity sides. If the problem is too simple, it may be subsumed by much more efficient dedicated hardware. If it’s too complex, it may be taken over by AI at a much lower development cost. And the trendlines for each of those is moving toward the center – slowly reducing the role of classical software.

As we build more and more dedicated hardware to accelerate high compute load algorithms, and as we evolve the architectures for AI to expand the scope of problems it can solve, we could well slowly reduce the role of traditional software to nothing. We’d have a world without code.

I wouldn’t go back to bartending school just yet, though. While the von Neumann architecture is under assault from all sides, it has a heck of a moat built for itself. We are likely to see the rapid acceleration of software IP and the continued evolution of programming methods toward higher levels of abstraction and increased productivity for software engineers. Data science will merge with computer science in yet-unforeseen ways, and there will always be demanding problems for bright minds to solve. It will be interesting to watch.

WaystoCap (YC W17) Is Unlocking African Trade. Full Stack Developer? Join Us

A bit about us:

Hi! We're WaystoCap a Y Combinator startup backed by a top tier VC: Battery Ventures; and we are building the future trading platform of B2B in Africa.

We are on a mission to unlock trade in Africa, by creating transparency and trust in international trade.

Our headquarters are in: Morocco and Spain; with offices in the UK and Benin too!

We use the most up-to-date technologies, and we are looking for software developers to help us develop our trading platform and extend our micro services architecture.

Please note as we are still a small company we are looking for people to join us on our journey in the office in Marbella, Spain. We believe building a great company culture starts working together!

A little more about what we do:

Our mission is to help businesses in Africa work with trusted partners globally and grow their company. We are building a trust platform, as its the missing component in any trade! We do more than just a listing or marketplace site, and help our buyers and sellers go through the entire trade process more easily, efficiently, and improved with technology.

Here is a short video about us: The African Opportunity

We want every African business to be able to trade internationally, just like any company and take advantage of cutting edge tech to solve payments, logistics, and most importantly trust issues. In our first year of full operations (2017) we already grew 300%, and the sky is the limit!

Your challenge as a full stack developer:

You will be part of a cross functional agile team helping to build a strong and scalable products. The team will plan, design and build innovative solutions to unlock international trading in Africa. backed by a deep understanding of software engineering and best practices, your duties will include build, monitor and maintain scalable applications.

We are looking for full stack software engineers with:

- Exceptional ability to work anywhere in the technical stack, delivering quality code both on the frontend and backend.

- Fluency in any backend server language, and expertise in relational databases and schema design.

- Strong motivation to drive impact by making product improvements.

- An ability to share knowledge, and evangelize best practices.

- You should be proactive, with strong good communication skills and an ability to learn fast.

Requirements:

- More than 4 years professional experience

- Experience in programming with one of the following language: Golang, Node, Java, Scala

- Experience in software design and testing

- Experience in database and storage technologies (RDBMS, NoSQL,...)

- Experience in frontend technologies (PWA, ReactJS/angular/VueJs)

- Experience with CI/CD pipeline

- Experience working on an agile team

What we offer:

- Product ownership and decision making in the entire development process

- Work with a talented team and learn from international advisors and investors

- Work in sunny Marbella, with a great lifestyle and excellent quality of living

- Be involved in a fundamental company supporting development in Africa

- Very competitive salary and stock options in a Silicon Valley startup

- Regular afterwork drinks, company team buildings, celebrations

- Generous PTO

- Support for relocation (if needed)

- Company provided laptop and screens (you choose your set up!)

- Have a voice in what tools you want us to use

Why does software cost so much?

Posted on by in Data Modeling and Analytics

By Robert Stoddard Principal Researcher Software Solutions Division

Cost estimation was cited by the Government Accountability Office (GAO) as one of the top two reasons why DoD programs continue to have cost overruns. How can we better estimate and manage the cost of systems that are increasingly software intensive? To contain costs, it is essential to understand the factors that drive costs and which ones can be controlled. Although we understand the relationships between certain factors, we do not yet separate the causal influences from non-causal statistical correlations. In this blog post, we explore how the use of an approach known as causal learning can help the DoD identify factors that actually cause software costs to soar and therefore provide more reliable guidance as to how to intervene to better control costs.

As the role of software in the DoD continues to increase so does the need to control the cost of software development and sustainment. Consider the following trends cited in a March 2017 report from the Institute for Defense Analysis:

- The National Research Council (2010) wrote that "The extent of the DoD code in service has been increasing by more than an order of magnitude every decade, and a similar growth pattern has been exhibited within individual, long-lived military systems."

- The Army (2011) estimated that the volume of code under Army depot maintenance (either post-deployment or post-production support) had increased from 5 million to 240 million SLOC between 1980 and 2009. This trend corresponds to approximately 15 percent annual growth.

- A December 2017 Selected Acquisition Report (SAR) showed cost growth in large-scale DoD programs is common, with a $91 billion cost growth to-date (engineering and estimating) in the DoD portfolio. Poor cost estimation, including early lifecycle estimates, represents almost $8 billion of the $91 billion.

The SEI has a long track record of cost-related research to help the DoD manage costs. In 2012, we introduced Quantifying Uncertainty in Early Lifecycle Cost Estimation (QUELCE) as a method for improving pre-Milestone-A software cost estimates through research designed to improve judgment regarding uncertainty in key assumptions (which we term "program change drivers"), the relationships among the program change drivers, and their impact on cost.

QUELCE synthesized scenario planning workshops, Bayesian Belief Network (BBN) modeling, and Monte Carlo simulation into an estimation method that quantifies uncertainties, allows subjective inputs, visually depicts influential relationships among program change drivers and outputs, and assists with the explicit description and documentation underlying an estimate. The outputs of QUELCE necessarily then feed the inputs to existing cost estimation machinery.

While QUELCE focused on early-lifecycle cost estimation (which is highly dependent on subjective expert judgment) to create a connected probabilistic model of change drivers, we realized that major portions of the model could be derived from causal learning of historical DoD program experiences. We found that several existing cost-estimation tools could go beyond traditional statistical correlation and regression and benefit from causal learning as well.

The focus on cost estimation alone does not require causal knowledge, as it is focused on prediction rather than intervention. When prediction is the goal, statistical and maximum likelihood (ML) estimation methods are suitable. If the goal, however, is to intervene to deliberately cause a change via policy or management action, causal learning is more suitable and offers benefits beyond statistical and machine learning methods.

The problem is that statistical correlation and regression alone do not reveal causation because correlation is only a noisy indicator of a causal connections. As a result, the relationships identified by traditional correlation-based statistical methods are of limited value for driving cost reductions. Moreover, the magnitude of cost reductions cannot be confidently derived from the current body of cost-estimation work.

At the SEI, I am working with a team of researchers including

- Mike Konrad, who has background in machine, causal, and deep learning and has recently co-developed measures and analytic tools for the Complexity and Safety, Architecture-Led Incremental System Assurance (ALISA), and other research projects,

- Dave Zubrow, who has an extensive background in statistical analysis and led the development of the SEI DoD Factbook, based on DoD Software Resource Data Report (SRDR) program reporting data, and

- Bill Nichols, who has extensive background with the Team Software Process (TSP) and Personal Software Process (PSP) frameworks, analyzing associated costs, and in designing an organizational dashboard to monitor their effectiveness and deployment.

Why Do We Care About Causal Learning?

If we want to proactively control outcomes, we would be far more effective if we knew which organizational, program, training, process, tool, and personnel factors actually caused the outcomes we care about. To know this information with any certainty, we must move beyond correlation and regression to the subject of causation. We also want to establish causation without the expense and challenge of conducting controlled experiments, the traditional approach to learning about causation within a domain. Establishing causation with observational data remains a vital need and a key technical challenge.

Different approaches have arisen to help determine which factors cause which outcomes from observational data. Some approaches are constraint-based, in which conditional independence tests reveal the graphical structure of the data (e.g., if two variables are independent conditioned on a third, there is no edge connecting them). Other approaches are score-based, in which causal graphs are incrementally grown and then shrunk to improve the score (likelihood-based) of how well the resulting graph fits the data. Such a categorization, however, barely does justice to the diversity of causal algorithms that have been developed to address non-linear--as well as linear--causal systems with Gaussian-- as well as non-Gaussian--error terms.

Judea Pearl, who is credited as the inventor of Bayesian Networks and one of the pioneers of the field, wrote the following about causal learning in Bayesian Networks to Causal and Counterfactual Reasoning:

The development of Bayesian Networks, so people tell me, marked a turning point in the way uncertainty is handled in computer systems. For me, this development was a stepping stone towards a more profound transition, from reasoning about beliefs to reasoning about casual and counterfactual relationships.

Causal learning has come of age from both a theoretical and practical tooling standpoint. Causal learning may be performed on data whether it is derived from experimentation or passive observation. Causal learning has been used recently to identify causal factors within domains such as energy economics, foreign investment benefits, and medical research.

For the past two years, our team has trained in causal learning (causal discovery and causal estimation) at Carnegie Mellon University (CMU) through workshops led by Dr. Richard Scheines, Dean of the Dietrich College of Humanities and Social Sciences, whose research examined graphical and statistical causal inference and foundations of causation. We have also trained and worked with several faculty and staff within the CMU Department of Philosophy led by Dr. David Danks, whose research focuses on causal learning (human and machine). Additional Department of Philosophy faculty and staff include Peter Spirtes, a professor of graphical and statistical modeling of causes; Kun Zhang, professor in time-series-oriented causal learning; Joseph Ramsey, the director of research computing; and Madelyn Glymour, causal analyst and co-author of a causal-learning primer.

What Are the Causal Factors Driving Software Cost?

Armed with this new approach, we are exploring causal factors driving software cost within DoD programs and systems. For example, vendor tools might identify anywhere between 25 and 45 inputs that are used to estimate software development. We believe that the great majority of these factors are not causal and thus distract program managers from identifying factors that should be controlled. For example, our approach may identify that one causal factor in software cost is the level of a programmer's experience. Such a result would allow the DoD to recommend and review the level of experience of programmers involved in a software-development project.

The first phase of our research began an initial assessment of causality at multiple levels of granularity to identify and evaluate families of factors related to causality (e.g., customer interface, team composition, experience with domain and tool, design and code complexity, and quality). We have begun to analyze and apply this approach to software development project data from several sources, including:

We are initiating collaborations with major commercial cost-estimation-tool vendors. Through these collaborations, the vendors will be instructed by our research team in conducting their own causal studies on their individual proprietary cost-estimation databases and sharing sanitized causal conclusions with our research team.

We are also working with two doctoral students advised by Dr. Barry Boehm, creator of the Constructive Cost Model (COCOMO) and founding director of the Center for Systems and Software Engineering at the University of Southern California (Los Angeles). Last year, our team trained these and other students in causal learning and began coaching them to analyze their tightly controlled COCOMO research data. An initial paper summarizing the work has been published and several other papers will be presented at the 2018 Acquisition Research Symposium.

The tooling for causal learning consists primarily of the Tetrad tool, an open-source program developed and maintained by CMU and University of Pittsburgh researchers, through the Center for Causal Discovery, that provides access to several dozen causal-learning algorithms to enable causal search, inference, and estimation. The aim of Tetrad is to provide a more complete set of causal search algorithms in a friendly graphical user interface requiring no programming knowledge.

Looking Ahead

While we are excited with the potential of causal learning, we are limited by the availability of relevant research data. In many cases, available data may not be shareable due to proprietary concerns or federal regulations governing the access to software project data. The SEI is currently participating in a pilot program through which more software program data may be made available for research. We continue to solicit additional research collaborators who have access to research or field data and who want to learn more about causal learning.

Having recently completed a first-year exploratory research project on applying causal learning, we just embarked on a three-year research project to explore more specific factors at greater depth including where alternative factors may identify more causal influences of DoD software program cost. These additional factors will then be incorporated into the causal model to provide a holistic causal cost model for further research and tool development, as well as guidance for software development and acquisition program managers.

Additional Resources

Learn more about Causal Learning:

CMU Open Learning Initiative: Causal & Statistical Reasoning

This tutorial on Causal Learning by Richard Scheines is part 1 of the Workshop on Case Studies of Causal Discovery with Model Search, held on October 25-27, 2013, at Carnegie Mellon University.

Center for Causal Discovery Summer Seminar 2016 Part 1: An Overview of Graphical Models, Loading Tetrad, Causal Graphs, and Interventions

Center for Causal Discovery Summer Seminar 2016 Part 2

Center for Causal Discovery Summer Seminar 2016 Part 3

Center for Causal Discovery Summer Seminar 2016 Part 4

Center for Causal Discovery Summer Seminar 2016 Part 5

Center for Causal Discovery Summer Seminar 2016 Part 6

Center for Causal Discovery Summer Seminar 2016 Part 7

Center for Causal Discovery Summer Seminar 2016 Part 8

Center for Causal Discovery Summer Seminar 2016 Part 9

Center for Causal Discovery Summer Seminar 2016 Part 10

Center for Causal Discovery Summer Seminar 2016 Part 11

Center for Causal Discovery Summer Seminar 2016 Part 12

Center for Causal Discovery Summer Seminar 2016 Part 13

The Book of Why: The New Science of Cause and Effect 1st Edition

Causal Inference in Statistics: A Primer

Counterfactuals and Causal Inference: Methods and Principles for Social Research (Analytical Methods for Social Research)

Python 3.7: Introducing Data Classes

Python 3.7 is set to be released this summer, let’s have a sneak peek at some of the new features! If you’d like to play along at home with PyCharm, make sure you get PyCharm 2018.1 (or later if you’re reading this from the future).

There are many new things in Python 3.7: various character set improvements, postponed evaluation of annotations, and more. One of the most exciting new features is support for the dataclass decorator.

Most Python developers will have written many classes which looks like:

def__init__(self,var_a,var_b): |

Data classes help you by automatically generating dunder methods for simple cases. For example, a __init__ which accepted those arguments and assigned each to self. The small example before could be rewritten like:

A key difference is that type hints are actually required for data classes. If you’ve never used a type hint before: they allow you to mark what type a certain variable _should_ be. At runtime, these types are not checked, but you can use PyCharm or a command-line tool like mypy to check your code statically.

So let’s have a look at how we can use this!

You know a movie’s fanbase is passionate when a fan creates a REST API with the movie’s data in it. One Star Wars fan has done exactly that, and created the Star Wars API. He’s actually gone even further, and created a Python wrapper library for it.

Let’s forget for a second that there’s already a wrapper out there, and see how we could write our own.

We can use the requests library to get a resource from the Star Wars API:

response=requests.get('https://swapi.co/api/films/1/') |

This endpoint (like all swapi endpoints) responds with a JSON message. Requests makes our life easier by offering JSON parsing:

dictionary=response.json() |

And at this point we have our data in a dictionary. Let’s have a look at it (shortened):

'characters':['https://swapi.co/api/people/1/', …], 'created':'2014-12-10T14:23:31.880000Z', 'director':'George Lucas', 'edited':'2015-04-11T09:46:52.774897Z', 'opening_crawl':'It is a period of civil war.\r\n … ', 'planets':['https://swapi.co/api/planets/2/', 'producer':'Gary Kurtz, Rick McCallum', 'release_date':'1977-05-25', 'species':['https://swapi.co/api/species/5/', ...], 'starships':['https://swapi.co/api/starships/2/', ...], 'url':'https://swapi.co/api/films/1/', 'vehicles':['https://swapi.co/api/vehicles/4/', ...] |

To properly wrap an API, we should create objects that our wrapper’s user can use in their application. So let’s define an object in Python 3.6 to contain the responses of requests to the /films/ endpoint:

title:str, episode_id:int, opening_crawl:str, director:str, producer:str, release_date:datetime, characters:List[str], planets:List[str], starships:List[str], vehicles:List[str], species:List[str], created:datetime, edited:datetime, url:str ): self.title=title self.episode_id=episode_id self.opening_crawl=opening_crawl self.director=director self.producer=producer self.release_date=release_date self.characters=characters self.planets=planets self.starships=starships self.vehicles=vehicles self.species=species self.created=created self.edited=edited self.url=url iftype(self.release_date)isstr: self.release_date=dateutil.parser.parse(self.release_date) iftype(self.created)isstr: self.created=dateutil.parser.parse(self.created) iftype(self.edited)isstr: self.edited=dateutil.parser.parse(self.edited) |

Careful readers may have noticed a little bit of duplicated code here. Not so careful readers may want to have a look at the complete Python 3.6 implementation: it’s not short.

This is a classic case of where the data class decorator can help you out. We’re creating a class that mostly holds data, and only does a little validation. So let’s have a look at what we need to change.

Firstly, data classes automatically generate several dunder methods. If we don’t specify any options to the dataclass decorator, the generated methods are: __init__, __eq__, and __repr__. Python by default (not just for data classes) will implement __str__ to return the output of __repr__ if you’ve defined __repr__ but not __str__. Therefore, you get four dunder methods implemented just by changing the code to:

release_date:datetime characters:List[str] starships:List[str] |

We removed the __init__ method here to make sure the data class decorator can add the one it generates. Unfortunately, we lost a bit of functionality in the process. Our Python 3.6 constructor didn’t just define all values, but it also attempted to parse dates. How can we do that with a data class?

If we were to override __init__, we’d lose the benefit of the data class. Therefore a new dunder method was defined for any additional processing: __post_init__. Let’s see what a __post_init__ method would look like for our wrapper class:

iftype(self.release_date)isstr: self.release_date=dateutil.parser.parse(self.release_date) iftype(self.created)isstr: self.created=dateutil.parser.parse(self.created) iftype(self.edited)isstr: self.edited=dateutil.parser.parse(self.edited) |

And that’s it! We could implement our class using the data class decorator in under a third of the number of lines as we could without the data class decorator.

By using options with the decorator, you can tailor data classes further for your use case. The default options are:

@dataclass(init=True,repr=True,eq=True,order=False,unsafe_hash=False,frozen=False) |

- init determines whether to generate the

__init__dunder method. - repr determines whether to generate the

__repr__dunder method. - eq does the same for the

__eq__dunder method, which determines the behavior for equality checks (your_class_instance == another_instance). - order actually creates four dunder methods, which determine the behavior for all lesser than and/or more than checks. If you set this to true, you can sort a list of your objects.

The last two options determine whether or not your object can be hashed. This is necessary (for example) if you want to use your class’ objects as dictionary keys. A hash function should remain constant for the life of the objects, otherwise the dictionary will not be able to find your objects anymore. The default implementation of a data class’ __hash__ function will return a hash over all objects in the data class. Therefore it’s only generated by default if you also make your objects read-only (by specifying frozen=True).

By setting frozen=True any write to your object will raise an error. If you think this is too draconian, but you still know it will never change, you could specify unsafe_hash=True instead. The authors of the data class decorator recommend you don’t though.

If you want to learn more about data classes, you can read the PEP or just get started and play with them yourself! Let us know in the comments what you’re using data classes for!

Random Darknet Shopper: A Live Mail Art Piece

------------------------------------------------------------------------

RANDOM██████╗ █████╗ ██████╗ ██╗ ██╗███╗ ██╗███████╗████████╗

██╔══██╗██╔══██╗██╔══██╗██║ ██╔╝████╗ ██║██╔════╝╚══██╔══╝

██║ ██║███████║██████╔╝█████╔╝ ██╔██╗ ██║█████╗ ██║

██║ ██║██╔══██║██╔══██╗██╔═██╗ ██║╚██╗██║██╔══╝ ██║

██████╔╝██║ ██║██║ ██║██║ ██╗██║ ╚████║███████╗ ██║

╚═════╝ ╚═╝ ╚═╝╚═╝ ╚═╝╚═╝ ╚═╝╚═╝ ╚═══╝╚══════╝ ╚═╝

SHOPPER

Version 0.13 / Runs on AlphaBay Market

------------------------------------------------------------------------

DATE: 30.12.2015

ITEM NO: 18

------------------------------------------------------------------------

OPENING ALPHABAY MARKET - http://lo4wpvx3tcdbqra4.onion

CONNECTING VIA TOR (this might take a while)

------------------------------------------------------------------------

LOGIN

INEJECTING COOKIE

------------------------------------------------------------------------

CHOOSING RANDOM CATEGORY: Fraud > Personal Information & Scans > Personal Information & Scans

FILTERING ITEMS WHICH ARE SHIPPED TO UK & ARE BELOW 100 USD

COLLECTING ALL ITEMS IN CATEGORY

------------------------------------------------------------------------------------

NR | ITEM | VENDOR | BTC | USD |

------------------------------------------------------------------------------------

1 | Personal Information~SSN~DOB~ect... | BooMstick | 0.0005 |$ 0.2 |

2 | ( ^Θ^) ID Template and Scan Pack 13 GB ... | tastych... | 0.0006 |$ 0.25 |

3 | CreditCard Template Pack!!!!! | Halfman | 0.0007 |$ 0.28 |

4 | +++ 0.50 cents FRESH 2015 USA PROFILES... | wakawaka | 0.0012 |$ 0.5 |

5 | USA Full informations profile with SSN | bakov | 0.0012 |$ 0.5 |

6 | 1100+ Personal Information From USA, 45... | bestworks | 0.0012 |$ 0.5 |

7 | [MasterSplynter2] Florida Drivers Licen... | masters... | 0.0018 |$ 0.75 |

8 | Personal Information~SSN~DOB Fulls - ... | verdugin | 0.0019 |$ 0.8 |

9 | German Templates: CCs, Documents ++more | zZz | 0.0023 |$ 0.99 |

10 | ** DRIVER SCAN FLORIDA ** MEN | Dark-Eu... | 0.0024 |$ 1.0 |

11 | [GlobalData] USA Profile /w Background ... | globaldata | 0.0024 |$ 1.0 |

12 | Personal Inforamtion + | BooMstick | 0.0024 |$ 1.0 |

13 | APRIL 2015 FRESH USA PROFILES SSN/DOB | wakawaka | 0.0024 |$ 1.0 |

14 | National Identity Card (Italy) with SSN... | GrayFac... | 0.0024 |$ 1.0 |

15 | Bitcoin lottery | MoneyTr... | 0.0024 |$ 1.0 |

16 | Personal Information~SSN~DOB~ect... | BooMstick | 0.0005 |$ 0.2 |

17 | ( ^Θ^) ID Template and Scan Pack 13 GB ... | tastych... | 0.0006 |$ 0.25 |

18 | CreditCard Template Pack!!!!! | Halfman | 0.0007 |$ 0.28 |

19 | +++ 0.50 cents FRESH 2015 USA PROFILES... | wakawaka | 0.0012 |$ 0.5 |

20 | USA Full informations profile with SSN | bakov | 0.0012 |$ 0.5 |

21 | 1100+ Personal Information From USA, 45... | bestworks | 0.0012 |$ 0.5 |

22 | [MasterSplynter2] Florida Drivers Licen... | masters... | 0.0018 |$ 0.75 |

23 | Personal Information~SSN~DOB Fulls - ... | verdugin | 0.0019 |$ 0.8 |

24 | German Templates: CCs, Documents ++more | zZz | 0.0023 |$ 0.99 |

25 | ** DRIVER SCAN FLORIDA ** MEN | Dark-Eu... | 0.0024 |$ 1.0 |

26 | [GlobalData] USA Profile /w Background ... | globaldata | 0.0024 |$ 1.0 |

27 | Personal Inforamtion + | BooMstick | 0.0024 |$ 1.0 |

28 | APRIL 2015 FRESH USA PROFILES SSN/DOB | wakawaka | 0.0024 |$ 1.0 |

29 | National Identity Card (Italy) with SSN... | GrayFac... | 0.0024 |$ 1.0 |

30 | Bitcoin lottery | MoneyTr... | 0.0024 |$ 1.0 |

31 | [GlobalData] USA Profile /w Background ... | globaldata | 0.0024 |$ 1.0 |

32 | ** DRIVER SCAN FLORIDA ** MEN | Dark-Eu... | 0.0024 |$ 1.0 |

33 | |Ultra HQ Scan | Verification Documents... | Raven1 | 0.0024 |$ 1.0 |

34 | APRIL 2015 FRESH USA PROFILES SSN/DOB | wakawaka | 0.0024 |$ 1.0 |

35 | ✯ Premium HQ PSD Templates ✯ USA ✯ Phot... | Hund | 0.0025 |$ 1.05 |

36 | German ID And Other Scan | shonajaan | 0.0026 |$ 1.1 |

37 | 2015 FRESH SSN + DOB Fulls - All 50 Sta... | Andigatel | 0.003 |$ 1.25 |

38 | FBI,CIA and Other US Government Officia... | bestworks | 0.0033 |$ 1.4 |

39 | + USA PROFILES SSN/DOB/DL/BANK + FREE ... | wakawaka | 0.0036 |$ 1.5 |

40 | UK DEAD FULLZ/PROFILES | pringle... | 0.0036 |$ 1.5 |

41 | ★★★7 REASONS WHY THAT CVV YOUR USING GE... | GodsLef... | 0.0036 |$ 1.5 |

42 | [GlobalData] UK Profiles - 50% Discount! | globaldata | 0.0036 |$ 1.5 |

43 | [AUTOSHOP] UK LEADS NAME|ADDRESS|MOBILE... | UKDOCS | 0.0036 |$ 1.54 |

44 | ||Ultra HQ Scan|| Custom Passport, DL, ... | sky88 | 0.0047 |$ 2.0 |

45 | ** PASSPORT SCAN LITHUANIA ** MEN | Dark-Eu... | 0.0047 |$ 2.0 |

46 | ** PASSPORT SCAN LITHUANIA ** MEN | Dark-Eu... | 0.0047 |$ 2.0 |

47 | ||Ultra HQ Scan|| Custom Passport, DL, ... | sky88 | 0.0047 |$ 2.0 |

48 | $$ Highest Quality BANKING FULLZ USA $$ | Grimm | 0.0047 |$ 2.0 |

49 | ** ID CARD SCAN CHINA ** MEN ** | Dark-Eu... | 0.0047 |$ 2.0 |

50 | Business Profiles EIN + DOB + SSN ( Per... | Despot | 0.0047 |$ 2.0 |

51 | US Passport and Other Scan Read Full Li... | shonajaan | 0.0052 |$ 2.2 |

52 | 272 Passport Scans From 46 Countries | bestworks | 0.0066 |$ 2.8 |

53 | French Documents: IDs, Passports, Licen... | zZz | 0.0071 |$ 2.99 |

54 | ⚫⚫▶▶♕ ♕ HQ IDs and PASSPORTS ♕ ♕ | KingCarder | 0.0071 |$ 2.99 |

55 | How to to open your OWN Bank Drops **20... | 7awjn | 0.0071 |$ 3.0 |

56 | Wordlist for password bruteforce cracki... | Asphyxi... | 0.0071 |$ 3.0 |

57 | approximately 1000 onion sites | 7awjn | 0.0071 |$ 3.0 |

58 | ★★★LEARN HOW TO CREATE YOUR VERY OWN BA... | GodsLef... | 0.0071 |$ 3.0 |

59 | Hacked Email [ Email + Pass ] | Code | 0.0071 |$ 3.0 |

60 | UK Templates Bank Statment,Credit Card ... | shonajaan | 0.0078 |$ 3.3 |

61 | U.S. Passport & CC Templates | zZz | 0.0083 |$ 3.49 |

62 | Bitcoin lottery | MoneyTr... | 0.0095 |$ 4.0 |

63 | Valid SSN+MMN+DOB+E-mail+Phone number -... | Kingscan | 0.0095 |$ 4.0 |

64 | Make Your own BestBuy Receipt (PSD) | tkmremi | 0.0104 |$ 4.39 |

65 | Amazon Unlimited Money | COLOR | 0.0107 |$ 4.5 |

66 | ★Uk DOB Service 90% Success Rate★ | IcyEagle | 0.0118 |$ 4.99 |

67 | Unique UK Identity Set ( Passport+DL+Bi... | Kingscan | 0.0118 |$ 5.0 |

68 | Residential Lease Agreement - Use for Drop | GetVeri... | 0.0118 |$ 5.0 |

69 | BRITISH GAS UTILITY BILL "Template" [Au... | TheNice... | 0.0118 |$ 5.0 |

70 | [Editable] US Passport | Code | 0.0118 |$ 5.0 |

71 | HUGE e-mail list 500,000 emails SPAM TH... | nucleoide | 0.0118 |$ 5.0 |

72 | Aussie Email Logins ( @iinet.net.au / @... | OzRort | 0.0118 |$ 5.0 |

73 | ★★★WELLS FARGO CASHOUT GUIDE!!! LEARN H... | GodsLef... | 0.0118 |$ 5.0 |

74 | Canada Profiles / Personnal data (No cc) | REDF0X | 0.0118 |$ 5.0 |

75 | Link to US Automobile Database | GetVeri... | 0.0118 |$ 5.0 |

76 | German Documents Credit Cards Bills and... | magneto | 0.0118 |$ 5.0 |

77 | Unique BOA (Statment+CC) Custom Digital... | Kingscan | 0.0118 |$ 5.0 |

78 | ★★★WELLS FARGO CASHOUT GUIDE!!! LEARN H... | GodsLef... | 0.0118 |$ 5.0 |

79 | Link to US Automobile Database | GetVeri... | 0.0118 |$ 5.0 |

80 | [Editable] US Passport | Code | 0.0118 |$ 5.0 |

81 | USA PERSONAL PROFILES *BLACKSTAR* | BlackSt... | 0.0118 |$ 5.0 |

82 | BRITISH GAS UTILITY BILL "Template" [Au... | TheNice... | 0.0118 |$ 5.0 |

83 | ✯ Premium HQ PSD Templates ✯ German Pho... | Hund | 0.013 |$ 5.48 |

84 | USA Passport Template | adeadra... | 0.013 |$ 5.5 |

85 | Link to two (2) Databases to Find Most ... | itabraus2 | 0.0142 |$ 5.99 |

86 | ★★★HOW TO CREATE KEYGENS FOR YOUR FAVOR... | GodsLef... | 0.0142 |$ 6.0 |

87 | HQ Scans - German Passports or IDs | bakov | 0.0142 |$ 6.0 |

88 | ★★★HOW TO CARD AMAZON!!! LEARN HOW TO U... | GodsLef... | 0.0142 |$ 6.0 |

89 | SSN Social Security Number Template + B... | sexypanda | 0.0164 |$ 6.92 |

90 | **ULTIMATE MONEY MAKING PACKAGE** WELCO... | GodsLef... | 0.0166 |$ 6.99 |

91 | German ID Card - Deutscher Personalausw... | MadeInG... | 0.0169 |$ 7.13 |

92 | USA SSN+DOB Lookup | Raffi | 0.0171 |$ 7.22 |

93 | [NEW BATCH - AUTO] CANADA FULLZ CREDIT ... | phackman | 0.0171 |$ 7.22 |

94 | ★USA SSN + DOB + ADDRESS Service 90% Su... | IcyEagle | 0.0189 |$ 7.99 |

95 | ★★★WELLS FARGO TO BTC!! THIS GUIDE TEAC... | GodsLef... | 0.0189 |$ 8.0 |

96 | USA only SSN DOB Lookup. Almost 100% re... | verdugin | 0.0189 |$ 8.0 |

97 | California License - custom creation - ... | BTH-Ove... | 0.0189 |$ 8.0 |

98 | ✪✪✪✪✪ SSN Provision Service - Find SSN ... | verdugin | 0.0189 |$ 8.0 |

99 | 1 Driver's License Scan Canada Ontario | Passpor... | 0.0189 |$ 8.0 |

100| Passport scan of real person High Quali... | CardPass | 0.0189 |$ 8.0 |

101| Link to Top 2 Database to Find Most Ame... | verdugin | 0.0189 |$ 8.0 |

102| UK Birth Certificate PSD Template | S.O.M | 0.0199 |$ 8.39 |

103| ★★★MAKE THOUSANDS LEARNING HOW TO CASHO... | GodsLef... | 0.0213 |$ 9.0 |

104| HOW TO STEAL PEOPLE's INFORMATIONS ▄▀... | fake | 0.0213 |$ 9.0 |

105| PayPal VERIFIED accounts with BANK & CC... | GodsLef... | 0.0213 |$ 9.0 |

106| SUPREME FRAUD PACKAGE!!! I BRING YOU TH... | GodsLef... | 0.0225 |$ 9.5 |

107| 10x Freshly gathered USA fullz without ... | BlockKids | 0.0237 |$ 10.0 |

108| 1 Passport Scan of Canada + Matching pe... | Passpor... | 0.0237 |$ 10.0 |

109| USA SSN AND DOB SEARCH *BLACKSTAR* | BlackSt... | 0.0237 |$ 10.0 |

110| USA utility Bill digital document | LikeApr0 | 0.0237 |$ 10.0 |

111| USA Social Security Number SSN digital ... | LikeApr0 | 0.0237 |$ 10.0 |

112| ★★★CREDIT CARD FRAUD, A BEGINNERS GUIDE... | GodsLef... | 0.0237 |$ 10.0 |

113| Full USA FUllz (SSN,DOB,MMN) | QTwoTimes | 0.0237 |$ 10.0 |

114| Link to Top 2 Database to Find Most Ame... | GetVeri... | 0.0237 |$ 10.0 |

115| Aussie Docs | OzRort | 0.0237 |$ 10.0 |

116| Full USA FUllz (SSN,DOB,MMN) | QTwoTimes | 0.0237 |$ 10.0 |

117| ****GodsLeftNuts Carding Software****TH... | GodsLef... | 0.0237 |$ 10.0 |

118| CANADIAN DL SCAN REAL INFOS/DL+PASSPORT... | pringle... | 0.0237 |$ 10.0 |

119| COX.net Email Leads [Auto-... | Code | 0.0237 |$ 10.0 |

120| ★★★INTRODUCTION INTO EXPERT CARDING!!!!... | GodsLef... | 0.0237 |$ 10.0 |

121| US Bank Check Template | GetVeri... | 0.0237 |$ 10.0 |

122| ****GodsLeftNuts Carding Software****TH... | GodsLef... | 0.0237 |$ 10.0 |

123| ★ CHEAPEST PRICE Passport & Driving Lic... | Maddy1980 | 0.0237 |$ 10.0 |

124| Full USA FUllz (SSN,DOB,MMN) | QTwoTimes | 0.0237 |$ 10.0 |

125| Full USA FUllz (SSN,DOB,MMN) | QTwoTimes | 0.0237 |$ 10.0 |

126| USA utility Bill digital document | LikeApr0 | 0.0237 |$ 10.0 |

127| Full USA FUllz (SSN,DOB,MMN) | QTwoTimes | 0.0237 |$ 10.0 |

128| SSN Provision Service - Find SSN and DOB | GetVeri... | 0.0237 |$ 10.0 |

129| ★★★INTRODUCTION INTO EXPERT CARDING!!!!... | GodsLef... | 0.0237 |$ 10.0 |

130| USA Social Security Number SSN digital ... | LikeApr0 | 0.0237 |$ 10.0 |

131| UK SCANS SERVICE | datrude... | 0.0237 |$ 10.0 |

132| Find Anybody DOB 100% Accurate and Free | GetVeri... | 0.0237 |$ 10.0 |

133| UK utility Bill and template passport +... | theone | 0.0247 |$ 10.42 |

134| Custom pay stubs | Raffi | 0.0256 |$ 10.83 |

135| THE COOPERATIVE BANK STATEMENT "Templat... | Nesquik7 | 0.045 |$ 19.0 |

136| COMCAST - CUSTOM MADE UTILITY BILL | suzie | 0.026 |$ 11.0 |

137| BANK OF AMERICA - BANK STATEMENT | suzie | 0.026 |$ 11.0 |

138| SSN CARD SCAN | suzie | 0.026 |$ 11.0 |

139| Make Your own Fake Doctor Notes | tkmremi | 0.0264 |$ 11.15 |

140| EXIF data VERIFICATION BOOSTER | Battalion | 0.0267 |$ 11.26 |

141| 1 Romanian passport scan | Passpor... | 0.0284 |$ 12.0 |

142| BIG US & EU TEMPLATE PACK [PROMO PRICE] | yummy5656 | 0.0284 |$ 12.0 |

143| 1 Passport Scan of France | Passpor... | 0.0284 |$ 12.0 |

144| 1 Passport Scan of the USA | Passpor... | 0.0284 |$ 12.0 |

145| Wells Fargo VISA Bank Statement "Template" | Nesquik7 | 0.045 |$ 19.0 |

146| UK DOB SEARCH | datrude... | 0.0317 |$ 13.38 |

147| [Service] UK DOB SEARCH (Date Of Birth) | UKDOCS | 0.0324 |$ 13.69 |

148| 1 National Identity Card Scan Spain | Passpor... | 0.0332 |$ 14.0 |

149| Background Reports, Credit Reports! | sal | 0.0355 |$ 15.0 |

150| HQ DL+SIN+Passport Scan of Canadian cit... | ordapro... | 0.0355 |$ 15.0 |

151| British Gas bill - Create your own temp... | boggalertz | 0.0355 |$ 15.0 |

152| Uk British Gas electricity bill template | dilling... | 0.0355 |$ 15.0 |

153| Super HQ - Utility Bill Template - Unli... | GetVeri... | 0.0355 |$ 15.0 |

154| Water utility bill - Create your own te... | boggalertz | 0.0355 |$ 15.0 |

155| ***CERTIFIED ETHICAL HACKER OFFICIAL CO... | GodsLef... | 0.0355 |$ 15.0 |

156| Slovenian custom utility bill RTV | CardPass | 0.0355 |$ 15.0 |

157| █ █ CVV ssn dob + Background Check & Cr... | fake | 0.0355 |$ 15.0 |

158| HQ DL+SIN+Passport Scan of Canadian cit... | ordapro... | 0.0355 |$ 15.0 |

159| Uk Profile - good for finance | jojo100 | 0.0355 |$ 15.0 |

160| Custom Credit/Debit Physical Card Blanks | Kingscan | 0.0355 |$ 15.0 |

161| HQ DL+Passport scans of Canadian citize... | ordapro... | 0.0355 |$ 15.0 |

162| DL+Passport Scans of Canadian citizen. ... | ordapro... | 0.0355 |$ 15.0 |

163| German proof of Address - deutscher Adr... | DarkLor... | 0.0355 |$ 15.0 |

164| 1 Passport Scan from any Country you N... | Passpor... | 0.0355 |$ 15.0 |

165| HQ DL+SIN+Passport Scan of Canadian cit... | ordapro... | 0.0355 |$ 15.0 |

166| Uk Profile - good for finance | jojo100 | 0.0355 |$ 15.0 |

167| British Gas bill - Create your own temp... | boggalertz | 0.0355 |$ 15.0 |

168| Slovenian custom utility bill RTV | CardPass | 0.0355 |$ 15.0 |

169| Water utility bill - Create your own te... | boggalertz | 0.0355 |$ 15.0 |

170| [Service} UK MOBILE PHONE NUMBER SEARCH... | UKDOCS | 0.0366 |$ 15.47 |

171| 1 Driver Licence Scan of the U.K. | Passpor... | 0.0379 |$ 16.0 |

172| SCAN CNI FR + RIB FR | tutoFrP... | 0.039 |$ 16.47 |

173| 1 Passport Scan of the UK + tenancy agr... | Passpor... | 0.0403 |$ 17.0 |

174| UTILITY BILLS SCAN (USA,UK,FR,IT,DE,ES) | pringle... | 0.0414 |$ 17.5 |

175| Free Electoral Roll Search Tutorial - UK | Data_De... | 0.0426 |$ 18.0 |

176| [SERVICE] UK MMN SEARCH (Mother Maiden ... | UKDOCS | 0.0446 |$ 18.83 |

177| BRITISH GAS UTILITY BILL "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

178| AGL (AUS) Utility Bills "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

179| TALKTALK (UK) Utility Bill "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

180| HM Revenue & Customs TAX CODE UTILITY B... | Nesquik7 | 0.045 |$ 19.0 |

181| Vodafone (AUS) Utility Bills "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

182| Momentum (AUS) Utility Bills "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

183| United Utilities (UK) Utility Bill "Tem... | Nesquik7 | 0.045 |$ 19.0 |

184| Free (FRANCE) Utility Bills "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

185| RSB BANK STATEMENT "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

186| LLOYDS BANK STATEMENT "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

187| BRITISH GAS UTILITY BILL "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

188| Chase Bank Statement "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

189| TALKTALK (UK) Utility Bill "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

190| Entergy Utility Bill "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

191| Orange (FRANCE) Utility Bills "Template... | Nesquik7 | 0.045 |$ 19.0 |

192| Comcast Utility Bill "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

193| EDF (FRANCE) Utility Bills "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

194| Halifax (UK) Bank Statement "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

195| BT GROUP UTILITY BILL "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

196| BT GROUP UTILITY BILL "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

197| NATWEST BANK STATEMENT "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

198| United Utilities (UK) Utility Bill "Tem... | Nesquik7 | 0.045 |$ 19.0 |

199| BRITISH GAS UTILITY BILL "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

200| Direct Energie (FRANCE) Utility Bills "... | Nesquik7 | 0.045 |$ 19.0 |

201| Chase Bank Statement "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

202| Orange (FRANCE) Utility Bills "Template... | Nesquik7 | 0.045 |$ 19.0 |

203| RSB BANK STATEMENT "Template" ⇊ | Nesquik7 | 0.045 |$ 19.0 |

204| Canadian Fullz Credit Registration Etc | casa | 0.0473 |$ 19.99 |

205| Doxing Service | ULTIMATE BARGAIN PRICE... | HighPing | 0.0473 |$ 19.99 |

206| USA passport digital document | LikeApr0 | 0.0474 |$ 20.0 |

207| UK driver license digital document | LikeApr0 | 0.0474 |$ 20.0 |

208| UK Passport info Search | boggalertz | 0.0474 |$ 20.0 |

209| 700+ Credit Score Complete Fullz | HuggleB... | 0.0474 |$ 20.0 |

210| Background Report Service | GetVeri... | 0.0474 |$ 20.0 |

211| Standard US Drivers License Template (B... | motorhe... | 0.0474 |$ 20.0 |

212| 1x CHECKED FULLZ+BANK ACCOUNT from jaho... | jahoda | 0.0474 |$ 20.0 |

213| SPAIN ID CARD digital document DNI | LikeApr0 | 0.0474 |$ 20.0 |

214| Social Security Card Scan - HQ *Sample ... | GetVeri... | 0.0474 |$ 20.0 |

215| USA driver license digital document | LikeApr0 | 0.0474 |$ 20.0 |

216| Exteme ID Template Megapack! ALL 50 US ... | bartock | 0.0474 |$ 20.0 |

217| UK DOB LOOKUP - $20 | ScJ | 0.0474 |$ 20.0 |

218| Custom Social Security Number Card Scan... | Kingscan | 0.0474 |$ 20.0 |

219| custom amount of data | alphabase | 0.0474 |$ 20.0 |

220| PANAMA DRIVER LICENSE digital document | LikeApr0 | 0.0474 |$ 20.0 |

221| Aussie Passport Scans | OzRort | 0.0474 |$ 20.0 |

222| Utility Bill Scan *Sample Link Inside* | GetVeri... | 0.0474 |$ 20.0 |

223| EU Editable Photoshop Template HQ - Rom... | cyberpe... | 0.0474 |$ 20.0 |

224| UK Scan Service - Customized documents ... | boggalertz | 0.0474 |$ 20.0 |

225| Background Report Service | GetVeri... | 0.0474 |$ 20.0 |

226| Utility Bill Scan *Sample Link Inside* | GetVeri... | 0.0474 |$ 20.0 |

227| UK Scan Service - Customized documents ... | boggalertz | 0.0474 |$ 20.0 |

228| 700+ Credit Score Complete Fullz | HuggleB... | 0.0474 |$ 20.0 |

229| California State Drivers License Templa... | motorhe... | 0.0474 |$ 20.0 |

230| USA Documents Credit Cards Bills and Mo... | magneto | 0.0474 |$ 20.0 |

231| SPAIN ID CARD digital document DNI | LikeApr0 | 0.0474 |$ 20.0 |

232| UK DOB search -UK ONLY $20 each With fr... | boggalertz | 0.0474 |$ 20.0 |

233| USA driver license digital document | LikeApr0 | 0.0474 |$ 20.0 |

234| EU Editable Photoshop Template HQ - Rom... | cyberpe... | 0.0474 |$ 20.0 |

235| Social Security Card Template - SSN PSD... | GetVeri... | 0.0592 |$ 25.0 |

236| Aussie Drivers License Scans | OzRort | 0.0474 |$ 20.0 |

237| USA Frontier Communication Utility Bill... | mackay | 0.0485 |$ 20.5 |

238| [OFFICIAL] EQUIFAX/TRANSUNION CANADIAN ... | phackman | 0.0513 |$ 21.66 |

239| photos PHYSICAL CC/ID NEEDED FOR VERIF... | CINABICAB | 0.0534 |$ 22.57 |

240| photos PHYSICAL id/cc NEEDED FOR VERIF... | CINABICAB | 0.0534 |$ 22.57 |

241| 195 photos for fake ID : Man & Woman | FrenchyBoy | 0.0536 |$ 22.64 |

242| BARCLAYS BANK STATEMENT "Template" ⇊ | Nesquik7 | 0.0545 |$ 23.0 |

243| ..::n0unit_Evo::..US fullz x50 | n0units | 0.0592 |$ 25.0 |

244| Arizona DL Scans | mackay | 0.0592 |$ 25.0 |

245| Florida DL Scans | mackay | 0.0592 |$ 25.0 |

246| USA CC Scans | mackay | 0.0592 |$ 25.0 |

247| Massachusetts DL Scans | mackay | 0.0592 |$ 25.0 |

248| Maryland DL scans | mackay | 0.0592 |$ 25.0 |

249| Minnesota DL Scans | mackay | 0.0592 |$ 25.0 |

250| La Caixa Payment Service Notification -... | mackay | 0.0592 |$ 25.0 |

251| Full Credit File/report 1 Star rating F... | boggalertz | 0.0592 |$ 25.0 |

252| [CA] Canadian Pros - Instant Delivery | ramboiler | 0.0592 |$ 25.0 |

253| PREMIUM Ally Bank drops | verdugin | 0.0592 |$ 25.0 |

254| USA DL/SSN scans | mackay | 0.0592 |$ 25.0 |

255| USA Chase Bank CC Statement scans | mackay | 0.0592 |$ 25.0 |

256| Massachusetts DL Scans | mackay | 0.0592 |$ 25.0 |

257| USA DiSH Bill scans - 100% Perfect Qual... | mackay | 0.0592 |$ 25.0 |

258| Iowa DL scans | mackay | 0.0592 |$ 25.0 |

259| Michigan DL Scans | mackay | 0.0592 |$ 25.0 |

260| Florida DL Scans | mackay | 0.0592 |$ 25.0 |

261| Minnesota DL Scans | mackay | 0.0592 |$ 25.0 |

262| 2013 BotNet LogFilez - 1 TXT with varia... | o-c-king | 0.0592 |$ 25.0 |

263| Maryland DL scans | mackay | 0.0592 |$ 25.0 |

264| USA Chase Bank CC Statement scans | mackay | 0.0592 |$ 25.0 |

265| USA DL/SSN scans | mackay | 0.0592 |$ 25.0 |

266| ..::n0unit_Evo::..US fullz x50 | n0units | 0.0592 |$ 25.0 |

267| [CA] Canadian Pros - Instant Delivery | ramboiler | 0.0592 |$ 25.0 |

268| ★ Driving Licence, Passports, Bank Stat... | tescovo... | 0.0592 |$ 25.0 |

269| Indiana DL scans | mackay | 0.0592 |$ 25.0 |

270| richtext - /virgin/ american ssn & *rea... | richtext | 0.0592 |$ 25.0 |

271| Idaho DL Scans | mackay | 0.0592 |$ 25.0 |

272| Utility Bill/Bank Statements Scans | mackay | 0.0592 |$ 25.0 |

273| USA Ally Bank Statement - 100% Perfect ... | mackay | 0.0592 |$ 25.0 |

274| custom listing for vbainf1000 | mackay | 0.0663 |$ 28.0 |

275| Scan personnalisé CNI Carte Identité Fr... | FrenchyBoy | 0.0593 |$ 25.04 |

276| UK ID Pack Templates | dilling... | 0.071 |$ 30.0 |

277| Michigan State Drivers License Template | motorhe... | 0.071 |$ 30.0 |

278| USA Passport Card Scans | mackay | 0.071 |$ 30.0 |