A deep dive into Bitcoin Core v0.15

Greg Maxwell

2017-08-28

video: https://www.youtube.com/watch?v=nSRoEeqYtJA

slides: https://people.xiph.org/~greg/gmaxwell-sf-015-2017.pdf

https://twitter.com/kanzure/status/903872678683082752

git repo: https://github.com/bitcoin/bitcoin

preliminary v0.15 release notes (not finished yet): http://dg0.dtrt.org/en/2017/09/01/release-0.15.0/

Alright let's get started. There are a lot of new faces in the room. I feel like there's an increase in excitement around bitcoin right now. That's great. For those of you who are new to this space, I am going to be talking about some technical details here, so you'll get to drink from the firehose. And if you don't understand something, feel free to ask me questions, or other people in the room after the event, because there are a lot of people here who know a lot about this stuff.

So tonight I'd like to start off by talking about the new cool stuff in Bitcoin Core v0.15 which is just about to be released. And then I am going to open up for open-format question and answer and maybe we'll have some interesting discussion.

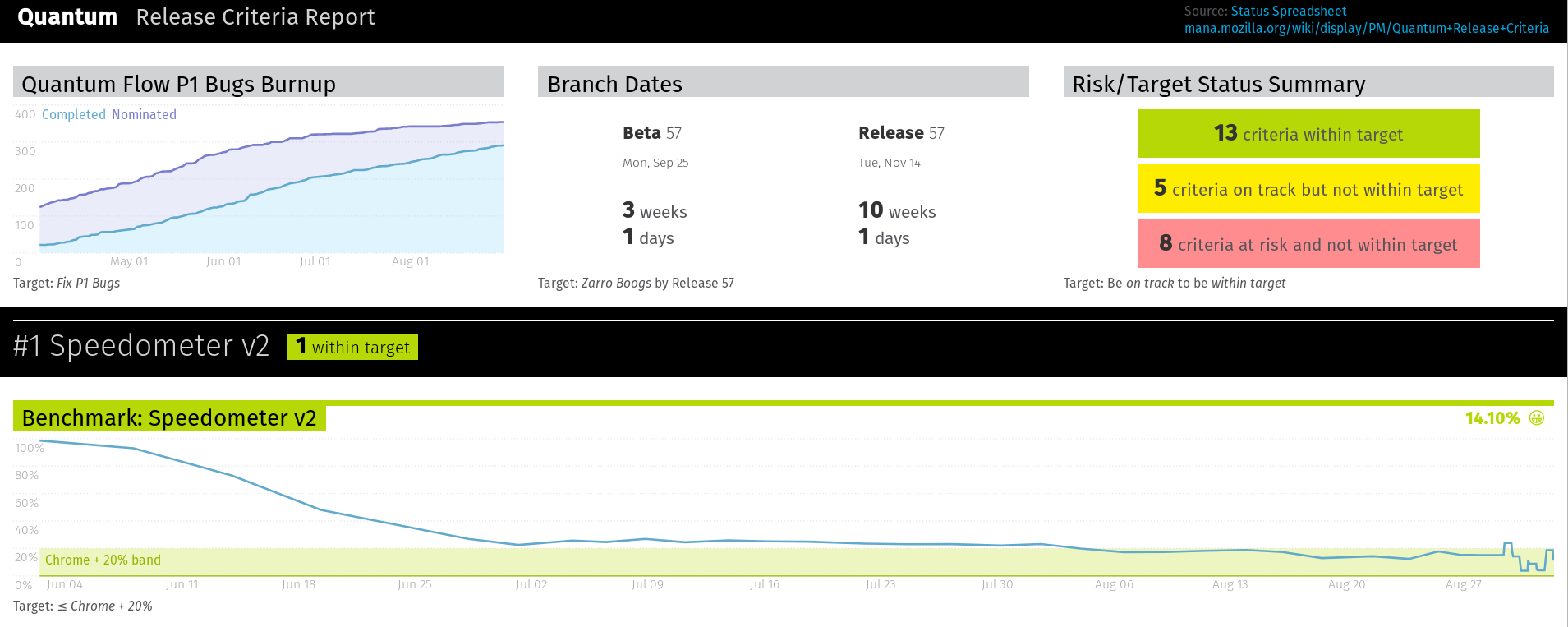

First thing I want to talk about for v0.15 is a breakdown by the numbers. What kind of activities go into bitcoin development these days? I am going to talk about the major themes and major improvements, things about performance, wallet features, and talk a little bit about this service bit disconnection thing, which is a really minor and obscure thing but it created some press and I think there's a lot of misunderstanding about it. And then I am going to talk about some of the future work that is not in v0.15 that will be in future versions that I think is interesting.

Quick refresher on how Bitcoin Core releases work. So there's this master trunk branch of bitcoin development. Releases are branched off of it, and then new fixes that go into those releases are written into the master branch and then copied into the release branches. This is a pretty common process for software development. What this means is that a lot of the features that are in v0.15 are also in v0.14.2 and v0.14, because v0.15 development started with the release of v0.14.0, not with the release of v0.14.2. So back in February this year, v0.14 branched off, and in March v0.14.0 was released. In August, v0.15 branched off, and we had two release candidates, and there's a third that should be up tonight or tomorrow morning, and we're expecting the full release of v0.15 to be around the 14th or 15th, and we expect it to be delayed due to developers flying around and not having access to their cryptographic keys. Our original target date was September 1st, so a two week slip there, unfortunate but not a big deal.

Just some raw numbers. What kind of levels of activities are going into bitcoin development these days? Well, in the last 185 days, which is the development of v0.15, there were 627 pull requests merged on github. So these are individual high-level changes. Those requests contained 1,081 non-merge commits by 95 authors, which comes out to 6 commits per day which is pretty interesting compared to other cryptocurrency projects. One of the interesting things about v0.15 is that 20% of the commits are test-related, so they were adding or updating tests. And a lot of those actually came from jnewbery, a relatively new contributor who works at Chaincode Labs. His first commit was back in November 2016 and he was responsible for half of all the test-related commits and he was also the top committer. And then it falls down from there, lots of other people, a lot of contributions, and then a broad swath of everyone else. The total number of lines changed was rather large, 52k lines inserted. This was actually mostly introduction of new tests. That's pretty good, we have a large backlog of things that are under-tested, for our standards... there's some humor there because Bitcoin Core is already relatively well-tested compared to most software. All of this activity results in about 3k lines changed per week to review. For someone like me, who does most of his contributions in the form of review, there's a lot of activity to keep up with.

So in v0.15, we had a couple of big areas of focus. As always, but especially in v0.15, we had a big push on performance. One of the reasons for this is that the bitcoin blockchain is growing, and just keeping up with that growth requires the software to get faster. But also, with segwit coming online, we knew that the blockchain would be growing at an even faster rate, so there was a desire to try to squeeze out all the performance we could, to make up for that increase in capacity.

There were some areas that were polished and problem areas that were made a little more reliable. And there were some areas that we didn't work on-- such as new consensus rules, we've been waiting to see how segwit shakes out, so there hasn't been big focus on new consensus rules. There were a bunch of wallet features that we realized that were easier with segwit, so we held off on implementing those. There are some things that were held back, waiting for segwit, and now they can move forward at a pretty good speed.

One of the important performance improvements is that we have completely reworked the chainstate database in Bitcoin Core. The chainstate database is what stores the information that is required to validate new blocks as they come in. This is also called the UTXO set. The current structure we've been using has been around since v0.8, and when it was introduced, that disk structure, which has a separate database of just the information required to validate blocks, was something like a 40x performance improvement. That was an enormous performance improvement from what we had. So this new update is improving this further.

The previous structure we often talk about as a per-output database. So this is a UTXO database. People think of it as tracking every output from every transaction as a separate coin. But that's not actually how it was implemented on the backend. The backend system previously batched up all outputs for a single transaction into a single record in the database and stored those together. Back at the time of v0.8, that was a much better way of doing it. The usage patterns and the load on the bitcoin network have changed since then, though. The batching saved space because it shared some of the information, like the height of a transaction, whether or not it's a coinbase transaction, and the txid, among all the outputs. One of several problems with this is that when you spend the outputs, you have to read them all and write them back, and this creates a quasi-quadratic blow-up where a lot more work is required to modify transactions with many outputs. It also made the software a lot more complicated. A pure UTXO database only has to create outputs and delete them when you spend, but the batched form had to support modifications, such as spending some of the outputs and saving the rest back. This whole process resulted in considerable memory overhead.

We have changed to a new structure where we store one record per txout. This results in a 40% faster sync, so it's a big performance improvement. This is 10% less memory for the same number of cache entries, so you can have larger caches but same amount of memory on your system, or you can run Bitcoin Core on a machine with less memory than you could have before. This 40% was given on a host with very fast disks, but if you're going to run the software on a computer with slow media such as spinning disks or USB or something like that, then I would expect to see faster results even beyond 40% but I haven't benchmarked.

There's a downside, though, which is that it makes the database larger on disk: about a 15% increase in the size of the chainstate directory, which is now 2.8 GB. But this isn't really a big deal.

Here's a visualization of the trade. In the old scheme you had this add, modify, modify, delete operation sequence. So you had three outputs, one gets spent, another spent, and then you delete the rest. The new way just adds each output and deletes it when it's spent, which is what people thought it was doing all along. The old structure has been copied into other alternative implementations, so btcd, bcoin, these other implementations copied the same structure from Bitcoin Core and they all work like the original v0.8 method.
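To make the operation sequences concrete, here is a minimal sketch that counts database operations under the two layouts for one transaction with three outputs. The class names and record formats are hypothetical and much simpler than Bitcoin Core's actual on-disk encoding; the sketch only illustrates the add/modify/delete pattern described above.

```python
# Hypothetical sketch: record formats and names are illustrative only,
# not Bitcoin Core's real chainstate encoding.

class PerTxStore:
    """Old layout: one record per transaction holding all unspent outputs."""

    def __init__(self):
        self.db = {}   # txid -> {output index: value}
        self.ops = []  # log of database operations performed

    def add_tx(self, txid, values):
        self.db[txid] = dict(enumerate(values))
        self.ops.append("add")

    def spend(self, txid, index):
        record = self.db[txid]      # read the whole record
        del record[index]
        if record:
            self.db[txid] = record  # write the remaining outputs back
            self.ops.append("modify")
        else:
            del self.db[txid]       # last output spent: drop the record
            self.ops.append("delete")


class PerOutputStore:
    """New layout: one record per output, keyed by (txid, index)."""

    def __init__(self):
        self.db = {}
        self.ops = []

    def add_tx(self, txid, values):
        for index, value in enumerate(values):
            self.db[(txid, index)] = value
            self.ops.append("add")

    def spend(self, txid, index):
        del self.db[(txid, index)]
        self.ops.append("delete")


old, new = PerTxStore(), PerOutputStore()
for store in (old, new):
    store.add_tx("tx1", [50, 20, 30])
    for i in range(3):
        store.spend("tx1", i)

print(old.ops)  # ['add', 'modify', 'modify', 'delete']
print(new.ops)  # ['add', 'add', 'add', 'delete', 'delete', 'delete']
```

The per-output layout performs more individual operations, but each one touches a single small record, and nothing is ever modified in place.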

This is a database format change, so there's a migration process: when you start v0.15 on an older host, it will migrate to the new format. On a fast system this takes 2-3 minutes, but on a raspberry pi this is going to take a few hours. Once you run v0.15 on a node, in order to go back to an older version of bitcoind, you will have to do a chainstate reindex and that will take you hours. There's a corner case where if you ran Bitcoin Core master in the couple-week period from when we introduced this until we improved it a bit, your chainstate directory may have become 5.8 GB in size, so double size, and that's because leveldb, the underlying backend database, was not particularly aggressive about removing old records. There's a hidden forcecompactdb option that you can run as a one-time operation that will shrink the database back to its normal size. So if you see that you have a 5.8 GB chainstate directory, run that command and get it back to what it should be.

The graph at the bottom here is showing the improvements from this visually, and it's a little strange to read. The x-axis is progress in terms of what percent of the blockchain was synced. And on the first graph, the y-axis is showing how much time has passed. This purple line at the top is the older code without the improvement, but if you look early on in the history, the difference isn't as large. The lower graph here is showing the amount of data stored in the cache at a point in time. So you see the data increasing, then you see it flush the cache to disk and it goes to nothing, and so on. If you see the purple line there, it's flushing very often as it gets further along in synchronization, and it's flushing frequently over here so that it doesn't have to do as much I/O.

Since this change is really a change at the heart of the consensus algorithm in bitcoin, we had some very difficult challenges related to testing it. We wouldn't want to deploy a change that would cause it to corrupt or lose records, because that would cause a network split or network partition. So this is a major consensus-critical part of the system. This redo actually had a couple of months of history before it was made public, where Pieter Wuille was working on it in private, trying a couple of different designs. Some of his work was driven by his work on the next feature that I am going to talk about in a minute. But once it was made public, it had 2 months of public review; there were at least 145 comments on it, people going through and reviewing the software line by line, asking questions, getting comments improved and things like that. This is an area of the software where we already had pretty good automated testing. We also added some new automated tests. We also used a technique called mutation testing, where basically we took the updated copy of bitcoin and its new tests, and we went line by line through the software, and everywhere we saw a location where a bug could have occurred-- such as every if-branch that could have been inverted, or every zero that could have been a one, and every add that could be a subtract-- we made each bug in turn, and ran the tests, and verified that every change to the software would make the tests fail. So this process didn't turn up any bugs in the new database, hooray, but it did turn up a pre-existing non-exploitable crash bug and some shortcomings in the tests, where some tests thought they were testing one aspect but it turns out they were testing nothing. And that's a thing that happens, because often people don't test the tests. You can think of mutation testing this way: normally the test is the test of the software, but what's the test of the test? Well, the software is the test of the test, you just have to break the software to see the results.
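As a toy illustration of the mutation testing idea (not the tooling or the code that was actually mutated in Bitcoin Core), the sketch below builds a small function from swappable primitives, injects one deliberate bug at a time, and checks which mutants a test suite fails to catch. All names here are hypothetical.

```python
import operator

def make_apply_payment(add=operator.add, less_than=operator.lt):
    """Build a toy function from swappable primitives so that a mutant can be
    produced by replacing exactly one primitive."""
    def apply_payment(balance, amount, fee):
        total = add(amount, fee)
        if less_than(balance, total):
            raise ValueError("insufficient funds")
        return balance - total
    return apply_payment

def strong_tests(fn):
    """Return True if fn passes; includes the exact-balance boundary case."""
    try:
        if fn(100, 30, 5) != 65:
            return False
        if fn(35, 30, 5) != 0:   # boundary: balance exactly covers the total
            return False
    except ValueError:
        return False
    try:
        fn(34, 30, 5)            # one unit short must be rejected
    except ValueError:
        return True
    return False

def weak_tests(fn):
    """A suite that looks reasonable but never exercises the boundary."""
    try:
        return fn(100, 30, 5) == 65
    except ValueError:
        return False

# Each mutant is the original function with one deliberate bug.
mutants = {
    "add -> sub": make_apply_payment(add=operator.sub),
    "< -> >": make_apply_payment(less_than=operator.gt),
    "< -> <=": make_apply_payment(less_than=operator.le),
}

original_passes = strong_tests(make_apply_payment()) and weak_tests(make_apply_payment())
survivors_strong = [name for name, fn in mutants.items() if strong_tests(fn)]
survivors_weak = [name for name, fn in mutants.items() if weak_tests(fn)]

print(survivors_strong)  # []
print(survivors_weak)    # ['< -> <=']
```

A surviving mutant points at a gap in the tests rather than in the software, which is the "test of the test" described above.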

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=13m40s

A feature related to the new chainstate database which we also did shortly after is non-atomic flushing. This database cache in bitcoin software is really more of a buffer than a cache. The thing it does that improves performance isn't that it saves you from having to read things off disk-- reading things off-disk is relatively fast. But what it does is that it prevents things from being written to disk such as when someone makes a transaction and then two blocks later they spend the output of that transaction, with a buffer you can avoid ever taking that data and writing it to the database. So that's where almost all of the performance improvement from 'caching' really comes from in bitcoin. It's this buffer operation. So that's great. And it works pretty well.

One of the problems with it is that in order to make the system robust to crashes, the state on the disk always has to be consistent with a particular block so that if you were to yank the power out of your machine and your cache is gone, since it was just in memory, you want the node to be able to come up and continue validation from a particular block instead of having partial data on disk. It doesn't have to be the latest block, it just has to be some exact block. So the way that this was built in Bitcoin Core was that whenever the cache would fill up, we would force-flush all the dirty entries to disk all at once, and then we could be sure that it was consistent with that point in time. In order to do that, we would use a database transaction, and the database transaction required creating another full copy of the data that was going to be flushed. So this extra operation meant that basically we lost half of the memory that we could tolerate in our cache to this really short, 100 ms long memory peak. In older versions like v0.14, if you configure a database cache of say a gigabyte, then we really use 500 MB for cache and 500 MB is left unused to handle this little peak that occurs during flushing. We're also doing some work with smarter cache management strategies, where we incrementally flush things, but they interact poorly with the whole "flush at once" concept.

But we realized that the blockchain itself is actually a write-ahead log. It's the exact thing you need for a database to not have to worry about what order your writes were given to the disk. With this in mind, our UTXO database doesn't actually need to be consistent. What we can do instead is that we store in the database the earliest point at which writes could have occurred, such as the earliest blockheight for which there were writes in flight, and the latest, and then on startup we simply go through the blockchain on disk and re-apply all of the changes. Now, this was much harder when the database could have entries that were modified, but after changing to the per-TXO model, every entry is either inserted or deleted. Inserting a record twice just does nothing, and deleting a record twice also does nothing. So the software to do this is quite simple, it starts up, sees where it could have had writes in flight, and then follows them all out.
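The replay idea can be sketched in a few lines. This is a simplified model under stated assumptions: blocks are plain Python lists of transactions, there are no reorgs, and the names `apply_block` and `recover` are made up for the illustration. It shows why idempotent inserts and deletes make it safe to re-apply a range of blocks over a partially written database.

```python
# Simplified model (assumptions: no reorgs, blocks are plain dicts/lists,
# function names are hypothetical and not Bitcoin Core's).

def apply_block(utxos, block):
    """Apply one block to a per-output UTXO set, transaction by transaction."""
    for tx in block:
        for outpoint in tx["spends"]:
            utxos.pop(outpoint, None)   # deleting a missing record does nothing
        for outpoint, value in tx["creates"].items():
            utxos[outpoint] = value     # re-inserting the same record does nothing

def recover(disk_utxos, chain, first_height, last_height):
    """Replay every block in the range that may have had writes in flight."""
    for height in range(first_height, last_height + 1):
        apply_block(disk_utxos, chain[height])
    return disk_utxos

chain = [
    [{"spends": [], "creates": {("a", 0): 50}}],
    [{"spends": [("a", 0)], "creates": {("b", 0): 20, ("b", 1): 30}}],
    [{"spends": [("b", 0)], "creates": {("c", 0): 20}}],
]

# The state a clean node would have after all three blocks.
expected = {}
for block in chain:
    apply_block(expected, block)

# A crashed node: consistent as of block 0, plus an arbitrary subset of the
# writes from blocks 1 and 2 (two inserts landed, the deletes did not).
crashed = {("a", 0): 50, ("b", 1): 30, ("c", 0): 20}

recovered = recover(dict(crashed), chain, first_height=1, last_height=2)
print(recovered == expected)  # True
```

Replaying the same range a second time leaves the result unchanged, which is the property that removes the need for an atomic database transaction.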

So this really simplifies a lot of things. It also gives us more flexibility on how we manage that database in the future. So it would be much easier to switch to a custom data structure or do other fancy things with it. It also allows us to do fancier cache management and lower latency flushing in the future, like we could in the future incrementally flush every block to avoid the latency spikes from doing a full flush all at once. That's something we've experimented with, we just haven't proposed it yet. But it's now easy to do with this change. Also, we've benchmarked all the trendy database things that you might use in place of leveldb in this system, and so far we've not found anything that performs significantly better, but if in the future we found something that performs significantly better, we wouldn't have to worry about supporting transactions anymore.

Also in v0.15, we did a bit of platform-specific acceleration work. And this is stuff that we knew we could do for a long time, but it didn't rise to the top of the performance priority list. So, we now use SSE4 assembly implementation of sha256. And that results in a 5% speedup of initial block download, and a similar speedup for connecting a new block at the tip, maybe more like 10% there.

In v0.15, it's not enabled in the build by default because we introduced this just a couple of days before the feature freeze and then spent 3 days trying to figure out why it was crashing on macosx, and it turns out that the macosx linker was optimizing out the entire chunk of assembly code, because of an error we made in the capitalization of the label. So... this took like 3 days to fix, and we didn't feel like merging it in and then having v0.15 release delayed by potentially us finding out later that it doesn't work on openbsd or something obscure or some old osx. But it will be enabled in the next releases.

We also implemented support for the SHA-native instructions. This is a new instruction set for sha hardware acceleration that Intel has announced. They announced it but they really haven't put it into many of their products, it's supported in like one Atom CPU. The new AMD Ryzen stuff contains this instruction, though, and the use of it gives another 10% speedup in initial block download for those AMD systems.

The backend database uses CRC32 to detect errors in the database files, and we made that use the SSE4 instruction too.

Another one of the big performance improvements in v0.15 was script validation caching. Bitcoin has had, since v0.7, a cache that basically memoizes every (public key, message, signature) tuple and allows you to validate those much faster if they are in the cache. This change was actually the last change to the bitcoin software by Satoshi; he had written the patch and sent it to Gavin and it just sat languishing in his mailbox for about a year. There are some DoS attacks that can be solved by having a cache. The DoS attack is basically where you have a transaction with 10,000 valid signatures in it, and then the 10,001st signature in the transaction is invalid, and you will spend all this time validating each of the signatures, to get down to the last one and find out that it's invalid, and then the peer disconnects and sends you the same transaction but with a different invalid signature at the end, and you do it again... So the signature cache was introduced to fix that, but it also makes validation at the tip much faster. But even though that's in place, all it's caching is the elliptic curve operations; it's not caching the validation of the whole script. And this is a big deal for signature hashing. For transactions to be signed, you have to hash the transaction to determine what it's signing, and for large non-segwit transactions, that can take a long time.

One question that has come up since 2012 is, well, why not use the fact that the transaction is in the mempool as a proxy for whether it's valid? If it's in your mempool, then it's already valid, you already validated it, go ahead and accept it. Well, the problem with this is that the rules for a transaction going into a mempool are not the same as the rules for a transaction going into a block. They are supposed to be a subset, but it's easy for software errors to turn it into a superset. There have been bugs in the mempool handling in the past that have resulted in invalid transactions appearing in the mempool. Because of how the rest of the software is structured, that error is completely harmless except for wasting a little memory. But if you were using the mempool for validation, then having an invalid transaction in the mempool would immediately become a consensus splitting (network partition) bug. Using the mempool would massively increase the portion of the codebase that is consensus-critical, and nobody working on this project is really interested in using the mempool for doing that.

So what we've introduced in v0.15 is a separate cache for script validation. It maintains a cache where the key is the txid together with the validation flags for which rules are applicable for that tx. Since all the validity rules other than sequence number and blocktime are a pure function of the hash of the transaction, that's all it has to cache. For segwit that's the wtxid, not just the txid. The presence of this cache creates a 50% speedup of accepting blocks at the tip, so when a new block comes into a node.
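A minimal sketch of a cache keyed on (wtxid, validation flags) follows. It assumes that only successful validations are stored, and it uses a stand-in `expensive_script_check` function; the class name, the key derivation, and the flag values are all hypothetical and do not reflect Bitcoin Core's actual implementation.

```python
import hashlib

class ScriptExecutionCache:
    """Remembers (wtxid, flags) pairs whose scripts already validated.
    Hypothetical sketch; not Bitcoin Core's data structure."""

    def __init__(self):
        self._entries = set()

    @staticmethod
    def _key(wtxid: bytes, flags: int) -> bytes:
        # The same transaction under different rule flags is a different entry.
        return hashlib.sha256(wtxid + flags.to_bytes(4, "little")).digest()

    def contains(self, wtxid: bytes, flags: int) -> bool:
        return self._key(wtxid, flags) in self._entries

    def add(self, wtxid: bytes, flags: int) -> None:
        self._entries.add(self._key(wtxid, flags))


expensive_calls = 0

def expensive_script_check(tx_bytes: bytes, flags: int) -> bool:
    """Stand-in for full script and signature validation (assumption)."""
    global expensive_calls
    expensive_calls += 1
    return not tx_bytes.endswith(b"invalid")

def check_scripts(tx_bytes: bytes, flags: int, cache: ScriptExecutionCache) -> bool:
    # Double SHA-256 of the full serialization stands in for the wtxid here.
    wtxid = hashlib.sha256(hashlib.sha256(tx_bytes).digest()).digest()
    if cache.contains(wtxid, flags):
        return True                      # validated earlier under these flags
    ok = expensive_script_check(tx_bytes, flags)
    if ok:
        cache.add(wtxid, flags)          # assumption: only successes are cached
    return ok


cache = ScriptExecutionCache()
first = check_scripts(b"tx-one", 0b01, cache)         # miss: runs the check
second = check_scripts(b"tx-one", 0b01, cache)        # hit: no extra work
other_flags = check_scripts(b"tx-one", 0b11, cache)   # different rules: miss
bad_once = check_scripts(b"tx-two-invalid", 0b01, cache)
bad_twice = check_scripts(b"tx-two-invalid", 0b01, cache)
print(expensive_calls)  # 4
```

Because the key covers only the transaction hash and the flags, the cache is independent of mempool contents, so a mempool bug cannot turn into a validity decision.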

There's a ton of other speedups in v0.15. A couple that I wanted to highlight are on this slide. DisconnectTip which is the operation central to reorgs, so to unplug a block from the chain and undo it, that was made much faster, something on the order of 10s of times faster, especially if you are doing a reorg of many blocks, mostly by deferring mempool processing. Previously, you would disconnect the block, take all the transactions out, put it in your mempool, disconnect another block and put the transactions into the mempool, and so on, and we changed this so that it does all of this in a batch instead. We disconnect the block, throw the transactions into a buffer, leave the mempool alone until you're done.

We added a cache for compact block messages. So when you're relaying compact block messages to different peers, we cache the constructed message rather than reconstructing it for each peer.

The key generation in the wallet was made on the order of 20x faster by not flushing the database between every key that it inserted.

And there were some minor little secp256k1 speedups on the order of 1%. But 1% is worth mentioning here because 1% speedups in the underlying crypto library are very hard to find. A 1% speedup is at least weeks of work.

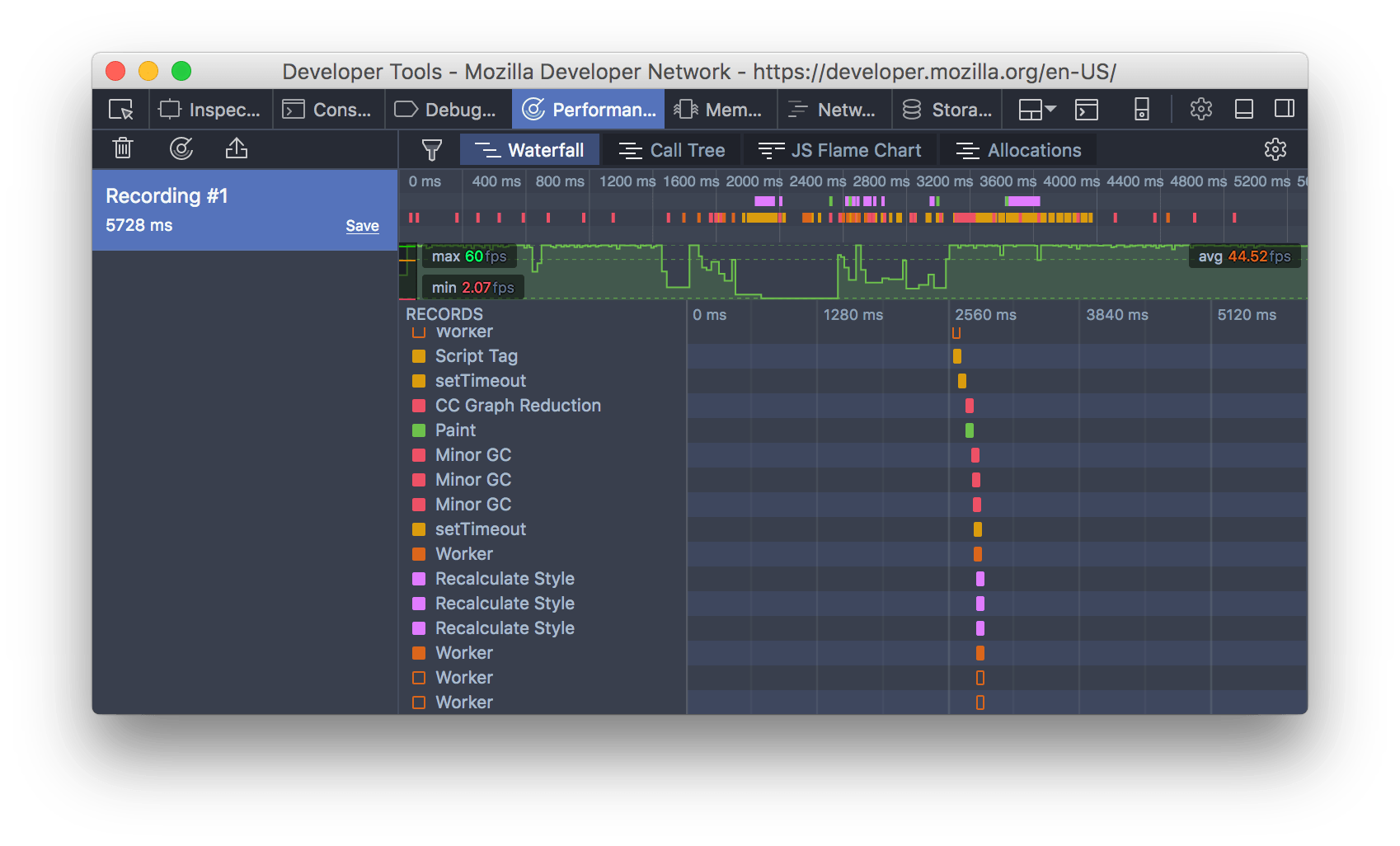

As a result, here is an initial block download (IBD) chart. The top bar is v0.14.2, and the bottom bar is v0.15 rc3. And this is showing the number of seconds for initial sync across the network. In this configuration, I've set the dbcache size to effectively infinite, like 60 gigs. So the dbcache never fills on either of the hosts I was testing on. I tested with a dbcache size of infinite for two reasons: one is that if you have decent hardware, then that's the configuration you want to run while syncing anyway, and the second is that with a normal size dbcache, which is sized to fit on a host with 1 GB of RAM, the benchmarks were taking so long that they weren't ready in time for my presentation tonight; they will finish sometime around tonight. The two segments in the bars are showing how long it took to sync to a point about 2 weeks ago, the outer part, and the inner part is how long it takes to sync to the point where v0.14 was released. And so what you can see in the lower bar is that it's considerably faster, almost a 50% speedup there. It's actually taking about the same amount of time as v0.14 took to sync, so all of these speedups basically got us back to where we were at the beginning of the year in terms of aggregate total sync time, just due to the underlying blockchain growth. And these numbers are in seconds, that's probably not clear, but the shorter bar runs out to 3 hours, and that's on a machine with 64 GB RAM and 24 cores.

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=25m50s

Out of the realm of performance for a while.... In v0.15, a long-requested feature that people have been asking about since 2011 is multiwallet support. Right now it's a CLI and RPC-only feature. This allows Bitcoin Core to have multiple wallets loaded at once. It's not released in the GUI yet, it will be in the next release. It's easier to test in the CLI first and most of the developers are using the CLI. You configure with a wallet conf argument for each wallet you want, and you tell the CLI which wallet you want to talk with, or if you're using RPC then you change the URL to have /wallet/ followed by the name of the wallet you want to use. This is very useful if you have a pruned node and you want to keep many wallets in sync and not have to rescan them, because you can't rescan them on a pruned node.
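As a rough sketch of the RPC side, the snippet below builds (but does not send) a JSON-RPC request aimed at a specific wallet by putting the wallet name in the URL path. It assumes the node was started with one `-wallet=<file>` argument per wallet and listens on the default mainnet RPC port 8332; the wallet file name `savings.dat` and the helper name are made up for the example, and authentication is left out. On the command line, the equivalent selection is believed to be `bitcoin-cli -rpcwallet=<file>`, but the interface was flagged as unstable in this release, so check the release notes.

```python
import json
from urllib.parse import quote

def build_wallet_rpc_call(wallet_name, method, params=None,
                          host="127.0.0.1", port=8332):
    """Return (url, body) for a JSON-RPC call routed to one loaded wallet.
    Hypothetical helper; authentication and sending are omitted."""
    url = f"http://{host}:{port}/wallet/{quote(wallet_name, safe='')}"
    body = json.dumps({
        "jsonrpc": "1.0",
        "id": "example",
        "method": method,
        "params": params or [],
    })
    return url, body

url, body = build_wallet_rpc_call("savings.dat", "getbalance")
print(url)   # http://127.0.0.1:8332/wallet/savings.dat
```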

For the moment, this should be considered somewhat experimental. The main delay with finally getting this into v0.15 was debate over the interface; we weren't sure what interface we should be providing for it. In the release notes, we explicitly mention that the interface is not stable, and we didn't want to delay on this. We've been working on this since v0.12, doing the backend restructuring, and what landed in v0.15 was just the UI component.

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=27m30s

v0.15 features greatly improved fee estimation software. It tracks the... it starts with the original model we had in v0.14, but it tracks estimates on multiple time horizons, so that it can be more responsive to fast changes. It supports two estimation modes, conservative and economical. The economical mode responds much faster. The conservative mode just asks what's the fee that basically guarantees the transaction will be confirmed based on history. And the economical is "eh whatever's most likely". For bip125 replaceable transactions, if you underpay on it, it's fixable, but if your transaction is not replaceable then we default to using the conservative estimator.

We also made the fee estimator machinery output more data, which will help us improve it in the future, but it's also being used by external estimators, where other people have built fee estimation frameworks that use Bitcoin Core's estimator as their input. And this new fee estimator can produce fee estimates for much longer ranges of time; it can estimate for targets up to 1008 blocks out, so about a week, so if you say that you don't care if it takes a long time to confirm then it can still produce useful estimates for that. Also it has fixed some corner cases where estimates were just nuts before.

The fundamental behavior hasn't changed. The fee estimator in bitcoin has this difficult challenge that it needs to safely operate unsupervised, which means that someone could be attacking your node and the fee estimator should not give a result where you pay high fees just because someone is attacking you. And we need to assume that the user doesn't know what high fees are... so this takes away from some of the obvious things we might do to make the fee estimator better. For example, the current fee estimator does not look at the current queue of transactions in the mempool that are ready to go into a block. It doesn't bid against the mempool, and the reason why it doesn't do this is because someone could send transactions that they know the miners are censoring, and they could pay very high fees on those transactions and cause you to outbid those transactions that will never get confirmed.

There are many ways that this could be improved in the future, such as using the mempool information but only to lower the fees that you pay. But that isn't done yet. Onwards and upwards.

Separate from fee estimation, there are a number of fixes in the wallet related to fee handling. One of the big ones that I think a lot of people care about is that in the GUI there is support for turning on replaceability for transactions. You can make a transaction, and if you didn't add enough fee and you regret it then you can now hit a button to increase the fee on the bip125 replaceable transaction. This was in the prior release as an RPC-only feature, it's now available in the GUI. With segwit coming into play, this feature will get much more powerful. There are really cool bumpfee things that you can't do without segwit.

There were some corner cases where the automatic coin selection could result in fee overpayment. Basically, whenever the bitcoin wallet makes a transaction, it has to solve a pretty complicated problem where it has to figure out which of the coins to spend and then how much fee to pay. It doesn't know how much fee it needs to pay until it knows which coins it wants to spend, and it doesn't know which coins it wants to spend until it knows the total amount of the transaction, including the fee. So there's an iterative algorithm that tries to solve this problem. There could be, in some wallets with lots of tiny inputs, cases where the wallet would in the first pass select a lot of tiny coins for spending, and then it goes oh, we don't have enough fees for all these coins so I need to select some more value-- and it would go and select some more, but it's not monotone, and it might go spend some 50 BTC input, and my fees are still high because that's where I ended up in the last iteration, and it would give up, and it would potentially overpay fees in that case. If your transaction had a change output, then it would pay those extra fees to change, but in the case where there wasn't a change output, it would overpay, and v0.15 fixes this case.

v0.15 also makes the wallet smarter about not producing change outputs when they wouldn't really be useful. So, it doesn't make sense to make a change output for only 1 satoshi because the cost of spending that change is going to be greater than what it is worth. The wallet for a long time has avoided creating low value ones. It now has a better rational framework for this, and there's now a configuration option called discardfee where you can make it more or less aggressive. But basically it looks at the long term fee estimations to figure out what kind of output value is going to be completely worthless in the future, and it avoids creating smaller ones.
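The decision can be sketched as a comparison between the change value and the expected cost of spending it later. This is a deliberately simplified model, not the wallet's actual rule: the function name is hypothetical, the 148-byte figure is a typical size for a legacy input used here only as an illustrative default, and the feerate argument stands in for whatever long-term estimate or discardfee setting applies.

```python
def should_create_change(change_sat, discard_feerate_sat_per_byte,
                         spend_size_bytes=148):
    """Return True if a change output of change_sat is worth creating.

    Simplified assumption: change is worthless if spending it later, at the
    given long-term feerate, would cost at least as much as it is worth.
    """
    cost_to_spend = discard_feerate_sat_per_byte * spend_size_bytes
    return change_sat > cost_to_spend

# At 10 sat/byte, spending the change later costs about 1480 satoshi.
print(should_create_change(1, 10))        # False: add it to the fee instead
print(should_create_change(100_000, 10))  # True: worth keeping as change
```

Raising the feerate makes the wallet more aggressive about discarding small change; lowering it makes the wallet keep smaller outputs.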

In v0.13, Bitcoin Core introduced hdwallet support. This is being able to create a wallet once and deterministically generate all of its future keys so that you have less need to back up the wallet, so that if you fail to regularly back up your wallet you would be less likely to lose your funds. The rescan support in prior versions was effectively broken... you could take a backed-up hdwallet, put it on a new node, and it wouldn't notice when all of the keys that had been pre-generated had been used, and it wouldn't top up the keypool... So this made recovery unreliable. This is fixed in v0.15, where it now does a correct rescan including auto top-up. We also increased the size of the default keypool to 1000 keys so that it looks further ahead. Whenever it sees a spend in its wallet for a key, it will advance to a thousand keys past that point.
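The look-ahead behaviour during a rescan can be sketched as follows. The key derivation here is an HMAC stand-in, not BIP32, and the class and method names are hypothetical; a look-ahead of 5 is used instead of 1000 to keep the example small.

```python
import hashlib
import hmac

def derive_key(seed: bytes, index: int) -> str:
    """Stand-in for deterministic key derivation (NOT BIP32)."""
    return hmac.new(seed, index.to_bytes(4, "big"), hashlib.sha256).hexdigest()

class Keypool:
    """Watches a window of pre-generated keys and tops it up when one is used."""

    def __init__(self, seed: bytes, lookahead: int):
        self.seed = seed
        self.lookahead = lookahead
        self.index_of = {}       # key -> derivation index, for every watched key
        self.next_index = 0      # next index to derive
        self.highest_used = -1   # highest index seen on chain so far
        self._top_up()

    def _top_up(self):
        # Keep `lookahead` unused keys beyond the highest key seen in use.
        target = self.highest_used + 1 + self.lookahead
        while self.next_index < target:
            key = derive_key(self.seed, self.next_index)
            self.index_of[key] = self.next_index
            self.next_index += 1

    def saw_key_on_chain(self, key: str) -> bool:
        index = self.index_of.get(key)
        if index is None:
            return False         # beyond the window: the wallet cannot see it
        if index > self.highest_used:
            self.highest_used = index
            self._top_up()       # advance the window past the used key
        return True

seed = b"example seed"
restored = Keypool(seed, lookahead=5)

# The original wallet used keys 0..11; the rescan meets them in order.
found = sum(restored.saw_key_on_chain(derive_key(seed, i)) for i in range(12))
print(found)                 # 12
print(restored.next_index)   # 17

# A gap larger than the look-ahead is still missed.
fresh = Keypool(seed, lookahead=5)
print(fresh.saw_key_on_chain(derive_key(seed, 7)))  # False
```

Without the top-up step, the restored wallet would only ever recognize the first five keys, which is the failure mode described for earlier versions.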

This isn't quite completely finished in v0.15 because we don't handle the case where the wallet is encrypted. If the wallet is encrypted, then you need to manually unlock the wallet and trigger a rescan, if you're restoring from a backup-- it doesn't do that, yet, but in future versions we'll make this work or do something else.

And finally, and this was an oft-requested feature, there is now an abortrescan RPC command. A rescan takes hours, and the node is useless for a while, so there's a command to stop the rescan.

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=34m18s

More of the backend minutia of the system... we've been working on improving the random number generator. This is part of a long-term effort to completely eliminate any use of openssl in the bitcoin software. Right now all that remains of openssl use in the software is the random number generator, and bitcoin-qt has bitcoin payment protocol support, which uses https, which uses Qt, which uses openssl.

Most of the random number use in the software package has been replaced with the ChaCha20 stream cipher, including all the cases where we weren't using a cryptographic random number generator, like unix rand-style random number generators that needed to be somewhat random but not secure; those all use a cryptographic RNG now because it was hardly any slower to do so.

One of the things that we're concerned about in this design is that there have been, in recent years, issues with operating systems RNGs-- both freebsd and netbsd have shipped versions where the kernel gave numbers that were not random. And also, on systems running inside of virtual machines, there are issues where you store a snapshot of the VM and then restore it and then the system regenerates the same "random" numbers that it generated before, which can be problematic.

That was a version for a famous bug in 2013 that caused bitcoinj to... so..

We prefer a really conservative design. Right now what we're doing in v0.15 is that any of the long-term keys are generated by using the OS random number generator, the openssl random number generator, and a state that we ... through... And in the future we will replace the openssl component with a separate initializer.

Q: What are you using for entropy source?

A: The entropy on that is whatever openssl does, /dev/urandom, rdrand, and then state that is queried through repeated runs of this algorithm.
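The general idea of combining several sources, so that the output stays unpredictable even if one source misbehaves, can be sketched as follows. This is only an illustration of the principle and does not reconstruct the details elided above: the class name `MixedRng`, the choice of SHA-512, and the use of a timer reading as a weak extra input are all assumptions, and `os.urandom` stands in for the OS generator.

```python
import hashlib
import os
import time


class MixedRng:
    """Toy generator that hashes several inputs together on every call."""

    def __init__(self, extra_sources=()):
        # Internal state is carried between calls and never handed out.
        self._state = hashlib.sha512(b"initial state").digest()
        # Each extra source is a callable taking a byte count (hypothetical API).
        self._extra_sources = tuple(extra_sources)

    def random_bytes(self, n=32):
        if not 0 < n <= 32:
            raise ValueError("this sketch returns at most 32 bytes per call")
        h = hashlib.sha512()
        h.update(self._state)        # state from earlier runs
        h.update(os.urandom(32))     # operating system generator
        for source in self._extra_sources:
            h.update(source(32))     # any additional generator
        h.update(time.perf_counter_ns().to_bytes(8, "big"))  # weak timing input
        digest = h.digest()
        self._state = digest[32:]    # second half becomes the new state
        return digest[:n]            # first half is the output
```

Because every input is fed through the hash, a source that returns constant bytes does not make the output repeat as long as at least one other input still varies.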

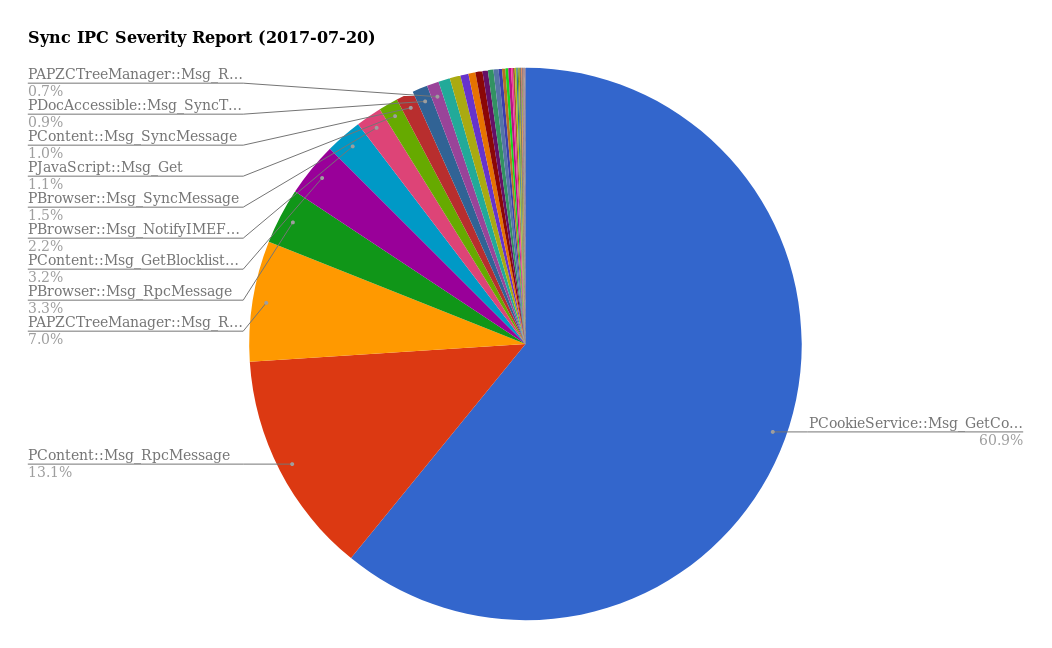

I want to talk about this disconnecting service bits feature. It's sort of a minutia that I wouldn't normally mention, but it's interesting to talk about now because I think there's a lot of misunderstanding. A little bit of background first. Bitcoin's partition resistance is really driven by many different heuristics to try to make sure that a node is not isolated from the network. If a node is isolated from the network then, because of the blockchain proof-of-work, you're relatively safe, but you're still denied service. Consequently, the way that bitcoin does this is that we're very greedy about keeping connections that we previously found to be useful, such as ones that relayed us transactions and blocks and have high uptime. The idea is that if there's an attacker that tries to saturate all the connections on the network, he'll have a hard time doing it because his connections came later, and everyone else is preferring good working connections. The downside of this is that the network can't handle sudden topology changes. You can't go from everyone being connected to everyone, to all of us disconnecting from everyone and connecting to different people. You can't do that because you blow away all of that management state, and you can end up in situations where nodes are stuck and unable to connect to other nodes for long periods of time because they have lost all of their connections.

When we created segwit, which itself required a network topology change, the way we handled this was by front-loading the topology change to be more safe about it. So when you installed v0.13.1, your node made its new connections differently than prior nodes. It preferred to connect to other segwit-capable peers. And our rationale for doing that was that if something went wrong, if there weren't enough segwit-capable peers, if, you know, this caused you to take a long time to get connected, then that's fine because you just installed an upgrade and perhaps you weren't expecting things to go perfectly. But it also means that it doesn't happen to the whole network all at once, because people applied that upgrade over the course of months. So you didn't go from being connected one second to some totally different topology immediately. It was a very gradual change and avoided thundering herds of nodes attempting to connect to the few segwit-capable peers at first. So that's what we did for segwit, and it worked really well.

Recently... there was a spin-off of Bitcoin, the Bitcoin Cash altcoin, which for complex political insanity reasons used the bitcoin peer-to-peer port and the bitcoin p2p magic and was basically indistinguishable on the bitcoin network from a bitcoin node. And so, what that meant was that the bcash nodes would end up connected to bitcoin nodes, and bitcoin nodes likewise, sort of half-talking to each other and wasting each other's connection slots. Don't cross the streams. This didn't really cause much damage for bitcoin because there weren't that many bcash connections at the time, but it did cause significant damage to bcash and in fact it still does to this day because if you try to bring up a bcash node it will sync up to the point where they diverged, and it will often sit for 6-12 hours to connect to another bcash node and learn about its blocks. In bcash's case, it was pretty likely to get disconnected because the bcash transactions are consensus-invalid under the bitcoin rules. So it would trigger banning, just not super fast.

There is another proposed spin-off called segwit2x, which is not related to the segwit project. And S2X has the same problem but much worse. It's even harder for it to get automatically banned, because it doesn't have strong replay protection. And so it will take longer for nodes to realize that they are isolated. There's a risk here that their new consensus rules could activate for their nodes and you could have a node that was connected to only s2x peers. Your node isn't going to accept their blockchain because it's consensus-incompatible, and likewise they're not going to accept anything from you, and you could be isolated for potentially hours. If you're a miner then you could be in this situation for hours and it would create a big mess. So v0.15 will disconnect any peer which is setting service bits 6 and 8, which are the bits that s2x and bcash set. It will continue to do this until 2018-08-01. So whenever nodes are detected that are setting these bits, it just immediately disconnects them. This reduces the extent to which these otherwise honest users inadvertently DoS attack each other.

The developer of S2X showed up and complained about premature partitioning, pointing out that it only really needs to do this at the moment their new rules activate. But as I have just explained, the network can't really handle the sudden topology change all at once. And we really think that this concern about partitioning is basically unjustified because S2X nodes will still happily connect to each other and to older versions. We know from prior upgrades that people are still happily running v0.12, v0.10 nodes out on the network. It would take years for S2X nodes to find nothing for them to connect to... but their consensus rules will change 2 months after the v0.15 release. So the only risk to them is if the entire bitcoin network upgrades to v0.15 within 2 months, which is just not going to happen. And if it does happen, then people will set up nodes for compatibility. Since there was some noise created about that, I wanted to talk about it.
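The disconnect rule described here amounts to a bitmask test with an expiry date. The sketch below is not the Bitcoin Core source. It assumes that "bits 6 and 8" are counted from 1 (so they correspond to `1 << 5` and `1 << 7`) and that the cutoff falls at midnight UTC on 2018-08-01; the function and constant names are made up for illustration.

```python
from datetime import datetime, timezone


def service_bit_mask(nth_bit):
    """Mask for the n-th service bit, counting from 1 (assumed convention)."""
    return 1 << (nth_bit - 1)


# Bits named in the talk; the 1-based numbering is an assumption.
DISCONNECT_MASK = service_bit_mask(6) | service_bit_mask(8)

# Expiry of the rule; the exact time of day is an assumption.
CUTOFF = datetime(2018, 8, 1, tzinfo=timezone.utc)


def should_disconnect(peer_services, now):
    """True if the peer advertises either flagged bit before the cutoff."""
    return now < CUTOFF and (peer_services & DISCONNECT_MASK) != 0
```

The expiry makes the rule temporary: after the cutoff the bits can be reused for other purposes without older nodes dropping those peers forever.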

OK, time for a drink. Just water. ((laughter))

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=42m25s

There's lots of really cool things going on. And often in the bitcoin space people are looking for the bitcoin developers to set a roadmap for what's going to happen. But the bitcoin project is an open collaboration, and it's hard to do anything that's like a roadmap.

I have a quote from Andrew Morton about the Linux kernel developers, which I'd like to read, where he says: "Instead of a roadmap, there are technical guidelines. Instead of a central resource allocation, there are persons and companies who all have a stake in the further development of the Linux kernel, quite independently from one another: People like Linus Torvalds and I don’t plan the kernel evolution. We don’t sit there and think up the roadmap for the next two years, then assign resources to the various new features. That's because we don’t have any resources. The resources are all owned by the various corporations who use and contribute to Linux, as well as by the various independent contributors out there. It's those people who own the resources who decide."

It's the same kind of thing that also applies to bitcoin. What's going to happen in bitcoin development? The real answer to that is another question: what are you going to make happen in bitcoin development? Every person involved has a stake in making it better and contributing. Nobody can really tell you what's going to happen for sure. But I can certainly talk about what I know people are working on and what I know other people are working on, which might seem like a great magic trick of prediction but it's really not I promise.

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=43m45s

So the obvious one is getting segwit fully supported in the wallet. In prior versions of bitcoin, we had segwit support but it was really intended for testing, and it's not exposed in the GUI. All the tests use it, it works fine, but it's not a user-friendly feature. There's a good reason why we didn't go and put it in the GUI in advance, and that's that people might have used segwit before its rules were enforced and they would have had their funds potentially stolen. Also just in terms of allocating our own resources, we thought it was more important to make the system more reliable and faster, and we didn't know when segwit would activate.

In any case, we're planning on doing a short release right after v0.15.0 with full support for segwit including things like sending to bip173 (bech32) addresses which Pieter Wuille presented here a few months back. Amusingly, this might indirectly help with the bcash spin-off for example where it uses the same addresses as bitcoin and people have already lost considerable amounts of money by sending things to the wrong addresses. So introducing a new address type into bitcoin will indirectly help this situation, unfortunately there's really nothing to prevent people from copying bitcoin address format in a reasonable way.

There are other wallet improvements that people are working on. I had mentioned previously this evening about this fee bumping capability. There's an idea for fee bumping which is that the wallet should keep track of all the things you're currently trying to pay, and whenever you make a new payment, it should recompute the transactions for the things you're trying to pay, to update them and potentially increase the fees at the time. It could also concurrently create timelocked alternative versions of the transactions that pay higher fees, so that you can pre-sign them and have them ready to go... So we went to go design this out a few months back, and we found that there were cases caused by transaction malleability that were hard to solve, but now with segwit activated, we should be able to support this kind of advanced fee bumping in the wallet, and that should be pretty cool.

There is support being worked on for hardware wallets and easy off-line signing. You can do offline signing with Bitcoin Core today but it's the sort of thing that even I don't love. It's complicated. Andrew Chow has a bip proposal recently posted to the mailing list for partially signed bitcoin transactions (see also hardware wallet standardization things). It's a format that can carry all the data that an offline wallet needs for signing, including hardware wallets. The deployment of this into Bitcoin Core is much easier for us to do safely and efficiently with segwit in place.

Another thing that people are working on is branch-and-bound coin selection to produce changeless transactions much of the time. So this is Murch's design which Andrew Chow has been working on implementing. There's a pull request that barely missed going into v0.15, but the end result of this will be transactions that are less expensive for users and a more efficient network, because it's creating change outputs much less often.
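A rough sketch of the branch-and-bound idea: search over include/exclude decisions for each coin, and abandon a branch as soon as it overshoots or can no longer reach the target. This is a simplified illustration and not the pull request's code. The name `select_changeless`, the `tolerance` parameter (standing in for how much overpayment is acceptable instead of creating change) and the `max_tries` cap are assumptions, and fees are ignored.

```python
def select_changeless(utxo_values, target, tolerance, max_tries=100_000):
    """Return values summing to within [target, target + tolerance], or None.

    Depth-first search over coins sorted largest first. The recursion is as
    deep as the number of coins, so this sketch suits small wallets only.
    """
    values = sorted(utxo_values, reverse=True)
    # suffix_sums[i] = sum(values[i:]); used to prune unreachable branches.
    suffix_sums = [0] * (len(values) + 1)
    for i in range(len(values) - 1, -1, -1):
        suffix_sums[i] = suffix_sums[i + 1] + values[i]
    tries = 0

    def search(i, total, chosen):
        nonlocal tries
        tries += 1
        if tries > max_tries or total > target + tolerance:
            return None              # bound: gave up, or overshot the window
        if total >= target:
            return list(chosen)      # inside the window: no change needed
        if total + suffix_sums[i] < target:
            return None              # bound: remaining coins cannot reach target
        chosen.append(values[i])     # branch 1: include coin i
        found = search(i + 1, total + values[i], chosen)
        chosen.pop()
        if found is None:            # branch 2: skip coin i
            found = search(i + 1, total, chosen)
        return found

    return search(0, 0, [])
```

For example, with coins of 100, 60, 45, 30 and 5 units, a target of 95 and a tolerance of 2, the search finds 60 + 30 + 5. When no combination fits, the function returns `None` and a wallet would fall back to ordinary selection with a change output.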

Obviously I mentioned the multiwallet feature before... it's going to get supported in the GUI.

There are several pull requests in flight for full-block lite mode, where you run a bitcoin node that downloads the full blocks, so that you have full privacy, but doesn't do full validation of the past history, so that you have instant start.

There's some work on hashed timelock contracts (HTLCs) so that you can do more interesting things with smart contracts. Checksequenceverify (bip112) transactions which can be used for various security measures like cosigners that expire after a certain amount of time. And there's some interesting work going on for separating the Bitcoin Core GUI from the backend process, and there are patches that do this, but I don't know if those patches will be the ones that end up getting merged but that work is making progress right now.

There are interesting things going on with network and consensus. One is a proposal for rolling UTXO set hashes (PR #10434). This is a design to basically compute a hash of the UTXO set that is very efficient to incrementally update every block, so that you don't need to go through the entire UTXO set to compute a new hash. This can make it easier to validate that a node isn't corrupted. But it also opens up new potential for syncing a new node-- where you say you don't want to validate history from before a year ago, and you want to sync up to the state as of that point and then continue on further. That's a security trade-off, but we think we have ways of making that more realistic. We have some interesting design questions open-- there are two competing approaches and they have different performance tradeoffs, like different performance in different cases.
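To make "incrementally updatable set hash" concrete, here is a toy multiplicative construction: each element is hashed to a number, and the set hash is the product of those numbers modulo a prime, so adding is a multiplication and removing is a multiplication by the inverse. This is an illustrative sketch only and is not necessarily either of the approaches under discussion in the pull request. The modulus is far too small for real security, and the class and function names are invented.

```python
import hashlib

# Toy modulus (a Mersenne prime). An assumption for illustration only:
# a real design would need a much larger group.
P = 2**127 - 1


def element(item):
    """Map an item (bytes) to a nonzero number modulo P."""
    value = int.from_bytes(hashlib.sha256(item).digest(), "big") % P
    return value or 1


class RollingSetHash:
    """Order-independent hash of a set with cheap add and remove."""

    def __init__(self):
        self.acc = 1  # hash of the empty set

    def add(self, item):
        self.acc = self.acc * element(item) % P

    def remove(self, item):
        # Multiply by the modular inverse (Fermat's little theorem).
        self.acc = self.acc * pow(element(item), P - 2, P) % P
```

Processing a block then means calling `remove` for every spent output and `add` for every created one; the result equals the hash computed from scratch over the resulting set, regardless of the order of operations.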

We've been doing work on signature aggregation. You can google for my bitcointalk post on signature aggregation. The idea is that basically if you have a transaction with many inputs, maybe it's multisig or with many participants, it's possible with some elliptic curve math to have just a single signature covering all of those inputs even if they are keys held by related but separate parties. This can be a significant bandwidth improvement for the system. Pieter Wuille and Andrew Poelstra and myself presented a paper at Financial Crypto 2017 about what we're proposing to implement here and the risks and tricky math problems that have to be solved. One of the reviewers of our paper found an even earlier paper than ours that we completely missed, which implemented something that was almost identical to what we were doing, and it had even better security proofs than what we had, so we switched to that approach. We have an implementation of the backend and we're playing around now with performance optimizations because they have some implications with regards to how the interface looks for bitcoin. One of the cool things that we also get out of this at the same time is what's called batch aggregation where you can take multiple independent transactions and validate their signatures more efficiently in batch than you could by validating them one at a time.

The byte savings from using signature aggregation-- we ran through a simulation of the prior bitcoin history and asked, if the prior bitcoin history had used signature aggregation, how much smaller would the blockchain be? Well, the amount of savings changes over time because the aggregation gains change depending on how much multisig you're doing and how many inputs transactions have. Early on in bitcoin, it would have saved hardly anything. But later on, it stabilized out at around 20%, and it would go up if more multisig was in use or if more coinjoins were in use, because this aggregation process incentivizes coinjoin by making the fees lower for the aggregate.

When this is combined with segwit, if everyone is using it, the savings are about 20%. So it's a little less than the byte savings because of the segwit weight calculations. Now, what this scheme does is that it reduces the size of the signature to just one signature per transaction but the amount of computation done to verify it is still proportional to the number of public keys going into it, but we're able to use the batching operation to combine them together and make it go much faster. This chart shows the different schemes we've been experimenting with. It gets faster as more keys are involved. At the 1,000 keys level, so if you're making a transaction with a lot of inputs or a lot of multisig or batch validation, then we're at the point where we can get a 5x speedup over validating the signatures one at a time. So it's a pretty good speedup. And this has been mostly work that Pieter Wuille, Andrew Poelstra and Jonas Nick have been focusing on, trying to get all the performance out of this that we can.

Other things going on with the network and consensus... There's bip150 and bip151 (see the bip151 talk) for encrypted and optionally authenticated p2p. I think the bips are half-way done. Jonas Schnelli will be talking about these in more detail next week. We've been waiting on network refactors before implementing this into Bitcoin Core. So this should be work that comes through pretty quickly.

There's been work ongoing regarding private transaction announcement (the Dandelion paper and proposed dandelion BIP). Right now in the bitcoin network, there are people who connect to nodes all over the network and try to monitor where transactions are originating in an attempt to deanonymize people. There are countermeasures against this in the bitcoin protocol, but they are not especially strong. There is a recent paper proposing a technique called Dandelion which makes it much stronger. The authors have been working on an implementation and I've sort of been guiding them on that-- either they will finish their implementation or I'll reimplement it-- and we'll get this in relatively soon, probably in the v0.16 timeframe. It requires a slight extension to the p2p protocol where you tell a peer that you would like it to relay a transaction but only to one peer. The idea is that transactions are relayed in a line through the network, just one node to one node to one node, and then, basically after a random timeout, they hit a spot where they expand to everything: they curve through the network and then explode everywhere. Their paper makes a very good argument for the improvements in privacy of this technique. Obviously, if you want privacy then you should be running Bitcoin Core over Tor. Still, I think this is a good technique to implement as well.

Q: Is the dandelion approach going to affect how fast your transactions might get into a block? If I'm not rushed...?
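The two phases described above, a one-peer-at-a-time line followed by ordinary flooding, can be simulated on a toy graph. This is a sketch and not the proposed protocol: it models the random switch-over as a fixed per-hop probability (`fluff_probability`, an assumption) rather than a timeout, and the function name and graph representation are invented for the example.

```python
import random
from collections import deque


def dandelion_relay(graph, origin, fluff_probability=0.1, rng=None):
    """Simulate one transaction; return (stem_path, nodes_reached).

    `graph` maps each node to the set of its peers.
    """
    if rng is None:
        rng = random.Random()

    # Phase 1 (the line): forward to exactly one peer per hop until a
    # random draw says it is time to switch phases.
    stem = [origin]
    current = origin
    while graph[current] and rng.random() >= fluff_probability:
        current = rng.choice(sorted(graph[current]))
        stem.append(current)

    # Phase 2 (the explosion): ordinary flooding from wherever the line ended.
    reached = {current}
    queue = deque([current])
    while queue:
        node = queue.popleft()
        for peer in graph[node]:
            if peer not in reached:
                reached.add(peer)
                queue.append(peer)
    return stem, reached
```

An observer who sees the flood start at the last node of `stem` learns little about `origin`, which is the privacy gain the paper argues for. A lower `fluff_probability` gives a longer line and more delay before the flood begins.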

A: The dandelion approach can be tuned. Even with relatively modest delays, when used in an anonymous manner, it should only increase the time it takes for your transaction to get into a block by the time it takes for the transaction to reach the whole network. This is only on the order of 100s of milliseconds. It's not a big delay. You can choose to not use it, if you want.

Q: ....

A: Right. So, the original dandelion paper is actually not robust. If a peer drops the transaction being forwarded and doesn't forward it on further, then your transaction will disappear. That was my response to the author and they were working on a more robust version with a timeout. Every node along the stem that has seen the transaction using dandelion, starts a timer and the timer is timed to be longer than the propagation time of the network. If they don't see the transaction go into the burst mode, then they go into burst mode on their own.

Q: ....

A: Yeah. I know, it's not that surprising.

Q: What if you send it to a v0.15 node, wouldn't that invalidate that approach completely?

A: No, with dandelion there will be a service bit assigned to it. You'll know which peers are compatible. When you send a transaction with dandelion, it will traverse only through a graph of nodes that are capable of it. When this is ready, I am sure there will be another talk on this, maybe by the authors of the paper.

Q: It's also really, it's a great question, for applications.. trust.

A: Yes, it could perhaps be used by botnets, but so could bitcoin transactions anyway.

Work has started on something called "peer interrogation" to basically more rapidly kick off peers that are on different consensus rules. If I have received an invalid block from one peer, then I can go to all my other peers and say hey, do you have this block? Anyone else that says yes, that they have a block you consider invalid, you can disconnect, because obviously they are on different consensus rules than you. There are a number of techniques we've come up with that should be able to make the network a little more robust against crazy altcoins running on the same freaking p2p port. It's mostly robust against this because we also have to tolerate attackers who aren't, you know, going to be nice. But it would be nice if these altcoins could distinguish themselves from outright malicious behavior. It's unfortunate when otherwise honest users use software that makes them inadvertently attack other nodes.

There's been ongoing work on improved block fetch robustness. So right now the way that fetching works is that, assuming you're not using compact blocks high-bandwidth opportunistic send where peers send blocks even without you asking for it, you will only ask for blocks from a single peer at a time. So if I say give me a block, and he falls asleep and doesn't send it, I will wait for a long multi-minute timeout before I try to get the block from someone else. So there's some work ongoing to have the software try to fetch a block from multiple peers at the same time, and occasionally waste time.

Another thing that I expect to come in relatively soon is ... proposal for gcs-lite-client BIP for bloom "map" for blocks. We'll implement that in Bitcoin Core as well.

All of the things that I have just talked about are things where I've seen code for it and I think they are going to happen. Going a little further out to where I haven't seen code for it yet, well, segwit made it much easier to make enhancements to the script system to replace and update it. There are several people working on script system upgrades including full replacement script alternative systems that are radically more powerful. It's really cool, but it's also really hard to say when something like that could become adopted. There are also other improvements being proposed, like being able to do merkle tree lookups inside of bitcoin script, allowing you to build massively more scalable multisig like Pieter Wuille presented on a year ago for key tree signatures.

There's some work done to use Proof-of-Work as an additional priority mechanism for connections (bip154). Right now in bitcoin there's many different ways that a peer can be preferred, such as doing good work, being connected for a long time, having low latency, etc. Our security model for peer connections is that we have lots of different ways and we hope that an attacker can't defeat all of them. We'd like to add proof-of-work as an additional way that we can compute connection slot priority.

There's been some work done on using private information retrieval for privately fetching transactions on a pruned node. So say you have a wallet started on a pruned node, you don't have the blockchain stored locally and you need to find out about your transactions. Well if you just connect to a normal server, you're going to reveal your addresses to them and that's not very private. There are cryptographic tools that allow you to query databases and look things up in them without revealing your private information. They are super inefficient. But bitcoin transactions are super small, so I think there's a good match here and you might see in the future some wallets using PIR methods to look things up.

I've been doing some work on mempool reconciliation. Right now, transactions in the mempool just get there via the flooding mechanism. If you start up a node clean it will only learn about new transactions and won't have anything in its mempool. This is not ideal because it means that when a new block shows up in the network, you won't be able to exploit the speed of compact blocks (bip152) until you have it running for a day or two and build up your mempool. We do save the mempool across restarts, but if you've been down for a while then that data is no longer useful. It's also useful to have mempool pre-loaded fast because you can use it for better.... there are techniques to efficiently reconcile databases between different hosts, and there's a bitcointalk post that I made about using some of these techniques for mempool reconciliation and I think we might do this in the not-too-distant future.

There has been some work on doing alternative serialization for transactions, which could be optionally used on disk or on a peer-by-peer basis. The compact serialization that Pieter Wuille has proposed gives a 25% reduction in the size of transactions. This particular feature is interesting to me outside of the context of Bitcoin Core because Blockstream has recently announced its satellite system and it could really use a 25% reduction in the amount of bandwidth required to send transactions.

Many important improvements in v0.15. I think the most important is the across-the-board 50% speedup which is able to get sync time back to where it was in February 2017. Hopefully it means that additional load created as people start using segwit will be less damaging to the system. There are many exciting things being developed, and I didn't even talk about the more speculative things, which I am sure people will have questions about. There are many new contributors joining the project and ramping up. There are several organizations now paying people. I don't know if they want attention, but I think it's great that people can contribute on a more consistent basis. Anyone is welcome to contribute, and we're particularly eager to meet people who can test the GUI and wallet because those get the least love in the system today. So, thank you, and let's move to some open questions.

https://www.youtube.com/watch?v=nSRoEeqYtJA&t=1h3m13s

First priority is for questions for the presentation, but after that, let's open it up to whatever. Also wait for the mic.

Q: For the new UTXO storage format, how many bytes is it allocating to the index for the output for it?

A: It uses a compact int for it. In fact, it's a little bit-- this format is more efficient, it's 15% larger but it might not think about a lot. leveldb will compress entries itself.

Q: What if some of the participants of the bitcoin network decided to not activate another soft-fork like schnorr signatures?

A: I mentioned the signature aggregation stuff, which would require schnorr signatures, yeah that's a soft-fork and it would have to be activated in the network. So we did have kind of a long slog to get segwit activated. (Someone tosses Greg a segwit hat)... Obviously people are going to do ... but at the end of the day, it's all the users in the system that decide what the rules are. Especially for a backwards compatible rule like signature aggregation that we could use, well, miners are mining to make money, and certainly there are many factors involved in this but mining operations are very expensive, and a miner that chooses not to follow the rules of the bitcoin network is a miner that will soon be bankrupt. Users have the power in bitcoin. I wasn't a big fan of bip148 although I think it has been successful. I think it was way too hastily carried out. I don't anticipate problems with activating signature aggregation in the future, but that's because I trust the users to get this activated and make use of it. Thanks.

Q: A few rapid fire questions I guess. For the new chainstate database, is the key just the transaction id and then the output index?

A: Yes that's the key. It's both.

Q: Is it desirable to ditch openssl because it's heavy weight?

A: No, openssl was not well designed. It was previously used everywhere in bitcoin and it was not designed or maintained in a way that is suitable for consensus code. They changed their signature validation method in a backwards incompatible way. For all the basic crypto operations it's better for us to have a consensus critical implementation that is consistent. As far as the RNG goes, I think nobody has read that code and still has a question about why we're removing it-- it's time tested yes, but it's obviously bad code and it needs to go.

Q: ...

A: Yes. The comment was made that all of openssl, the only thing that it has going for it is that it is time tested. Sure. That can't be discounted.

Q: You mentioned in fee estimation that miners might censor transactions. Have we seen that before?

A: Well, we have seen it with soft-forks. A newly introduced soft-fork, for someone who hasn't upgraded, will look like censorship of a transaction. There are some scary proposals out there, people have written entire papers on bribing miners to not insert transactions. There are plenty of good arguments out there for why we're not going to see that in the future, if mining is decentralized. But we still need to have a system that is robust against it potentially happening.

Q: So the Core of this point was that it created a buffer for a register that was like in moving thing for certain number of transactions for a certain period of time, so you kept track of the outputs..

A: The outputs are the public keys.

Q: So you keep track of those and this was the bulk of the...?

A: Of the performance improvement.

Q: 50%?

A: Yes. The blockchain is pretty big. There have been over a billion transaction outputs created. Small changes in the performance of finding outputs and deleting them have big impacts on performance.

Q: Is it possible for a non-bitcoin blockchain application, ...

A: Yes I think the same techniques for optimization here would be applicable to any system with general system properties. Our focus in Bitcoin Core is on keeping bitcoin running.

Q: You mentioned at the end that GUI and wallet are two areas where you need more help. Can you expand on it?

A: There's some selection bias in the bitcoin project. Bitcoin developers are overwhelmingly system programmers and we don't even use GUIs generally. As a result of that, we also attract other system programmers to the project. There are some people that work on the GUI, but not so many. We don't have any big design people working on it, for example. There's nobody doing user testing on the wallet. We don't expect Bitcoin Core to be for grandma, it's expected to be industrial machinery, but there are still a lot of user factors that there aren't a lot of resources going into, and we would like that to be different.

Q: Yeah I can speak about ... I heard ... blockchain... lightning right now. How is that going?

A: So the question is Blockstream has some people working on lightning. I am not one of them but my understanding is that the big news is that the specifications are coming to completion and there's been great interop testing going on. There are other people in this room that are far more expert on lightning than I am, including laolu who can answer all questions about lightning. Yes, he's waving his hand. I'm pleased to see it moving along.

Q: Hi, you mentioned taking out openssl. But someone who is working on secure communication between peers, is that using openssl?

A: Right, no chance of that. Openssl has a lot of stuff going for it-- largely wideployed, but has lots of attack surface. We don't need openssl for secure communication.

sipa: If I can comment briefly on this, the most important reason to use openssl is if you need openssl. For soething like this, we have the expertise to build a much more narrow protocol without those dependencies.

A: We also have some interesting things different for bitcoin, normally our connections are unathenticated because we have anonymous peers... there are some interesting tricks for that. I can have multiple keys to authenticate to peers, so we can have trace-resistance there, and we can't do that in openssl. Your use of authentication doesn't make you super-identifiable to everyone you conect with.

Q: Question about.. wondering when, what do you think would need to happen for a 1.0 version? More like longer term? Is there some kind of special requirement for a 1.0 release? I know that's far out.

A: It's just numbers, in my opinion. The list of things that I'd like to see in Bitcoin Core and the protocol would be improved fungibility. It's a miles-long list. I expect it to be miles-long for the rest of my life. But maybe this should have nothing to do with v1.0 or not. There are many projects that have changed version numbers purely for marketing reasons, like Firefox and Chrome. There are versions of bitcoin altcoins that use version 1.14 to try to say they are 1 version greater... I roll my eyes at that, it sometimes contributes to user confusion, so I wouldn't be surprised if the number gets ludicrously increased to 1000 or something. In terms of the system being complete, I think we have a long way to go. There are many credible things to do.

Q: There was recently a fork of the bitcoin network that was polite enough to include replay protection. But people are working on another fork that might not include replay protection. So is there a way to defend against this kind of malicious behavior?

A: Well I think that the most important thing is to encourage people to be sensible. But if they won't be sensible and add replay protection, then there are many features that give some amount of automatic replay protection. Ethereum is replay vulnerable because it uses an accounts model. In bitcoin every time you spend you reference your coins, so it's automatic replay protection. There's no problem with replay between testnet and bitcoin or litecoin and bitcoin, it's the inherent replay protection built-in. Unfortunately, this doesn't work if you literally copy the history of the system. The best way probably to deal with replay is to take advantage of replay prevention by making your transaction spend outputs that don't exist on the other chain. People have circulated patches in the past that allow miners to create 0-value outputs that anyone can spend, and then wallets could pick up those outputs and spend them. If you spend an output created by a miner that only exists on the chain that you want to spend on, then you're naturally replay-protected. If that split happens without replay protection, there will be tools to help, but it will still be a mess. You see continued snafus in ethereum and ethereum classic.
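
The output-based replay protection described in this answer can be sketched with a toy UTXO model. This is only an illustration under simplifying assumptions: signatures, amounts and scripts are ignored, and the names `Tx`, `Chain`, `funding` and `marker_a` are hypothetical, not anything from Bitcoin Core. It shows that a transaction is only valid on a chain where every output it references exists, so adding an input that exists on one chain only makes the transaction unreplayable on the other.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tx:
    txid: str
    inputs: tuple    # outpoints being spent, as (txid, index) pairs
    n_outputs: int   # number of new outputs this transaction creates


@dataclass
class Chain:
    utxos: set = field(default_factory=set)

    def is_valid(self, tx):
        # Toy rule: every referenced outpoint must be unspent on THIS chain.
        return all(outpoint in self.utxos for outpoint in tx.inputs)

    def apply(self, tx):
        if not self.is_valid(tx):
            return False
        self.utxos -= set(tx.inputs)
        self.utxos |= {(tx.txid, i) for i in range(tx.n_outputs)}
        return True


# Two chains that copied the same history share the same unspent outputs.
shared_history = {("funding", 0)}
chain_a = Chain(set(shared_history))
chain_b = Chain(set(shared_history))

# A plain spend of a shared output is valid on both chains, so it can be replayed.
plain = Tx("plain", (("funding", 0),), 1)
replayable = chain_a.is_valid(plain) and chain_b.is_valid(plain)

# A miner on chain A creates an output that exists only on chain A.
chain_a.apply(Tx("marker_a", (), 1))

# Spending that chain-specific output alongside the shared one ties the
# transaction to chain A: chain B has no such outpoint and rejects it.
protected = Tx("protected", (("funding", 0), ("marker_a", 0)), 1)
valid_on_a = chain_a.is_valid(protected)
valid_on_b = chain_b.is_valid(protected)
```

In this sketch `replayable` and `valid_on_a` are true while `valid_on_b` is false, which is the property the answer relies on.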

Q: There's an altcoin that copied the bitcoin state that you can use identical equipment on.. and it will be more profitable to mine, and it will bounce back and forth between miners.

A: Well, the system works as designed in my opinion. Bcash uses the same PoW function. They changed their difficulty adjustment algorithm. They go into hyperinflation when they lose hashrate. And then those blocks coming in once every few minutes makes it more profitable to mine. Miners are trying to make money. They have to factor in the risk that the bcash coins will become worthless before they can sell them. Miners move them over, bitcoin remains stable, transaction fees go up, which has happened now twice because bitcoin becomes more profitable to mine just from the transaction fees. At the moment, bcash is in a low difficulty period but bitcoin is more profitable to mine because of transaction fees. Bitcoin's update algorithm is not trying to chase out all possible altcoins. At the moment it works fine, it's just a little slow.
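
The back-and-forth described here comes down to comparing expected revenue per hash on each chain. A rough sketch follows, assuming the usual approximation that a block at difficulty `d` takes about `d * 2**32` hashes to find. The prices, fee totals and difficulty values below are made-up inputs chosen only to show the direction of each effect; they are not measurements of either chain.

```python
def revenue_per_hash(subsidy, fees, price, difficulty):
    """Expected fiat revenue for one hash attempt on a chain."""
    expected_hashes_per_block = difficulty * 2**32  # standard approximation
    return (subsidy + fees) * price / expected_hashes_per_block


# Hypothetical inputs, for illustration only.
SUBSIDY = 12.5  # coins per block on both chains

# Chain A: higher price, higher difficulty, modest fees.
a_low_fees = revenue_per_hash(SUBSIDY, fees=1.0, price=4000.0, difficulty=1_000_000)

# Chain B at the same difficulty is far less attractive...
b_normal = revenue_per_hash(SUBSIDY, fees=0.0, price=500.0, difficulty=1_000_000)

# ...until its difficulty drops sharply after losing hashrate.
b_low_difficulty = revenue_per_hash(SUBSIDY, fees=0.0, price=500.0, difficulty=100_000)

# When hashrate leaves chain A, its blocks slow down and fees rise,
# which can make chain A the better choice again.
a_high_fees = revenue_per_hash(SUBSIDY, fees=4.0, price=4000.0, difficulty=1_000_000)

print(b_normal < a_low_fees < b_low_difficulty < a_high_fees)
```

With these particular made-up numbers the ordering flips twice, matching the oscillation described in the answer; real miners would also discount for the risk of not being able to sell the coins.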

Q: ... further... lower.. increase.

A: Well, ... one large miner after segwit activated seemed to lower their blocksize limit, probably related to other unrelated screwups. The system seems to be self-calibrating and it is working as expected, fees are high at the moment, but that's all the more reason for people to adopt segwit and take advantage of lower fees. I'm of the opinion that the oscillation is damaging to the bcash side because it's pushing them into hyperinflation. Because their block size is way larger, and they are producing 10 kb blocks, I don't know what will pay people to mine it as they wear out their inflation schedule. And it should have an accelerating inflation rate because of how that algorithm works. Some of the bcash miners are trying to stop the difficulty adjustments because they realize it's bad. I'm actually disappointed that bcash is having these problems. It's attractive for people to have some alternative if their idea of the world is that bitcoin should have no block size limit. That's not a world that I can support based on the science we have today; if people want it, they can have it, and I'm happy to say here go use that thing, but that thing is going off the rails maybe a little faster than I thought, but we'll see.

Q: If I give you a chip that is a million times faster than the nvidia chip, how much saving would you have the mining?

A: A million times faster than nvidia chip... bitcoin mining today is done with highly specialized ASICs. How long until... So, bitcoin will soon have done 2^88 cumulative sha256 operations. Oh yes, so we're over 2^88 sha256 operations done for mining. This is a mind-bogglingly large number. We crossed 2^80 the other year. If you started a core 2 duo at the beginning of time, then it would have just done 2^80 work when 2^80 was crossed. We have just crossed 256x more work than that. So that's why something like a million times faster nvidia chip still wouldn't hold a candle to the computing power from all these sha256 asics. I would recommend against 160-bit hashes for any cases where collisions are a concern. We use them for addresses, but addresses aren't a collision concern, although segwit does change it for that reason.
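
The arithmetic behind this answer can be checked in a few lines, reading the work figures as powers of two (2^88 and 2^80), which is what the stated 256x ratio implies. The sketch also uses the generic birthday bound, under which finding a collision in an n-bit hash takes on the order of 2^(n/2) operations; that bound is a textbook assumption rather than something spelled out in the talk.

```python
import math

TOTAL_WORK_BITS = 88         # cumulative mining work cited in the talk: about 2^88 hashes
EARLIER_MILESTONE_BITS = 80  # the milestone crossed earlier: about 2^80 hashes

# How much more work 2^88 is than 2^80.
ratio = 2**TOTAL_WORK_BITS // 2**EARLIER_MILESTONE_BITS

# A chip that is a million times faster only adds about 20 bits of work
# per unit time over the baseline chip.
speedup_bits = math.log2(1_000_000)


def collision_work_bits(hash_bits):
    """Generic birthday bound: roughly 2^(n/2) operations for an n-bit hash."""
    return hash_bits // 2


print(ratio)                     # 256
print(round(speedup_bits, 2))    # 19.93
print(collision_work_bits(160))  # 80
```

A 160-bit hash therefore has a collision cost of roughly 2^80 operations, a figure the network's cumulative work has already passed by a factor of 256, which is the reason for the warning about 160-bit hashes where collisions matter.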

Q: There's a few different proposals trying to solve the same thing, like weak blocks, thin blocks, invertible bloom filters. Is there anything in that realm on the horizon? What do you think is the most probable development there?

A: There's a class of proposals called pre-consensus, like weak blocks, where participants come to agreement in advance on what they will put into blocks before it is found. I think those techniques are neat, I've done some work on them, I think other people will work on them. There are many design choices, we could run multiples of these in parallel. We have made great progress on efficient block transmission with FIBRE and compact blocks. We went 5000 blocks ish without an orphan a couple weeks ago, so between FIBRE and v0.14 speedups, we've seen the orphan rate drop, it's not as much of a concern. We might see it pop back up as segwit gets utilized, we'll have to see.

Q: Is rolling utxo hash a segwit precondition?

A: I think most of these schemes wouldn't make use of a rolling utxo set, there's some potential interaction for fast syncing nodes on it. With a lot of these pre-consensus techniques, you can have no consensus enforcement of the rules and the miners just go along with it. They are interesting techniques. There's a paper from 2 years ago called bitcoin-ng which itself can be seen as a pre-consensus technique that talks about some interesting things in this space.

Q: Going 5000 blocks...

A: Yeah, so... Bram is saying that if we go 5000 blocks without an orphan, then this implies that the blocks are going out on the order of 200 ms, that's right. Well actually it's more like 100 ms. Particularly BlueMatt's technique transmits pretty reliably near the speed of light. Lots of ISPs... yeah so, part of the reason why we are able to do this, this is where BlueMatt's stuff is really helpful, he set up a network of nodes that have been carefully placed to connect with each other and be low latency, and this is very difficult and it has taken some work, but it helps out the network.
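
The step from "5000 blocks without an orphan" to a propagation time of 100 to 200 ms can be reproduced with a simple model. This is a back-of-the-envelope sketch, assuming block discovery is a Poisson process with a 600 second mean interval and that an orphan happens whenever a competing block is found while the previous one is still propagating; the function names are illustrative.

```python
import math

BLOCK_INTERVAL_S = 600.0  # target mean time between blocks


def orphan_probability(delay_s):
    """Chance that a competing block is found during a propagation delay."""
    return 1.0 - math.exp(-delay_s / BLOCK_INTERVAL_S)


def delay_for_orphan_rate(rate):
    """Propagation delay that would produce the given orphan rate."""
    return -BLOCK_INTERVAL_S * math.log(1.0 - rate)


# Treat "no orphans in about 5000 blocks" as an orphan rate on the order of 1/5000.
implied_delay_s = delay_for_orphan_rate(1 / 5000)
print(f"{implied_delay_s * 1000:.0f} ms")  # about 120 ms
```

Under this model a rate of 1 in 5000 corresponds to roughly 120 ms of effective propagation delay, while a 200 ms delay would give about 1 orphan in 3000 blocks, which is consistent with the 100 to 200 ms range mentioned in the answer.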

Q: Hi. What do you think about proof-of-stake as a consensus mechanism?

A: There's a couple of things. There have been many proof-of-stake proposals that are outright broken. And people haven't done a good job of distinguishing the proposals. As an example, proof-of-stake in NXT is completely broken and you can just stakegrind it. The reason why people haven't attacked it is because it hasn't been worth it to do so... There are other proposals that achieve very different security properties than what bitcoin achieves. Bitcoin achieves this anonymous, anyone-can-join, very distributed mechanism of security, and the various proof-of-stake proposals out there have different tradeoffs. The existing users can exclude new users by not letting them stake their coins. I think the people working on proof-of-stake have done a poor job of stating their security assumptions and showing exactly what goals they achieve. There's been also some work in this space where they make unrealistic assumptions. There was a recent PoS paper that claimed to solve problems, but the starting assumption was that the users have a synchronous network, which is where all users will receive all the messages in order all the time. If you had a synchronous network then you don't need a blockchain at all. This was one of the starting assumptions in a recent paper on PoS. So I think it's interesting that people are exploring this stuff, but I think a lot of this work is not likely to be productive. I hope that the state of the art will improve... it's tiresome to read papers that claim to solve these problems but then you get to page 14 and it says oh it requires a synchronous network, into the trash. This is a new area, and science takes time to evolve.

Q: Beyond .. bad netcode... what are the highlights for your current threat model for protecting the future of bitcoin?

A: I think that the most important highlight is education. Ultimately bitcoin is a social and economic system. We need to understand it and have a common set of beliefs. Even as constructed today. There are some long term problems related to mining centralization. I am of the belief that they are driven by short-term single shot events where people entered and overbuilt hardware. Perhaps it's a short-term effect. But if it's not, and a few years later we look at this and we see nothing improving, then maybe it's time to get another answer. The bitcoin space is so surprising. Last time I spoke here, the bitcoin market price was $580. We can't allow our excitement about adoption to make bad moves related to technology. I want bitcoin to expand to the world as fast as it can, but no faster.

Q: Hey uh... so it sounds like you, in November whenever s2x gets implemented, and it gets more work than bitcoin, I mean it sounds like you consider it an altcoin it's like UASF territory at what point is bitcoin is bitcoin and what would y'all do with sha256 algorithm if s2x gets more work on it?

A: I think that the major contributors on Bitcoin Core are pretty consistent and clear on their views on s2x, we're not interested and not going along with it. I think it will be unlikely to get more work on it. Miners are going to follow the money. Hypothetically? Well, I've never been of the opinion that more work matters. It's always secondary to following the rules. Ethereum might have had more joules pumped into its mining than bitcoin, although I haven't done the math, that's at least possible. I wouldn't say ethereum is now bitcoin though... just because of the joules. Every version of bitcoin all the way back has had nodes enforcing the rules. It's essential to bitcoin. Do I think bitcoin can hard-fork? Yeah, but all the users have to agree, and maybe that's hard to achieve because we can do things without hard-forks. And I think that's fine. If we can change bitcoin for a controversial change, then I think that's bad because you could make other controversial changes. Bitcoin is a digital asset that is not going to change out from under you. As for future proof-of-work functions, that's unclear. If s2x gets more hashrate, then I think that would be because users as a whole were adopting it, and I think if that was the case then perhaps the Bitcoin developers would go do something else instead of Bitcoin development. It might make sense to use a different proof of work function. Changing a PoW function is a nuclear option and you don't do it unless you have no other choice. But if you have no other choice, yeah, do it.

Q: So sha256 is not a defining characteristic of bitcoin?

A: Right. We might even have to change sha256 in the future for security reasons anyway.

Q: Rusty Russell wrote a series of articles about if a hard-fork is necessary, what does it mean, how much time is required. I'm not saying I'm proposing a hard-fork, but what kinds of changes might be useful to make it easier for us to make good decisions at that point?

A: I don't think the system can decide on what it is. It's inherently external. I am in favor of a system where we make changes with soft-forks, and we use hard-forks only to clean up technical debt so that it's easier to get social consensus on those. I think Rusty's posts are interesting, but there's this property that I've observed in bitcoin where there's an inverse relationship between the distance you are to the code and how ... no, proportional relationship: distance between the code and how viable you think the proposals are. Rusty is very technical, but he's not a regular contributor to Bitcoin Core. He's a lightning developer. This is a pattern we see in other places as well.

I think that was the last question. Alright, thanks.
