Every illness is a story, and Annie Page’s began with the kinds of small, unexceptional details that mean nothing until seen in hindsight. Like the fact that, when she was a baby, her father sometimes called her Little Potato Chip, because her skin tasted salty when he kissed her. Or that Annie’s mother noticed that her breathing was sometimes a little wheezy, though the pediatrician heard nothing through his stethoscope.

The detail that finally mattered was Annie’s size. For a while, Annie’s fine-boned petiteness seemed to be just a family trait. Her sister, Lauryn, four years older, had always been at the bottom end of the pediatrician’s growth chart for girls her age. By the time Annie was three years old, however, she had fallen off the chart. She stood an acceptable thirty-four inches tall but weighed only twenty-three pounds—less than ninety-eight per cent of girls her age. She did not look malnourished, but she didn’t look quite healthy, either.

“Failure to thrive” is what it’s called, and there can be scores of explanations: pituitary disorders, hypothyroidism, genetic defects in metabolism, inflammatory-bowel disease, lead poisoning, H.I.V., tapeworm infection. In textbooks, the complete list is at least a page long. Annie’s doctor did a thorough workup. Then, at four o’clock on July 27, 1997—“I’ll never forget that day,” her mother, Honor, says—the pediatrician called the Pages at home with the results of a sweat test.

It’s a strange little test. The skin on the inside surface of a child’s forearm is cleaned and dried. Two small gauze pads are applied—one soaked with pilocarpine, a medicine that makes skin sweat, and the other with a salt solution. Electrodes are hooked up. Then a mild electric current is turned on for five minutes, driving the pilocarpine into the skin. A reddened, sweaty area about an inch in diameter appears on the skin, and a collection pad of dry filter paper is taped over it to absorb the sweat for half an hour. A technician then measures the concentration of chloride in the pad.

Over the phone, the doctor told Honor that her daughter’s chloride level was far higher than normal. Honor is a hospital pharmacist, and she had come across children with abnormal results like this. “All I knew was that it meant she was going to die,” she said quietly when I visited the Pages’ home, in the Cincinnati suburb of Loveland. The test showed that Annie had cystic fibrosis.

Cystic fibrosis is a genetic disease. Only a thousand American children per year are diagnosed as having it. Some ten million people in the United States carry the defective gene, but the disorder is recessive: a child will develop the condition only if both parents are carriers and both pass on a copy. The gene—which was discovered, in 1989, sitting out on the long arm of chromosome No. 7—produces a mutant protein that interferes with cells’ ability to manage chloride. This is what makes sweat from people with CF so salty. (Salt is sodium chloride, after all.) The chloride defect thickens secretions throughout the body, turning them dry and gluey. In the ducts of the pancreas, the flow of digestive enzymes becomes blocked, making a child less and less able to absorb food. This was why Annie had all but stopped growing. The effects on the lungs, however, are what make the disease lethal. Thickened mucus slowly fills the small airways and hardens, shrinking lung capacity. Over time, the disease leaves a child with the equivalent of just one functioning lung. Then half a lung. Then none at all.

The one overwhelming thought in the minds of Honor and Don Page was: We need to get to Children’s. Cincinnati Children’s Hospital is among the most respected pediatric hospitals in the country. It was where Albert Sabin invented the oral polio vaccine. The chapter on cystic fibrosis in the “Nelson Textbook of Pediatrics”—the bible of the specialty—was written by one of the hospital’s pediatricians. The Pages called and were given an appointment for the next morning.

“We were there for hours, meeting with all the different members of the team,” Honor recalled. “They took Annie’s blood pressure, measured her oxygen saturation, did some other tests. Then they put us in a room, and the pediatrician sat down with us. He was very kind, but frank, too. He said, ‘Do you understand it’s a genetic disease? That it’s nothing you did, nothing you can catch?’ He told us the median survival for patients was thirty years. In Annie’s lifetime, he said, we could see that go to forty. For him, he was sharing a great accomplishment in CF care. And the news was better than our worst fears. But only forty! That’s not what we wanted to hear.”

The team members reviewed the treatments. The Pages were told that they would have to give Annie pancreatic-enzyme pills with the first bite of every meal. They would have to give her supplemental vitamins. They also had to add calories wherever they could—putting tablespoons of butter on everything, giving her ice cream whenever she wanted, and then putting chocolate sauce on it.

A respiratory therapist explained that they would need to do manual chest therapy at least twice a day, half-hour sessions in which they would strike—“percuss”—their daughter’s torso with a cupped hand at each of fourteen specific locations on the front, back, and sides in order to loosen the thick secretions and help her to cough them up. They were given prescriptions for inhaled medicines. The doctor told them that Annie would need to come back once every three months for extended checkups. And then they went home to start their new life. They had been told almost everything they needed to know in order to give Annie her best chance to live as long as possible.

The one thing that the clinicians failed to tell them, however, was that Cincinnati Children’s was not, as the Pages supposed, among the country’s best centers for children with cystic fibrosis. According to data from that year, it was, at best, an average program. This was no small matter. In 1997, patients at an average center were living to be just over thirty years old; patients at the top center typically lived to be forty-six. By some measures, Cincinnati was well below average. The best predictor of a CF patient’s life expectancy is his or her lung function. At Cincinnati, lung function for patients under the age of twelve—children like Annie—was in the bottom twenty-five per cent of the country’s CF patients. And the doctors there knew it.

It used to be assumed that differences among hospitals or doctors in a particular specialty were generally insignificant. If you plotted a graph showing the results of all the centers treating cystic fibrosis—or any other disease, for that matter—people expected that the curve would look something like a shark fin, with most places clustered around the very best outcomes. But the evidence has begun to indicate otherwise. What you tend to find is a bell curve: a handful of teams with disturbingly poor outcomes for their patients, a handful with remarkably good results, and a great undistinguished middle.

In ordinary hernia operations, the chances of recurrence are one in ten for surgeons at the unhappy end of the spectrum, one in twenty for those in the middle majority, and under one in five hundred for a handful. A Scottish study of patients with treatable colon cancer found that the ten-year survival rate ranged from a high of sixty-three per cent to a low of twenty per cent, depending on the surgeon. For heart-bypass patients, even at hospitals with a good volume of experience, risk-adjusted death rates in New York vary from five per cent to under one per cent—and only a very few hospitals are down near the one-per-cent mortality rate.

It is distressing for doctors to have to acknowledge the bell curve. It belies the promise that we make to patients who become seriously ill: that they can count on the medical system to give them their very best chance at life. It also contradicts the belief nearly all of us have that we are doing our job as well as it can be done. But evidence of the bell curve is starting to trickle out, to doctors and patients alike, and we are only beginning to find out what happens when it does.

In medicine, we are used to confronting failure; all doctors have unforeseen deaths and complications. What we’re not used to is comparing our records of success and failure with those of our peers. I am a surgeon in a department that is, our members like to believe, one of the best in the country. But the truth is that we have had no reliable evidence about whether we’re as good as we think we are. Baseball teams have win-loss records. Businesses have quarterly earnings reports. What about doctors?

There is a company on the Web called HealthGrades, which for $7.95 will give you a report card on any physician you choose. Recently, I requested the company’s report cards on me and several of my colleagues. They don’t tell you that much. You will learn, for instance, that I am in fact certified in my specialty, have no criminal convictions, have not been fired from any hospital, have not had my license suspended or revoked, and have not been disciplined. This is no doubt useful to know. But it sets the bar a tad low, doesn’t it?

In recent years, there have been numerous efforts to measure how various hospitals and doctors perform. No one has found the task easy. One difficulty has been figuring out what to measure. For six years, from 1986 to 1992, the federal government released an annual report that came to be known as the Death List, which ranked all the hospitals in the country by their death rate for elderly and disabled patients on Medicare. The spread was alarmingly wide, and the Death List made headlines the first year it came out. But the rankings proved to be almost useless. Death among the elderly or disabled mostly has to do with how old or sick they are to begin with, and the statisticians could never quite work out how to apportion blame between nature and doctors. Volatility in the numbers was one sign of the trouble. Hospitals’ rankings varied widely from one year to the next based on a handful of random deaths. It was unclear what kind of changes would improve their performance (other than sending their sickest patients to other hospitals). Pretty soon the public simply ignored the rankings.

Even with younger patients, death rates are a poor metric for how doctors do. After all, very few young patients die, and when they do it’s rarely a surprise; most already have metastatic cancer or horrendous injuries or the like. What one really wants to know is how we perform in typical circumstances. After I’ve done an appendectomy, how long does it take for my patients to fully recover? After I’ve taken out a thyroid cancer, how often do my patients have serious avoidable complications? How do my results compare with those of other surgeons?

Getting this kind of data can be difficult. Medicine still relies heavily on paper records, so to collect information you have to send people to either scour the charts or track the patients themselves, both of which are expensive and laborious propositions. Recent privacy regulations have made the task still harder. Yet it is being done. The country’s veterans’ hospitals have all now brought in staff who do nothing but record and compare surgeons’ complication rates and death rates. Fourteen teaching hospitals, including my own, have recently joined together to do the same. California, New Jersey, New York, and Pennsylvania have been collecting and reporting data on every cardiac surgeon in their states for several years.

One small field in medicine has been far ahead of most others in measuring the performance of its practitioners: cystic-fibrosis care. For forty years, the Cystic Fibrosis Foundation has gathered detailed data from the country’s cystic-fibrosis treatment centers. It did not begin doing so because it was more enlightened than everyone else. It did so because, in the nineteen-sixties, a pediatrician from Cleveland named LeRoy Matthews was driving people in the field crazy.

Matthews had started a cystic-fibrosis treatment program as a young pulmonary specialist at Babies and Children’s Hospital, in Cleveland, in 1957, and within a few years was claiming to have an annual mortality rate that was less than two per cent. To anyone treating CF at the time, it was a preposterous assertion. National mortality rates for the disease were estimated to be higher than twenty per cent a year, and the average patient died by the age of three. Yet here was Matthews saying that he and his colleagues could stop the disease from doing serious harm for years. “How long [our patients] will live remains to be seen, but I expect most of them to come to my funeral,” he told one conference of physicians.

In 1964, the Cystic Fibrosis Foundation gave a University of Minnesota pediatrician named Warren Warwick a budget of ten thousand dollars to collect reports on every patient treated at the thirty-one CF centers in the United States that year—data that would test Matthews’s claim. Several months later, he had the results: the median estimated age at death for patients in Matthews’s center was twenty-one years, seven times the age of patients treated elsewhere. He had not had a single death among patients younger than six in at least five years.

Unlike pediatricians elsewhere, Matthews viewed CF as a cumulative disease and provided aggressive treatment long before his patients became sick. He made his patients sleep each night in a plastic tent filled with a continuous, aerosolized water mist so dense you could barely see through it. This thinned the tenacious mucus that clogged their airways and enabled them to cough it up. Like British pediatricians, he also had family members clap on the children’s chests daily to help loosen the mucus. After Warwick’s report came out, Matthews’s treatment quickly became the standard in this country. The American Thoracic Society endorsed his approach, and Warwick’s data registry on treatment centers proved to be so useful that the Cystic Fibrosis Foundation has continued it ever since.

Looking at the data over time is both fascinating and disturbing. By 1966, mortality from CF nationally had dropped so much that the average life expectancy of CF patients had already reached ten years. By 1972, it was eighteen years—a rapid and remarkable transformation. At the same time, though, Matthews’s center had got even better. The foundation has never identified individual centers in its data; to insure participation, it has guaranteed anonymity. But Matthews’s center published its results. By the early nineteen-seventies, ninety-five per cent of patients who had gone there before severe lung disease set in were living past their eighteenth birthday. There was a bell curve, and the spread had narrowed a little. Yet every time the average moved up Matthews and a few others somehow managed to stay ahead of the pack. In 2003, life expectancy with CF had risen to thirty-three years nationally, but at the best center it was more than forty-seven. Experts have become as leery of life-expectancy calculations as they are of hospital death rates, but other measures tell the same story. For example, at the median center, lung function for patients with CF—the best predictor of survival—is about three-quarters of what it is for people without CF. At the top centers, the average lung function of patients is indistinguishable from that of children who do not have CF.

What makes the situation especially puzzling is that our system for CF care is far more sophisticated than that for most diseases. The hundred and seventeen CF centers across the country are all ultra-specialized, undergo a rigorous certification process, and have lots of experience in caring for people with CF. They all follow the same detailed guidelines for CF treatment. They all participate in research trials to figure out new and better treatments. You would think, therefore, that their results would be much the same. Yet the differences are enormous. Patients have not known this. So what happens when they find out?

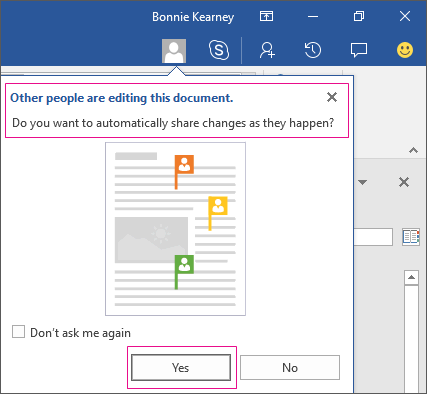

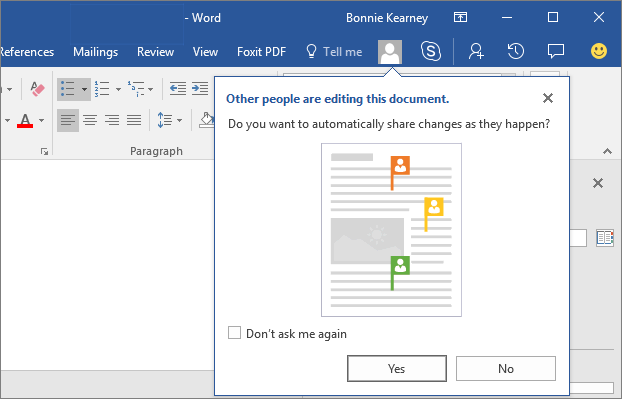

In the winter of 2001, the Pages and twenty other families were invited by their doctors at Cincinnati Children’s to a meeting about the CF program there. Annie was seven years old now, a lively, brown-haired second grader. She was still not growing enough, and a simple cold could be hellish for her, but her lung function had been stable. The families gathered in a large conference room at the hospital. After a brief introduction, the doctors started flashing PowerPoint slides on a screen: here is how the top programs do on nutrition and respiratory performance, and here is how Cincinnati does. It was a kind of experiment in openness. The doctors were nervous. Some were opposed to having the meeting at all. But hospital leaders had insisted on going ahead. The reason was Don Berwick.

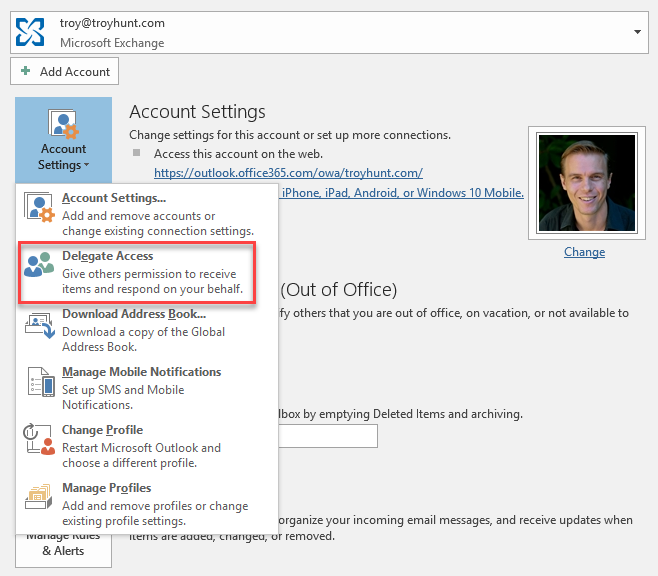

Berwick runs a small, nonprofit organization in Boston called the Institute for Healthcare Improvement. The institute provided multimillion-dollar grants to hospitals that were willing to try his ideas for improving medicine. Cincinnati’s CF program won one of the grants. And among Berwick’s key stipulations was that recipients had to open up their information to their patients—to “go naked,” as one doctor put it.

Berwick, a former pediatrician, is an unusual figure in medicine. In 2002, the industry publication Modern Healthcare listed him as the third most powerful person in American health care. Unlike the others on the list, he is powerful not because of the position he holds. (The Secretary of Health and Human Services, Tommy Thompson, was No. 1, and the head of Medicare and Medicaid was No. 2.) He is powerful because of how he thinks.

In December, 1999, at a health-care conference, Berwick gave a forty-minute speech distilling his ideas about the failings of American health care. Five years on, people are still talking about the speech. The video of it circulated like samizdat. (That was how I saw it: on a grainy, overplayed tape, about a year later.) A booklet with the transcript was sent to thousands of doctors around the country. Berwick is middle-aged, soft-spoken, and unprepossessing, and he knows how to use his apparent ordinariness to his advantage. He began his speech with a gripping story about a 1949 Montana forest fire that engulfed a parachute brigade of firefighters. Panicking, they ran, trying to make it up a seventy-six-per-cent grade and over a crest to safety. But their commander, a man named Wag Dodge, saw that it wasn’t going to work. So he stopped, took out some matches, and set the tall dry grass ahead of him on fire. The new blaze caught and rapidly spread up the slope. He stepped into the middle of the burned-out area it left behind, lay down, and called out to his crew to join him. He had invented what came to be called an “escape fire,” and it later became a standard part of Forest Service fire training. His men, however, either thought he was crazy or never heard his calls, and they ran past him. All but two were caught by the inferno and perished. Inside his escape fire, Dodge survived virtually unharmed.

As Berwick explained, the organization had unravelled. The men had lost their ability to think coherently, to act together, to recognize that a lifesaving idea might be possible. This is what happens to all flawed organizations in a disaster, and, he argued, that’s what is happening in modern health care. To fix medicine, Berwick maintained, we need to do two things: measure ourselves and be more open about what we are doing. This meant routinely comparing the performance of doctors and hospitals, looking at everything from complication rates to how often a drug ordered for a patient is delivered correctly and on time. And, he insisted, hospitals should give patients total access to the information. “ ‘No secrets’ is the new rule in my escape fire,” he said. He argued that openness would drive improvement, if simply through embarrassment. It would make it clear that the well-being and convenience of patients, not doctors, were paramount. It would also serve a fundamental moral good, because people should be able to learn about anything that affects their lives.

Berwick’s institute was given serious money from the Robert Wood Johnson Foundation to offer those who used his ideas. And so the doctors, nurses, and social workers of Cincinnati Children’s stood uncertainly before a crowd of patients’ families in that hospital conference room, told them how poorly the program’s results ranked, and announced a plan for doing better. Surprisingly, not a single family chose to leave the program.

“We thought about it after that meeting,” Ralph Blackwelder told me. He and his wife, Tracey, have eight children, four of whom have CF. “We thought maybe we should move. We could sell my business here and start a business somewhere else. We were thinking, Why would I want my kids to be seen here, with inferior care? I want the very best people to be helping my children.” But he and Tracey were impressed that the team had told them the truth. No one at Cincinnati Children’s had made any excuses, and everyone appeared desperate to do better. The Blackwelders had known these people for years. The program’s nutritionist, Terri Schindler, had a child of her own in the program. Their pulmonary specialist, Barbara Chini, had been smart, attentive, loving—taking their late-night phone calls, seeing the children through terrible crises, instituting new therapies as they became available. The program director, Jim Acton, made a personal promise that there would soon be no better treatment center in the world.

Honor Page was alarmed when she saw the numbers. Like the Blackwelders, the Pages had a close relationship with the team at Children’s, but the news tested their loyalty. Acton announced the formation of several committees that would work to improve the program’s results. Each committee, he said, had to have at least one parent on it. This is unusual; hospitals seldom allow patients and families on internal-review committees. So, rather than walk away, Honor decided to sign up for the committee that would reëxamine the science behind patients’ care.

Her committee was puzzled that the center’s results were not better. Not only had the center followed national guidelines for CF; two of its physicians had helped write them. They wanted to visit the top centers, but no one knew which those were. Although the Cystic Fibrosis Foundation’s annual reports displayed the individual results for each of the country’s hundred and seventeen centers, no names were attached. Doctors put in a call and sent e-mails to the foundation, asking for the names of the top five, but to no avail.

Several months later, in early 2002, Don Berwick visited the Cincinnati program. He was impressed by its seriousness, and by the intense involvement of the families, but he was incredulous when he learned that the committee couldn’t get the names of the top programs from the foundation. He called the foundation’s executive vice-president for medical affairs, Preston Campbell. “I was probably a bit self-righteous,” Berwick says. “I said, ‘How could you do this?’ And he said, ‘You don’t understand our world.’ ” This was the first Campbell had heard about the requests, and he reacted with instinctive caution. The centers, he tried to explain, give their data voluntarily. The reason they have done so for forty years is that they have trusted that it would be kept confidential. Once the centers lost that faith, they might no longer report solid, honest information tracking how different treatments are working, how many patients there are, and how well they do.

Campbell is a deliberate and thoughtful man, a pediatric pulmonologist who has devoted his career to cystic-fibrosis patients. The discussion with Berwick had left him uneasy. The Cystic Fibrosis Foundation had always been dedicated to the value of research; by investing in bench science, it had helped decode the gene for cystic fibrosis, produce two new drugs approved for patients, and generate more than a dozen other drugs that are currently being tested. Its investments in tracking patient care had produced scores of valuable studies. But what do you do when the research shows that patients are getting care of widely different quality?

A couple of weeks after Berwick’s phone call, Campbell released the names of the top five centers to Cincinnati. The episode convinced Campbell and others in the foundation that they needed to join the drive toward greater transparency, rather than just react. The foundation announced a goal of making the outcomes of every center publicly available. But it has yet to come close to doing so. It’s a measure of the discomfort with this issue in the cystic-fibrosis world that Campbell asked me not to print the names of the top five. “We’re not ready,” he says. “It’d be throwing grease on the slope.” So far, only a few of the nation’s CF treatment centers are committed to going public.

Still, after travelling to one of the top five centers for a look, I found I could not avoid naming the center I saw—no obscuring physicians’ identities or glossing over details. There was simply no way to explain what a great center did without the particulars. The people from Cincinnati found this, too. Within months of learning which the top five centers were, they’d spoken to each and then visited what they considered to be the very best one, the Minnesota Cystic Fibrosis Center, at Fairview-University Children’s Hospital, in Minneapolis. I went first to Cincinnati, and then to Minneapolis for comparison.

What I saw in Cincinnati both impressed me and, given its ranking, surprised me. The CF staff was skilled, energetic, and dedicated. They had just completed a flu-vaccination campaign that had reached more than ninety per cent of their patients. Patients were being sent questionnaires before their clinic visits so that the team would be better prepared for the questions they would have and the services (such as X-rays, tests, and specialist consultations) they would need. Before patients went home, the doctors gave them a written summary of their visit and a complete copy of their record, something that I had never thought to do in my own practice.

I joined Cori Daines, one of the seven CF-care specialists, in her clinic one morning. Among the patients we saw was Alyssa. She was fifteen years old, freckled, skinny, with nails painted loud red, straight sandy-blond hair tied in a ponytail, a soda in one hand, legs crossed, foot bouncing constantly. Every few minutes, she gave a short, throaty cough. Her parents sat to one side. All the questions were directed to her. How had she been doing? How was school going? Any breathing difficulties? Trouble keeping up with her calories? Her answers were monosyllabic at first. But Daines had known Alyssa for years, and slowly she opened up. Things had mostly been going all right, she said. She had been sticking with her treatment regimen—twice-a-day manual chest therapy by one of her parents, inhaled medications using a nebulizer immediately afterward, and vitamins. Her lung function had been measured that morning, and it was sixty-seven per cent of normal—slightly down from her usual eighty per cent. Her cough had got a little worse the day before, and this was thought to be the reason for the dip. Daines was concerned about stomach pains that Alyssa had been having for several months. The pains came on unpredictably, Alyssa said—before meals, after meals, in the middle of the night. They were sharp, and persisted for up to a couple of hours. Examinations, tests, and X-rays had found no abnormalities, but she’d stayed home from school for the past five weeks. Her parents, exasperated because she seemed fine most of the time, wondered if the pain could be just in her head. Daines wasn’t sure. She asked a staff nurse to check in with Alyssa at home, arranged for a consultation with a gastroenterologist and with a pain specialist, and scheduled an earlier return visit than the usual three months.

This was, it seemed to me, real medicine: untidy, human, but practiced carefully and conscientiously—as well as anyone could ask for. Then I went to Minneapolis.

The director of Fairview-University Children’s Hospital’s cystic-fibrosis center for almost forty years has been none other than Warren Warwick, the pediatrician who had conducted the study of LeRoy Matthews’s suspiciously high success rate. Ever since then, Warwick has made a study of what it takes to do better than everyone else. The secret, he insists, is simple, and he learned it from Matthews: you do whatever you can to keep your patients’ lungs as open as possible. Patients with CF at Fairview got the same things that patients everywhere did—some nebulized treatments to loosen secretions and unclog passageways (a kind of mist tent in a mouth pipe), antibiotics, and a good thumping on their chests every day. Yet, somehow, everything he did was different.

In the clinic one afternoon, I joined him as he saw a seventeen-year-old high-school senior named Janelle, who had been diagnosed with CF at the age of six and had been under his care ever since. She had come for her routine three-month checkup. She wore dyed-black hair to her shoulder blades, black Avril Lavigne eyeliner, four earrings in each ear, two more in an eyebrow, and a stud in her tongue. Warwick is seventy-six years old, tall, stooped, and frumpy-looking, with a well-worn tweed jacket, liver spots dotting his skin, wispy gray hair—by all appearances, a doddering, mid-century academic. He stood in front of Janelle for a moment, hands on his hips, looking her over, and then he said, “So, Janelle, what have you been doing to make us the best CF program in the country?”

“It’s not easy, you know,” she said.

They bantered. She was doing fine. School was going well. Warwick pulled out her latest lung-function measurements. There’d been a slight dip, as there was with Alyssa. Three months earlier, Janelle had been at a hundred and nine per cent (she was actually doing better than normal); now she was at around ninety per cent. Ninety per cent was still pretty good, and some ups and downs in the numbers are to be expected. But this was not the way Warwick saw the results.

He knitted his eyebrows. “Why did they go down?” he asked.

Janelle shrugged.

Any cough lately? No. Colds? No. Fevers? No. Was she sure she’d been taking her treatments regularly? Yes, of course. Every day? Yes. Did she ever miss treatments? Sure. Everyone does once in a while. How often is once in a while?

Then, slowly, Warwick got a different story out of her: in the past few months, it turned out, she’d barely been taking her treatments at all.

He pressed on. “Why aren’t you taking your treatments?” He appeared neither surprised nor angry. He seemed genuinely curious, as if he’d never run across this interesting situation before.

“I don’t know.”

He kept pushing. “What keeps you from doing your treatments?”

“I don’t know.”

“Up here”—he pointed at his own head—“what’s going on?”

“I don’t know,” she said.

He paused for a moment. And then he began speaking to me, taking a new tack. “The thing about patients with CF is that they’re good scientists,” he said. “They always experiment. We have to help them interpret what they experience as they experiment. So they stop doing their treatments. And what happens? They don’t get sick. Therefore, they conclude, Dr. Warwick is nuts.”

“Let’s look at the numbers,” he said to me, ignoring Janelle. He went to a little blackboard he had on the wall. It appeared to be well used. “A person’s daily risk of getting a bad lung illness with CF is 0.5 per cent.” He wrote the number down. Janelle rolled her eyes. She began tapping her foot. “The daily risk of getting a bad lung illness with CF plus treatment is 0.05 per cent,” he went on, and he wrote that number down. “So when you experiment you’re looking at the difference between a 99.95-per-cent chance of staying well and a 99.5-per-cent chance of staying well. Seems hardly any difference, right? On any given day, you have basically a one-hundred-per-cent chance of being well. But”—he paused and took a step toward me—“it is a big difference.” He chalked out the calculations. “Sum it up over a year, and it is the difference between an eighty-three-per-cent chance of making it through 2004 without getting sick and only a sixteen-per-cent chance.”

He turned to Janelle. “How do you stay well all your life? How do you become a geriatric patient?” he asked her. Her foot finally stopped tapping. “I can’t promise you anything. I can only tell you the odds.”

In this short speech was the core of Warwick’s world view. He believed that excellence came from seeing, on a daily basis, the difference between being 99.5-per-cent successful and being 99.95-per-cent successful. Many activities are like that, of course: catching fly balls, manufacturing microchips, delivering overnight packages. Medicine’s only distinction is that lives are lost in those slim margins.
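The arithmetic behind Warwick's blackboard figures can be checked in a few lines. What follows is only a sketch, not his actual chalk work: it assumes that each day's risk is independent of every other day's, that the risk stays constant, and that the year has 365 days. The function name is made up for illustration.

```python
def chance_of_staying_well(daily_risk: float, days: int = 365) -> float:
    """Chance of getting through `days` days without a bad lung illness.

    Assumption (not stated in the article): each day carries the same
    risk, independent of the others, so the daily chances of staying
    well simply multiply together.
    """
    return (1.0 - daily_risk) ** days


# Daily risks quoted by Warwick, written as fractions rather than per cent.
with_treatment = chance_of_staying_well(0.0005)    # 0.05 per cent a day
without_treatment = chance_of_staying_well(0.005)  # 0.5 per cent a day

print(f"With treatment:    {with_treatment:.0%}")     # prints 83%
print(f"Without treatment: {without_treatment:.0%}")  # prints 16%
```

Under those assumptions, a daily gap of less than half a percentage point compounds into roughly the eighty-three-per-cent and sixteen-per-cent figures he wrote on the board.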

And so he went to work on finding that margin for Janelle. Eventually, he figured out that she had a new boyfriend. She had a new job, too, and was working nights. The boyfriend had his own apartment, and she was either there or at a friend’s house most of the time, so she rarely made it home to take her treatments. At school, new rules required her to go to the school nurse for each dose of medicine during the day. So she skipped going. “It’s such a pain,” she said. He learned that there were some medicines she took and some she didn’t. One she took because it was the only thing that she felt actually made a difference. She took her vitamins, too. (“Why your vitamins?” “Because they’re cool.”) The rest she ignored.

Warwick proposed a deal. Janelle would go home for a breathing treatment every day after school, and get her best friend to hold her to it. She’d also keep key medications in her bag or her pocket at school and take them on her own. (“The nurse won’t let me.” “Don’t tell her,” he said, and deftly turned taking care of herself into an act of rebellion.) So far, Janelle was O.K. with this. But there was one other thing, he said: she’d have to come to the hospital for a few days of therapy to recover the lost ground. She stared at him.

“Today?”

“Yes, today.”

“How about tomorrow?”

“We’ve failed, Janelle,” he said. “It’s important to acknowledge when we’ve failed.”

With that, she began to cry.

Warwick’s combination of focus, aggressiveness, and inventiveness is what makes him extraordinary. He thinks hard about his patients, he pushes them, and he does not hesitate to improvise. Twenty years ago, while he was listening to a church choir and mulling over how he might examine his patients better, he came up with a new stethoscope—a stereo-stethoscope, he calls it. It has two bells dangling from it, and, because of a built-in sound delay, transmits lung sounds in stereo. He had an engineer make it for him. Listening to Janelle with the instrument, he put one bell on the right side of her chest and the other on her left side, and insisted that he could systematically localize how individual lobes of her lungs sounded.

He invented a new cough. It wasn’t enough that his patients actively cough up their sputum. He wanted a deeper, better cough, and later, in his office, Warwick made another patient practice his cough. The patient stretched his arms upward, yawned, pinched his nose, bent down as far as he could, let the pressure build up, and then, straightening, blasted everything out. (“Again!” Warwick encouraged him. “Harder!”)

He produced his most far-reaching invention almost two decades ago—a mechanized, chest-thumping vest for patients to wear. The chief difficulty for people with CF is sticking with the laborious daily regimen of care, particularly the manual chest therapy. It requires another person’s help. It requires conscientiousness, making sure to bang on each of the fourteen locations on a patient’s chest. And it requires consistency, doing this twice a day, every day, year after year. Warwick had become fascinated by studies showing that inflating and deflating a blood-pressure cuff around a dog’s chest could mobilize its lung secretions, and in the mid-nineteen-eighties he created what is now known as the Vest. It looks like a black flak jacket with two vacuum hoses coming out of the sides. These are hooked up to a compressor that shoots quick blasts of air in and out of the vest at high frequencies. (I talked to a patient while he had one of these on. He vibrated like a car on a back road.) Studies eventually showed that Warwick’s device was at least as effective as manual chest therapy, and was used far more consistently. Today, forty-five thousand patients with CF and other lung diseases use the technology.

Like most medical clinics, the Minnesota Cystic Fibrosis Center has several physicians and many more staff members. Warwick established a weekly meeting to review everyone’s care for their patients, and he insists on a degree of uniformity that clinicians usually find intolerable. Some chafe. He can have, as one of the doctors put it, “somewhat of an absence of, um, collegial respect for different care plans.” And although he stepped down as director of the center in 1999, to let a protégé, Carlos Milla, take over, he remains its guiding spirit. He and his colleagues aren’t content if their patients’ lung function is eighty per cent of normal, or even ninety per cent. They aim for a hundred per cent—or better. Almost ten per cent of the children at his center get supplemental feedings through a latex tube surgically inserted into their stomachs, simply because, by Warwick’s standards, they were not gaining enough weight. There’s no published research showing that you need to do this. But not a single child or teen-ager at the center has died in years. Its oldest patient is now sixty-four.

The buzzword for clinicians these days is “evidence-based practice”—good doctors are supposed to follow research findings rather than their own intuition or ad-hoc experimentation. Yet Warwick is almost contemptuous of established findings. National clinical guidelines for care are, he says, “a record of the past, and little more—they should have an expiration date.” I accompanied him as he visited another of his patients, Scott Pieper. When Pieper came to Fairview, at the age of thirty-two, he had lost at least eighty per cent of his lung capacity. He was too weak and short of breath to take a walk, let alone work, and he wasn’t expected to last a year. That was fourteen years ago.

“Some days, I think, This is it—I’m not going to make it,” Pieper told me. “But other times I think, I’m going to make sixty, seventy, maybe more.” For the past several months, Warwick had Pieper trying a new idea—wearing his vest not only for two daily thirty-minute sessions but also while napping for two hours in the middle of the day. Falling asleep in that shuddering thing took some getting used to. But Pieper was soon able to take up bowling, his first regular activity in years. He joined a two-night-a-week league. He couldn’t go four games, and his score always dropped in the third game, but he’d worked his average up to 177. “Any ideas about what we could do so you could last for that extra game, Scott?” Warwick asked. Well, Pieper said, he’d noticed that in the cold—anything below fifty degrees—and when humidity was below fifty per cent, he did better. Warwick suggested doing an extra hour in the vest on warm or humid days and on every game day. Pieper said he’d try it.

We are used to thinking that a doctor’s ability depends mainly on science and skill. The lesson from Minneapolis is that these may be the easiest parts of care. Even doctors with great knowledge and technical skill can have mediocre results; more nebulous factors like aggressiveness and consistency and ingenuity can matter enormously. In Cincinnati and in Minneapolis, the doctors are equally capable and well versed in the data on CF. But if Annie Page—who has had no breathing problems or major setbacks—were in Minneapolis she would almost certainly have had a feeding tube in her stomach and Warwick’s team hounding her to figure out ways to make her breathing even better than normal.

Don Berwick believes that the subtleties of medical decision-making can be identified and learned. The lessons are hidden. But if we open the book on physicians’ results, the lessons will be exposed. And if we are genuinely curious about how the best achieve their results, he believes they will spread.

The Cincinnati CF team has already begun tracking the nutrition and lung function of individual patients the way Warwick does, and is getting more aggressive in improving the results in these areas, too. Yet you have to wonder whether it is possible to replicate people like Warwick, with their intense drive and constant experimenting. In the two years since the Cystic Fibrosis Foundation began bringing together centers willing to share their data, certain patterns have begun to emerge, according to Bruce Marshall, the head of quality improvement for the foundation. All the centers appear to have made significant progress. None, however, have progressed more than centers like Fairview.

“You look at the rates of improvement in different quartiles, and it’s the centers in the top quartile that are improving fastest,” Marshall says. “They are at risk of breaking away.” What the best may have, above all, is a capacity to learn and adapt—and to do so faster than everyone else.

Once we acknowledge that, no matter how much we improve our average, the bell curve isn’t going away, we’re left with all sorts of questions. Will being in the bottom half be used against doctors in lawsuits? Will we be expected to tell our patients how we score? Will our patients leave us? Will those at the bottom be paid less than those at the top? The answer to all these questions is likely yes.

Recently, there has been a lot of discussion, for example, about “paying for quality.” (No one ever says “docking for mediocrity,” but it amounts to the same thing.) Congress has discussed the idea in hearings. Insurers like Aetna and the Blue Cross-Blue Shield companies are introducing it across the country. Already, Medicare has decided not to pay surgeons for intestinal transplantation operations unless they achieve a predefined success rate. Not surprisingly, this makes doctors anxious. I recently sat in on a presentation of the concept to an audience of doctors. By the end, some in the crowd were practically shouting with indignation: We’re going to be paid according to our grades? Who is doing the grading? For God’s sake, how?

We in medicine are not the only ones being graded nowadays. Firemen, C.E.O.s, and salesmen are. Even teachers are being graded, and, in some places, being paid accordingly. Yet we all feel uneasy about being judged by such grades. They never seem to measure the right things. They don’t take into account circumstances beyond our control. They are misused; they are unfair. Still, the simple facts remain: there is a bell curve in all human activities, and the differences you measure usually matter.

I asked Honor Page what she would do if, after all her efforts and the efforts of the doctors and nurses at Cincinnati Children’s Hospital to insure that “there was no place better in the world” to receive cystic-fibrosis care, their comparative performance still rated as resoundingly average.

“I can’t believe that’s possible,” she told me. The staff have worked so hard, she said, that she could not imagine they would fail.

After I pressed her, though, she told me, “I don’t think I’d settle for Cincinnati if it remains just average.” Then she thought about it some more. Would she really move Annie away from people who had been so devoted all these years, just because of the numbers? Well, maybe. But, at the same time, she wanted me to understand that their effort counted for more than she was able to express.

I do not have to consider these matters for very long before I start thinking about where I would stand on a bell curve for the operations I do. I have chosen to specialize (in surgery for endocrine tumors), so I would hope that my statistics prove to be better than those of surgeons who only occasionally do the kind of surgery I do. But am I up in Warwickian territory? Do I have to answer this question?

The hardest question for anyone who takes responsibility for what he or she does is, What if I turn out to be average? If we took all the surgeons at my level of experience, compared our results, and found that I am one of the worst, the answer would be easy: I’d turn in my scalpel. But what if I were a C? Working as I do in a city that’s mobbed with surgeons, how could I justify putting patients under the knife? I could tell myself, Someone’s got to be average. If the bell curve is a fact, then so is the reality that most doctors are going to be average. There is no shame in being one of them, right?

Except, of course, there is. Somehow, what troubles people isn’t so much being average as settling for it. Everyone knows that averageness is, for most of us, our fate. And in certain matters—looks, money, tennis—we would do well to accept this. But in your surgeon, your child’s pediatrician, your police department, your local high school? When the stakes are our lives and the lives of our children, we expect averageness to be resisted. And so I push to make myself the best. If I’m not the best already, I believe wholeheartedly that I will be. And you expect that of me, too. Whatever the next round of numbers may say. ♦

by Yuval Harari. The basic thesis of the book is that humans require ‘collective fictions’ so that we can collaborate in larger numbers than the 150 or so our brains are big enough to cope with by default. Collective fictions are things that don’t describe solid objects in the real world we can see and touch. Things like religions, nationalism, liberal democracy, or Popperian falsifiability in science. Things that don’t exist, but when we act like they do, we easily forget that they don’t.


The detection of malicious software (malware) is an increasingly important cyber security problem for all of society. Single incidences of malware can cause millions of dollars in damage. The current generation of anti-virus and malware detection products typically use a signature-based approach, where a set of manually crafted rules attempt to identify different groups of known malware types. These rules are generally specific and brittle, and usually unable to recognize new malware even if it uses the same functionality.
