It is sometimes said that the odds you could get on Leicester winning the Premiership in 2016 made it the single most mispriced bet in the history of bookmaking: 5000 to 1. To put that in perspective, the odds on the Loch Ness monster being found are a bizarrely low 500 to 1. (Another 5000 to 1 bet offered by William Hill is that Barack Obama will play cricket for England. I’d advise against that punt.) Nonetheless, 5000 to 1 pales in comparison with the odds you would have got in 2008 on a future world in which Donald Trump was president, Theresa May was prime minister, Britain had voted to leave the European Union, and Jeremy Corbyn was leader of the Labour Party – which to many close observers of Labour politics is actually the least likely thing on that list. The common factor explaining all these phenomena is, I would argue, the credit crunch and, especially, the Great Recession that followed.

Perhaps the best place to begin is with the question, what happened? Answering it requires a certain amount of imaginative work, because although ten years ago seems close, some fundamentals in the way we perceive the world have shifted. The most important component of the intellectual landscape of 2008 was a widespread feeling among elites that things were working fine. Not for everyone and not everywhere, but in aggregate: more people were doing better than were doing worse. Both the rich world and the poor world were measurably, statistically, getting richer. Most indices of quality of life, perhaps the most important being longevity, were improving. We were living through the Great Moderation, in which policymakers had finally worked out a way of growing economies at a rate that didn’t lead to overheating, and didn’t therefore result in the cycles of boom and bust which had been the defining feature of capitalism since the Industrial Revolution. Critics of capitalism had long argued that it had an inherent tendency towards such cycles – this was a central aspect of Marx’s critique – but policymakers now claimed to have fixed it. In the words of Gordon Brown: ‘We set about establishing a new economic framework to secure long-term economic stability and put an end to the damaging cycle of boom and bust.’ That claim was made when Labour first got into office in 1997, and Brown was still repeating it in his last budget as chancellor ten years later, when he said: ‘We will never return to the old boom and bust.’

I cite this not to pick on Gordon Brown, but because this view was widespread among Western policymakers. The intellectual framework for this overconfidence was derived from contemporary trends in macroeconomics. Not to put too fine a point on it, macroeconomists thought they knew everything. Or maybe not everything, just the most important thing. In a presidential address to the American Economic Association in 2003, Robert Lucas, Nobel prizewinner and one of the most prominent macroeconomists in the world, put it plainly:

Macroeconomics was born as a distinct field in the 1940s, as a part of the intellectual response to the Great Depression. The term then referred to the body of knowledge and expertise that we hoped would prevent the recurrence of that economic disaster. My thesis in this lecture is that macroeconomics in this original sense has succeeded: its central problem of depression prevention has been solved, for all practical purposes, and has in fact been solved for many decades.

Solved. For many decades. That was the climate of intellectual overconfidence in which the crisis began. It’s been said that the four most expensive words in the world are: ‘This time it’s different.’ We can ignore the lessons of history and indeed of common sense because there’s a new paradigm, a new set of tools and techniques, a new Great Moderation. But one of the things that happens in economic good times – a very clear lesson from history which is repeatedly ignored – is that money gets too cheap. Too much credit enters the system and there is too much money looking for investment opportunities. In the modern world that money is hotter – more rapidly mobile and more globalised – than ever before. Ten and a bit years ago, a lot of that money was invested in a sexy new opportunity created by clever financial engineering, which magically created high-yielding but completely safe investments from pools of risky mortgages. Poor people with patchy credit histories who had never owned property were given expensive mortgages to allow them to buy their first homes, and those mortgages were then bundled into securities which were sold to eager investors around the world, with the guarantee that ingenious financial engineering had achieved the magic trick of high yields and complete safety. That, in an investment context, is like claiming to have invented an antigravity device or a perpetual motion machine, since it is an iron law of investment that risks are correlated with returns. The only way you can earn more is by risking more. But ‘this time it’s different.’

The thing about debt and credit is that most of the time, in conventional economic thinking, they don’t present a problem. Every credit is a debt, every debt is a credit, assets and liabilities always match, and the system always balances to zero, so it doesn’t really matter how big those numbers are or how much credit or debt there is in the system: the net is always the same. But knowing that is a bit like climbing up a very, very long ladder and knowing that it’s a good idea not to look down. Sooner or later you inevitably do, and realise how exposed you are, and start feeling different. That’s what happened in the run-up to the credit crunch: people suddenly started to wonder whether these assets, these pools of mortgages (which by this point had been sold and resold all around the financial system so that nobody was sure who actually owned them, like a toxic version of pass the parcel in which nobody knows who is holding the parcel or what’s in it), were worth what they were supposed to be worth. They noticed just how high up the ladder they had climbed. So they started descending the ladder. They started withdrawing credit. What happened next was the first bank run in the UK since the 19th century: the run on Northern Rock in September 2007, followed by its collapse and subsequent nationalisation. Northern Rock had an unusual business model in that instead of relying on customer deposits to meet its operational needs it borrowed money short-term on the financial markets. When credit became harder to come by, that source of funding suddenly wasn’t there any more. Then, just as suddenly, Northern Rock wasn’t there any more either.

That was the first symptom of the global crisis, which reached the next level with the very similar collapse of Bear Stearns in March 2008, followed by the crash that really did take the entire global financial system to the brink, the implosion of Lehman Brothers on 15 September 2008. Because Lehmans was a clearing house and repository for many thousands of financial instruments from around the system, suddenly nobody knew who owed what to whom, who was exposed to what risk, and therefore which institutions were likely to go next. And that is when the global supply of credit dried up. I spoke to bankers at the time who said that what happened was supposed to be impossible: it was like the tide going out everywhere on Earth simultaneously. People had lived through crises before – the sudden crash of October 1987, the emerging markets crises and the Russian crisis of the 1990s, the dotcom bubble – but what happened in those cases was that capital fled from one place to another. No one had ever lived through, and no one thought possible, a situation where all the credit simultaneously disappeared from everywhere and the entire system teetered on the brink. The first weekend of October 2008 was a point when people at the top of the global financial system genuinely thought, in the words of George W. Bush, ‘This sucker could go down.’ RBS, at one point the biggest bank in the world according to the size of its balance sheet, was within hours of collapsing. And by collapsing I mean cashpoint machines would have stopped working, and insolvencies would have spread from RBS to other banks – and no one alive knows what that would have looked like or how it would have ended.

The immediate economic consequence was the bailout of the banks. I’m not sure if it’s philosophically possible for an action to be both necessary and a disaster, but that in essence is what the bailouts were. They were necessary, I thought at the time and still think, because this really was a moment of existential crisis for the financial system, and we don’t know what the consequences would have been for our societies if everything had imploded. But they turned into a disaster we are still living through. The first and probably most consequential result of the bailouts was that governments across the developed world decided for political reasons that the only way to restore order to their finances was to resort to austerity measures. The financial crisis led to a contraction of credit, which in turn led to economic shrinkage, which in turn led to declining tax receipts for governments, which were suddenly looking at sharply increasing annual deficits and dramatically increasing levels of overall government debt. So now we had austerity, which meant that life got harder for a lot of people, but – this is where the negative consequences of the bailout start to be really apparent – life did not get harder for banks and for the financial system. In the popular imagination, the people who caused the crisis got away with it scot-free, and, as what scientists call a first-order approximation, that’s about right.

In addition, there were no successful prosecutions of anyone at the higher levels of the financial system. Contrast that with the savings and loan scandal of the 1980s, basically a gigantic bust of the US equivalent of mortgage companies, in which 1100 executives were prosecuted. What had changed since then was the increasing hegemony of finance in the political system, which brought the ability quite simply to rewrite the rules of what is and isn’t legal. One example I saw when I was researching Whoops!, my book on the crisis, was in Baltimore. There people going to buy houses for the first time would turn up at the mortgage company’s office and be told: ‘Look, I’m really sorry, I know we said we’d be able to get you a loan at 6 per cent, but something went wrong at the bank, so the number on here is 12 per cent. But listen, I know you want to come out of here owning a house today – that’s right isn’t it, you do want to leave this room owning your own house for the first time? – so what I suggest is, since there’s a lot of paperwork to get through, you sign it, and we sort out this issue with the loan later, it won’t be a problem.’ That is a flat lie: the loan was fixed and unchangeable and the contract legally binding, but under Maryland law, the principle is caveat emptor, so the mortgage broker can lie as much as they want, since the onus is on the other party to protect their own interests. The result, just in Baltimore, was tens of thousands of people losing their homes. The charity I talked to had no idea where many of those people were: some of them were sleeping in their cars, some of them had gone back to wherever they came from outside the city, others had just vanished. And all that predatory lending was entirely legal.

That impunity, the sense that these things had consequences for us but not for the people who caused the crisis, has been central to the story of the last ten years. It has also been central to the public anger generated by the crash and the Great Recession. In the summer of 2009, when I was writing Whoops!, I remember thinking that a huge storm of rage was coming towards governments once the public realised what a giant hole had been dug for them by the financial system in collusion with their leaders. Then the book came out, and I was giving talks about it all over the place from its publication in January 2010 through the spring and summer, and there was this mysterious lack of rage. People seemed numb and incredulous but not yet angry.

In July 2010 I was in Galway for the arts festival, giving a talk in a room where, I later learned, a former taoiseach was famous for accepting envelopes full of cash during Galway’s racing week. By that point in the publication process you normally have your talk down to a fine art, or as fine as it’s going to get, and my spiel consisted of basically comic points about how reckless and foolish the financial system had been. Normally when I gave the talk people would laugh at the various punchlines, but now there was complete silence in the room – the jokes weren’t landing at all. And yet I could tell people were actually listening. It felt strange. Then the questions began, and all of them were about blame, and I realised everyone in the room was furious. All the questions were about whose fault the crash was, who should be punished, how it was possible that this could have happened and how outrageous it was that the people responsible had got away with it and the rest of society was paying the consequences. I remember thinking that the difference between Ireland and the UK is just that they’re a few months ahead. This is what’s coming.

*

By now we’re eight years into that public anger. Remember that remark made by Robert Lucas, the macroeconomist, that the central problem of depression prevention had been solved? How’s that been working out? How it’s been working out here in the UK is the longest period of declining real incomes in recorded economic history. ‘Recorded economic history’ means as far back as current techniques can reach, which is back to the end of the Napoleonic Wars. Worse than the decades that followed the Napoleonic Wars, worse than the crises that followed them, worse than the financial crises that inspired Marx, worse than the Depression, worse than both world wars. That is a truly stupendous statistic and if you knew nothing about the economy, sociology or politics of a country, and were told that single fact about it – that real incomes had been falling for the longest period ever – you would expect serious convulsions in its national life.

Just as grim, life expectancy has stalled and has even begun to fall, which is all the more shocking because it is entirely unexpected. According to the Continuous Mortality Investigation, life expectancy for a 45-year-old man has declined from an anticipated 43 years of extra life to 42, and for a 45-year-old woman from 45.1 more years to 44. There’s a decline for pensioners too. We have gained ten years of extra life since 1960, and we’ve just given one of them back. These data are new and are not fully understood yet, but it seems pretty clear that the decline is linked to austerity, perhaps not so much to the squeeze on NHS spending – though the longest spending squeeze, adjusted for inflation and demographics, since the foundation of the NHS has obviously had some effect – but to the impacts of austerity on social services, which in the case of such services as Meals on Wheels and house visits function as an early warning system for illness among the elderly. As a result, mortality rates are up, an increase that began in 2011 after decades in which they had fallen under governments of both parties, and it’s this that is causing the decline in life expectancy.

Life expectancy in the United States is also falling, with the first drop in two consecutive years since 1962-63; infant mortality, the generally accepted benchmark for a society’s development, is rising too. The principal driver of the decline in life expectancy seems to be the opioid epidemic, which took 64,000 lives in 2016, many more than guns (39,000), cars (40,000) or breast cancer (41,000). At the same time, the income of the typical worker, the real median hourly income, is about the same as it was in 1971. Anyone time-travelling back to the early 1970s would have great difficulty explaining why the richest and most powerful country in the history of the world was about to go through four and a half decades without pandemic, countrywide disaster or world war, accompanied by unprecedented growth in corporate profits, and yet ordinary people’s pay would stay the same. I think people would react with amazement and want to know why. Things have been getting consistently better for the ordinary worker, they would say, so why is that process about to stop?

It would be easier to accept all this, philosophically anyway, if since the crash we had made some progress towards reform in the operation of the banking system and international finance. But there has been very little. Yes, there have been some changes at the margin, to things such as the way bonuses are paid. Bonuses were a tremendous flashpoint in the aftermath of the crash, because it was so clear that a) bankers were insanely overpaid; and b) they had incentives for taking risks that paid them huge bonuses when the bets succeeded, but in the event they went wrong, all the losses were paid for by us. Privatised gains, socialised losses. The bonus system has been addressed legally, with new legislation enforcing delays before bonuses can be paid out, and allowing them to be clawed back if things go wrong. But overall remuneration in finance has not gone down. It’s an example of a change that isn’t really a change. The bonus pool in UK finance last year was £15 billion, the largest since 2007.

It’s not that there haven’t been changes. It’s just that it isn’t clear how much of a change the changes are. Bonuses are one example. Another concerns the ring-fencing being introduced in the UK to separate investment banking from retail banking – to separate banks’ casino-like activities on international markets from their piggy-bank-like activities in the real economy. In the aftermath of the crisis there were demands for these two functions to be completely separated, as historically they have been in many countries at many times. The banks fought back hard and as usual got what they wanted. Instead of separation we have a complicated, unwieldy and highly technical process of internal ring-fencing inside our huge banks. When I say huge, I’m referring to the fact that our four biggest banks have balance sheets that, combined, are two and a half times the size of the UK economy. Mark Carney has pointed out that our financial sector is currently ten times the size of our GDP, and that over the next couple of decades it could grow to 15 or even 20 times GDP.

The ring-fence has been under preparation for several years and comes into effect from 2019. It increases the complexity of the system, and a very clear lesson from history is that complexity allows opportunities to game the rules and exploit loopholes. One way of describing modern finance is that it’s a mechanism for enabling very clever, very well paid, very highly incentivised people to spend all day every day thinking of ways to get around rules. Complexity works to their advantage. As for the question of whether ring-fencing makes the financial system safer, the answer again is that we don’t really know. As the financial historian David Marsh observed, the only way you can properly test a firewall is by having a fire.

I think the ring-fence is an opportunity missed. That goes for a lot of the small complicated rules designed to make banks and the financial system safer. Bankers complain about them a lot, which is probably a good sign from the point of view of the public, but it’s not clear they will actually make the system safer compared with the much simpler and cruder mechanism of increasing the amount of equity banks have to hold. At the moment banks operate almost entirely with leverage, meaning borrowed money. When they lose money, they mainly lose other people’s money. An increase in the statutory requirement for bank equity, and the resulting reduction in the amount of leverage they are allowed to employ, would make banks safe through a simple act of brute force: they’d have to lose a lot more of their own money before they started to lose any of anyone else’s. The new rules have made the banks hold more equity, but the systems for calculating how much are notoriously complex and in any case the improvement is a matter of degree, not an order of magnitude. The plan for increased equity has been most rigorously advocated by the Stanford economist Anat Admati, and the banks absolutely hate the idea. It would make them less profitable, which in turn means bankers would be paid much less, and the system would with absolute certainty be much safer for the public. But that is not the direction we’ve taken, especially in the US, where Trump and the Republican Congress are tearing up all the post-crash legislation.

In some areas, it’s not so much a matter of non-change change as of good old-fashioned no change. Take the notorious problem of banks that are too big to fail. That issue is unambiguously more serious than it was before the last crash. The failing banks were eaten by surviving banks, with the result that the surviving banks are now bigger, and the too big to fail problem is worse. Banks have been forced by statute to bring in ‘living wills’, as they’re known, to arrange for their own bankruptcy in the event of their becoming insolvent in the way they did ten years ago. I don’t believe those plans would ever be allowed to work. These banks have balance sheets that are in some cases as big as the host country’s GDP – HSBC in the UK, or the seriously struggling Deutsche Bank in Germany – and the system simply could not sustain a bankruptcy of that size. Germany is more likely to introduce compulsory public nudity than it is to let Deutsche Bank fail.

In other areas, we’re in the territory that Donald Rumsfeld called known unknowns. The main example is shadow banking. Shadow banking is all the stuff banks do – such as lending money, taking deposits, transferring money, executing payments, extending credit – except done by institutions that don’t have a formal banking licence. Think of credit card companies, insurance companies, companies that let you send money overseas, PayPal. There are also huge institutions inside finance that lend money back and forwards to keep banks supplied with short-term funding, in a process known as the repo market. All these activities taken together make up the shadow banking system. The thing about this system is that it’s much less regulated than formal banks, and nobody is certain how big it is. The latest report from the Financial Stability Board, an international body responsible for doing what it says on the tin, estimates the size of the shadow system at $160 trillion. That’s twice the GDP of Earth. It’s bigger than the entire commercial banking sector. Shadow banking was one of the main routes for spreading and magnifying the crash ten years ago, and it is at least as big and as opaque as it was then.

This brings me to the main and I think least understood point about contemporary financial markets. The mental image of a market is misleading: the metaphor implies a single place where people meet to trade and where the transactions are open and transparent and under the aegis of a central authority. That authority can be formal and governmental or it can just be the relevant collective norms. There are inevitably some asymmetries of information – usually sellers know more than buyers – but basically what you see is what you get, and there is some form of supervision at work. Financial markets today are not like that. They aren’t gathered together in one place. In many instances, a market is just a series of cables running into a data centre, with another series of cables, belonging to a hedge fund specialising in high-frequency trading, running into the same computers, and ‘front-running’ trades by profiting from other people’s activities in the market, taking advantage of time differences measured in millionths of a second.[*] That howling, shrieking, cacophonous pit in which traders look up at screens and shout prices at each other is a stage set (literally so: the New York Stock Exchange keeps one going simply for the visuals). The real action is all in data centres and couldn’t be less like a market in any generally understood sense. In many areas, the overwhelming majority of transactions are over the counter (OTC), meaning that they are directly executed between interested parties, and not only is there no grown-up supervision, in the sense of an agency overseeing the transaction, but it is actually the case that nobody else knows what has been transacted. The OTC market in financial derivatives, for instance, is another known unknown: we can guess at its size but nobody really knows. The Bank for International Settlements, the Basel-based central bank of central banks, gives a twice-yearly estimate of the OTC market. The most recent number is $532 trillion.

*

So that’s where we are with markets. Non-change change, in the form of bonus regulation and ring-fencing; no change or change for the worse in the case of complexity and shadow banking and too big to fail; and no overall reduction in the level of risk present in the system.

We are back with the issue of impunity. For the people inside the system that caused a decade of misery, no change. For everyone else, a decade of misery, magnified by austerity policies. Note that austerity policies were not recommended by mainstream macroeconomists, who predicted that they would lead to flat or shrinking GDP, as indeed they did. Instead politicians took the crisis as a political inflection point – a phrase used to me in private by a Tory in 2009, before the public realised what was about to hit them – and seized the opportunity to contract government spending and shrink the state.

The burden of austerity falls much more on the poor than on the better-off, and in any case it is a heavily loaded term, taking a personal virtue and casting it as an abstract principle used to direct state spending. For the top 1 per cent of taxpayers, who pay 27 per cent of all income tax, austerity means you end up better off, because you pay less tax. You save so much on your tax bill you can switch from prosecco to champagne, or if you’re already drinking champagne, you switch to fancier champagne. For those living in precarious circumstances, tiny changes in state spending can have direct and significant personal consequences. In the UK, these have been exacerbated by policies such as benefit sanctions, in which vulnerable people have their benefits withheld as a form of punishment – a self-defeating policy whose cruelty is hard to overstate.

We thus arrive at the topic that more than any other sums up the decade since the crash: inequality. For students of the subject there is something a little crude about referring to inequality as if it were only one thing. Inequality of income is not the same thing as inequality of wealth, which is not the same as inequality of opportunity, which is not the same as inequality of outcome, which is not the same as inequality of health or inequality of access to power. In a way, though, the popular use of inequality, although it may not be accurate in philosophical or political science terms, is the most relevant when we think about the last ten years, because when people complain about inequality they are complaining about all the above: all the different subtypes of inequality compacted together.

The sense that there are different rules for insiders, the one per cent, is global. Everywhere you go people are preoccupied by this widening crevasse between the people at the top of the system and everyone else. It’s possible of course that this is a trick of perspective or a phenomenon of raised consciousness more than it is a new reality: that this is what our societies have always been like, that elites have always lived in a fundamentally different reality, it’s just that now, after the last ten difficult years, we are seeing it more clearly. I suspect that’s the analysis Marx would have given.

The one per cent issue is the same everywhere, more or less, but the global phenomenon of inequality has different local flavours. In China the divide is between city and country, between the prosperous new middle class and the migrant workers who live brutally difficult lives. In much of Europe there are significant divides between older insiders protected by generous social provision and guaranteed secure employment, and a younger precariat which faces a far more uncertain future. In the US there is enormous anger at oblivious, entitled, seemingly invulnerable financial and technological elites getting ever richer as ordinary living standards stay flat in absolute terms, and in relative terms, dramatically decline. And everywhere, more than ever before in human history, people are surrounded by images of a life they are told they should want, yet know they can’t afford.

A third driver of increased inequality, alongside austerity and impunity for financial elites, has been monetary policy in the form of quantitative easing. QE, as it’s known, is the state, acting through its central bank, buying back its own debt with newly minted electronic money. It’s as if you could log into your online bank account and type in a new balance and then use that to pay off your credit card bill. Central banks have used this technique to buy back their governments’ bonds. The idea was that the previous bondholders would suddenly have all this cash on their balance sheets, and would feel obliged to put it to work, so they would spend it and then someone else would have the cash and they would spend it. As Merryn Somerset Webb recently wrote in the Financial Times, the cash is like a hot potato that is passed back and forwards between rich individuals and institutions, generating economic activity in the process.

The problem concerns what people do with that hot potato cash. What they tend to do is buy assets. They buy houses and equities and sometimes they buy shiny toys like yachts and paintings. What happens when people buy things? Prices go up. So the prices of houses and equities have been sustained, kept aloft, by quantitative easing, which is great news for people who own things like houses and equities, but less good news for people who don’t, because from their point of view, these things will become ever more unaffordable. A recent analysis by the Bank of England showed that QE had kept house prices 22 per cent higher than they would otherwise have been, and equity prices 25 per cent higher. (The analysis used data up to 2014, so both those numbers will have gone up.) We’re back to that question of whether something could be necessary and a disaster at the same time, because QE may well have played an important role in keeping the economy out of a more severe depression, but it has also been a direct driver of inequality, in particular of the housing crisis, which is one of the defining features of contemporary Britain, especially for the young.

Napoleon said something interesting: that to understand a person, you must understand what the world looked like when he was twenty. I think there’s a lot in that. When I was twenty, it was 1982, right in the middle of the Cold War and the Thatcher/Reagan years. Interest rates were well into double digits, inflation was over 8 per cent, there were three million unemployed, and we thought the world might end in nuclear holocaust at any moment. At the same time, the underlying premise of the case for capitalism was that it was morally superior to the alternatives. Mrs Thatcher was a philosophical conservative for whom the ideas of Hayek and Friedman were paramount: capitalism was practically superior to the alternatives, but that was intimately tied to the fact that it was morally better. It’s a claim that ultimately goes back to Adam Smith in the third book of The Wealth of Nations. In one sense it is the climactic claim of his whole argument: ‘Commerce and manufactures gradually introduced order and good government, and with them, the liberty and security of individuals, among the inhabitants of the country, who had before lived almost in a continual state of war with their neighbours and of servile dependency on their superiors. This, though that has been the least observed, is by far the most important of all their effects.’ So according to the godfather of economics, ‘by far the most important of all the effects’ of commerce is its benign impact on wider society.

I know that the plural of anecdote is not data, but I feel that there has been a shift here. In recent decades, elites seem to have moved from defending capitalism on moral grounds to defending it on the grounds of realism. They say: this is just the way the world works. This is the reality of modern markets. We have to have a competitive economy. We are competing with China, we are competing with India, we have hungry rivals and we have to be realistic about how hard we have to work, how well we can pay ourselves, how lavish we can afford our welfare states to be, and face facts about what’s going to happen to the jobs that are currently done by a local workforce but could be outsourced to a cheaper international one. These are not moral justifications. The ethical defence of capitalism is an important thing to have inadvertently conceded. The moral basis of a society, its sense of its own ethical identity, can’t just be: ‘This is the way the world is, deal with it.’

I notice, talking to younger people, people who hit that Napoleonic moment of turning twenty since the crisis, that the idea of capitalism being thought of as morally superior elicits something between an eye roll and a hollow laugh. Their view of capitalism has been formed by austerity, increasing inequality, the impunity and imperviousness of finance and big technology companies, and the widespread spectacle of increasing corporate profits and a rocketing stock market combined with declining real pay and a huge growth in the new phenomenon of in-work poverty. That last is very important. For decades, the basic promise was that if you didn’t work the state would support you, but you would be poor. If you worked, you wouldn’t be. That’s no longer true: most people on benefits are in work too, it’s just that the work doesn’t pay enough to live on. That’s a fundamental breach of what used to be the social contract. So is the prospect that young people’s living standards are likely to be lower than their parents’. That idea stings just as much for parents as it does for their children.

This sense of a system gone wrong has led to political crises all across the developed world. From a personal point of view, looking back over the last ten years, some of this I saw coming and some of it I didn’t. I predicted the anger and the decade of economic hard times, and in general I thought life was going to become tougher. I thought it might well lead to a further crisis. But I was wrong about the nature of the crisis. I thought it was likely to be financial rather than political, in the first instance: a second financial crisis which fed into politics. Instead what has happened is Brexit, Trump, and variously startling electoral results from Italy, Hungary, Poland, the Czech Republic and elsewhere.

Part of what happened can be summed up by a British Telecom ad from the 1980s. Maureen Lipman rings up her grandson to congratulate him on his exam results. She has baked a cake and is decorating it, but freezes when he tells her: ‘I failed.’ She asks him what he failed and he says everything: maths, English, physics, geography, German, woodwork, art. But then he lets slip that he passed pottery and sociology and Lipman says: ‘He gets an ology and he says he’s failed? You get an ology, you’re a scientist.’

I suspect I got the wrong ology. Sociology would have been a better social science than economics for understanding the last ten years. Three dominoes fell. The initial event was economic. The meaning of it was experienced in ways best interpreted by sociology. The consequences were acted out through politics. From a sociological point of view, the crisis exacerbated faultlines running through contemporary societies, faultlines of city and country, old and young, cosmopolitan and nationalist, insider and outsider. As a direct result we have seen a sharp rise in populism across the developed world and a marked collapse in support for established parties, in particular those of the centre-left.

Electorates turned with special venom against parties offering what was in effect a milder version of the economic consensus: free-market capitalism with a softer edge. It’s as if the voters are saying to those parties: what actually are you for? It’s not a bad question, and it’s one that everyone from the Labour Party to the SPD in Germany to the socialists in France to the Democrats in the US is struggling to answer. It’s worth noticing another phenomenon too: electorates are turning to very young leaders – a 43-year-old in Canada, a 37-year-old in New Zealand, a 39-year-old in France, a 31-year-old in Austria. They have ideological differences, but they have in common that they were all in metaphorical nappies when the crisis and the Great Recession hit, so they definitely can’t be blamed. Both France and the US elected presidents who had never run for office before.

In conclusion, it’s all doom and gloom. But wait! From another perspective, the story of the last ten years has been one of huge success. At the time of the crash, 19 per cent of the world’s population were living in what the UN defines as absolute poverty, meaning on less than $1.90 a day. Today, that number is below 9 per cent. In other words, the proportion of people living in absolute poverty has more than halved, while rich-world living standards have flatlined or declined. A defender of capitalism could point to that statistic and say it provides a full answer to the question of whether capitalism can still make moral claims. The last decade has seen hundreds of millions of people raised out of absolute poverty, continuing the global improvement for the very poor which, both as a proportion and as an absolute number, is an unprecedented economic achievement.

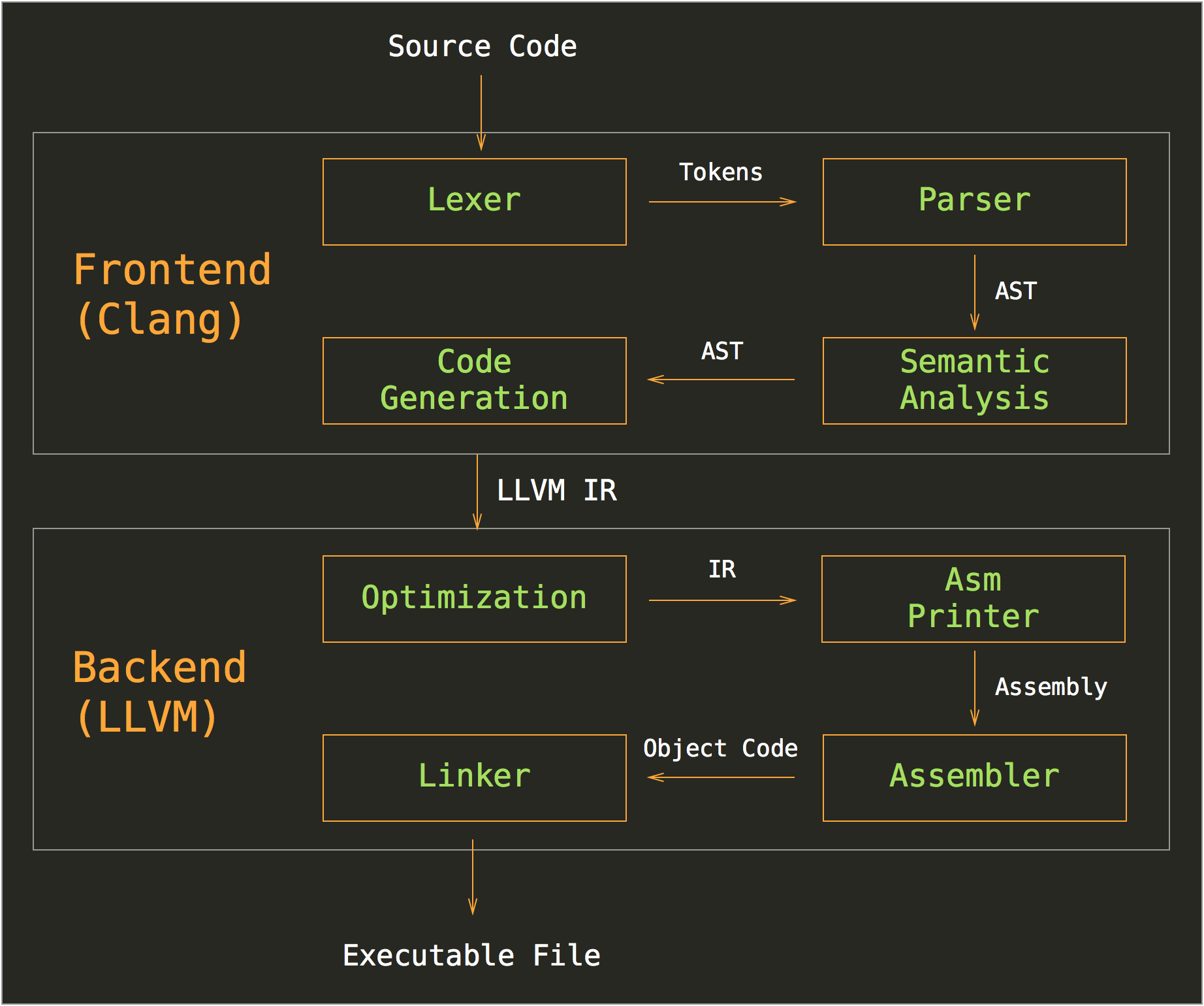


The economist who has done more in this field than anyone else, Branko Milanović at the City University of New York, has a wonderful graph that illustrates the point about the relative outcomes for life in the developing and developed world. The graph is the centrepiece of his brilliant book Global Inequality: A New Approach for the Age of Globalisation.[†] It’s called the ‘elephant curve’ because it looks like an elephant, going up from left to right like the elephant’s back, then sloping down as it gets towards its face, then going sharply upwards again along its raised trunk. Most of the people between points A and B are the working classes and middle classes of the developed world. In other words, the global poor have been getting consistently better off over the last decades, whereas the old global middle class, most of whom are in the developed world, have seen relative decline. The elite at the top have of course been doing better than ever.

What if the governments of the developed world turned to their electorates and explicitly said this was the deal? The pitch might go something like this: we’re living in a competitive global system, there are billions of desperately poor people in the world, and in order for their standards of living to improve, ours will have to decline in relative terms. Perhaps we should accept that on moral grounds: we’ve been rich enough for long enough to be able to share some of the proceeds of prosperity with our brothers and sisters. I think I know what the answer would be. The answer would be OK, fine, but get rid of the trunk. Because if we are experiencing a relative decline why shouldn’t the rich – why shouldn’t the one per cent – be slightly worse off in the same way that we are slightly worse off?

The frustrating thing is that the policy implications of this idea are pretty clear. In the developed world, we need policies that reduce the inequality at the top. It is sometimes said these are very difficult policies to devise. I’m not sure that’s true. What we’re really talking about is a degree of redistribution similar to that experienced in the decades after the Second World War, combined with policies that prevent the international rich person’s sport of hiding assets from taxation. This was one of the focuses of Thomas Piketty’s Capital, and with good reason. I mentioned earlier that assets and liabilities always balance – that’s the way they are designed, as accounting equalities. But when we come to global wealth, this isn’t true. Studies of the global balance sheet consistently show more liabilities than assets. The only way that would make sense is if the world were in debt to some external agency, such as Venusians or the Emperor Palpatine. Since it isn’t, a simple question arises: where’s all the fucking money? Piketty’s student Gabriel Zucman wrote a powerful book, The Hidden Wealth of Nations (2015), which supplies the answer: it’s hidden by rich people in tax havens. According to calculations that Zucman himself says are conservative, the missing money amounts to $7.6 trillion, about 8 per cent of the world’s household financial wealth. It is as if, when it comes to the question of paying their taxes, the rich have seceded from the rest of humanity.

A crackdown on international evasion is difficult because it requires international co-ordination, but it is by no means impossible. Effective legal instruments to prevent offshore tax evasion are incredibly simple and could be enacted overnight, as the United States has just shown with its crackdown on oligarchs linked to Putin’s regime. All you have to do is make it illegal for banks to carry out transactions with territories that don’t comply with rules on tax transparency. That closes them down instantly. Then you have a transparent register of assets, a crackdown on trust structures (which incidentally can’t be set up in France, and the French economy functions fine without them), and job done. Politically hard but in practical terms fairly straightforward. Also politically hard, and less straightforward in practice, are the actions needed to address the sections of society that lose out from automation and globalisation. Milanović’s preferred focus is on equalising ‘endowments’, an economic term which in this context implies an emphasis on equalising assets and education. If changes benefit an economy as a whole, they need to benefit everyone in the economy – which by implication directs government towards policies focused on education, lifelong training, and redistribution through the tax and benefits system. The alternative is to carry on as we have been doing and just let divides widen until societies fall apart.