PostgreSQL contains a slew of great features. Many of them are very well known. Others can be incredibly useful but are not as widely appreciated. Here are our top picks for great PostgreSQL features you may not have taken a look at but really should, since they can help you get code into production faster, make ops easier and generally get things done with less code and sweat.

Pub/Sub Notifications

PostgreSQL comes with a simple non-durable topic-based publish-subscribe notification system. It’s no Kafka, but the features do support common use cases.

Messages on a specific topic can be broadcast to all connected subscribers who are listening for that topic. The messages are pushed by the Postgres server to the listening clients. Polling is not required, but your database driver should support delivery of notifications to the application asynchronously.

The notification consists of a topic name and a payload (upto about 8000 characters). The payload would typically be a JSON string, but of course it can be anything. You can send a notification using the NOTIFY command:

NOTIFY'foo_events''{"userid":42,"action":"grok"}'or the pg_notify() function:

SELECTpg_notify('foo_events','{"userid":42,"action":"grok"}');The subscription happens with the LISTEN command, but typically you’ve to use driver-specific APIs. Here’s the Go version for example.

Table Inheritance

Assume you have a table called “invoices”. You’ve to now support “government invoices”, which are invoices but have a few additional fields. How would you model this? Rather than adding nullable columns to invoices, or a single nullable JSON column to invoices, try the inheritance feature:

CREATETABLEinvoices(invoice_numberintNOTNULLPRIMARYKEY,issued_ondateNOTNULLDEFAULTnow());CREATETABLEgovernment_invoices(department_idtextNOTNULL)INHERITS(invoices);This reflects the situation that all government invoices are invoices, but have an extra attribute. The “government_invoices” table above has a total of 3 columns:

test=# \d invoices

Table "public.invoices"

Column | Type | Collation | Nullable | Default

----------------+---------+-----------+----------+---------

invoice_number | integer | | not null |

issued_on | date | | not null | now()

Indexes:

"invoices_pkey" PRIMARY KEY, btree (invoice_number)

Number of child tables: 1 (Use \d+ to list them.)

test=# \d government_invoices

Table "public.government_invoices"

Column | Type | Collation | Nullable | Default

----------------+---------+-----------+----------+---------

invoice_number | integer | | not null |

issued_on | date | | not null | now()

department_id | text | | not null |

Inherits: invoicesAdding rows work as though the tables are independent:

INSERTINTOinvoices(invoice_number)VALUES(100);INSERTINTOgovernment_invoices(invoice_number,department_id)VALUES(101,'DOD');But watch happens when you SELECT:

test=# SELECT * FROM government_invoices;

invoice_number | issued_on | department_id

----------------+------------+---------------

101 | 2018-06-19 | DOD

(1 row)

test=# SELECT * FROM invoices;

invoice_number | issued_on

----------------+------------

100 | 2018-06-19

101 | 2018-06-19

(2 rows)Invoice number 101 from the child table is also listed in the parent table. This facilitates algorithms that know only about invoices to work on the parent invoices table while ignoring any child tables.

You can learn more about table inheritance in PostgreSQL in the docs.

Foreign Data Wrappers

Did you know you can have virtual tables that actually serve data from another PostgreSQL instance? Or even SQLite files, MongoDB, Redis, and more? This feature is called Foreign Data Wrappers, which provides a standardized way to access and manipulate data stored externally to the Postgres server you’re connecting to. There are various FDW implementations available that let you connect to various different data sources. These are typically packaged as extensions.

The standard Postgres distribution comes with thepostgres_fdw extension, which lets you connect to other Postgres servers. For example, you can move a big table to another server, and setup a virtual table (the proper term is a “foreign table”) locally:

-- install the extension (required only once)

CREATE EXTENSION postgres_fdw;

-- big_server is our big, remote server with the migrated table

CREATE SERVER big_server FOREIGN DATA WRAPPER postgres_fdw

OPTIONS (host '10.1.2.3', dbname 'big_db');

-- create a user mapping for the app user

CREATE USER MAPPING FOR app_user SERVER big_server

OPTIONS (user 'remote_user', password 'remote_pass');

-- and finally create the virtual table invoices -> big_db.public.invoices

CREATE FOREIGN TABLE invoices (

invoice_num int NOT NULL PRIMARY KEY

-- other columns omitted for brevity

) SERVER big_server;This Wiki page has a good list of the many FDW implementations available.

Apart from accessing data from other servers, FDW has also been used to implement alternate storage layouts, like cstore_fdw.

There is also dblink, which is yet another method of accessing remote PostgreSQL (only) data.

Partitioned Tables

Starting with version 10, PostgreSQL natively supports sharding of a table into multiple child tables, based on a partition key value computed from one or more column values. This allows a single, large table to be physically stored as separate tables, for better DML performance and storage management.

Here’s how to create a partitioned table, with the intention of adding a new table each month:

-- the parent table

CREATE TABLE invoices (

invoice_number int NOT NULL,

issued_on date NOT NULL DEFAULT now()

) PARTITION BY RANGE (issued_on);

-- table for the month of May 2018

CREATE TABLE invoices_2018_05 PARTITION OF invoices

FOR VALUES FROM ('2018-05-01') TO ('2018-06-01');

-- table for the month of June 2018

CREATE TABLE invoices_2018_06 PARTITION OF invoices

FOR VALUES FROM ('2018-06-01') TO ('2018-07-01');The child tables have to be manually or programmatically created, it does not happen automatically.

You can query and insert at the parent level, and PostgreSQL will route it to the appropriate child table, have a look:

First, we insert two rows:

test=# INSERT INTO invoices VALUES (10042, '2018-05-15');

INSERT 0 1

test=# INSERT INTO invoices VALUES (43029, '2018-06-15');

INSERT 0 1We can see that the rows are actually inserted into the child tables:

test=# SELECT * FROM invoices_2018_05;

invoice_number | issued_on

----------------+------------

10042 | 2018-05-15

(1 row)

test=# SELECT * FROM invoices_2018_06;

invoice_number | issued_on

----------------+------------

43029 | 2018-06-15

(1 row)But queries can be done on the parent, returning combined result:

test=# SELECT * FROM invoices;

invoice_number | issued_on

----------------+------------

10042 | 2018-05-15

43029 | 2018-06-15

(2 rows)Partitioning is in some ways similar to inheritance (query at parent level), but there are differences too (partition parent contains no data, for example). You can read more about partitioning in the docs.

PostgreSQL 11, currently in beta, improves upon this feature. This article has an overview of the improvements.

Range Types

Ever worked with temperature ranges, calendar scheduling, price ranges and the like? Working with intervals are one of those deceptively simple things that gently lead you into hair-pulling and late night debugging.

Here’s a table with a range column, and some values:

CREATE TABLE prices (

item text,

price int4range -- int4range is a range of regular integers

);

INSERT INTO prices VALUES ('mouse', '[10,16)');

INSERT INTO prices VALUES ('keyboard', '[20,31)');

INSERT INTO prices VALUES ('joystick', '[35,56)');The numbers in the mismatched brackets represent a half-open interval.

Here is the query to find all items that are in the price range $15 to $30,

using the && operator (range overlap):

test=# SELECT * FROM prices WHERE price && int4range(15,30);

item | price

----------+---------

mouse | [10,16)

keyboard | [20,31)

(2 rows)If you are not impressed, try writing the query without ranges. (No really, just try it.)

Range types are quite powerful – there are more operators,functions, you can define your own range types, and even index them.

To learn more about ranges, try this presentation, and the docs.

Array Types

PostgreSQL has supported arrays for a long time. Array types can reduce the amount of boilerplate application code and simplify queries. Here is a table that uses an array column:

CREATE TABLE posts (

title text NOT NULL PRIMARY KEY,

tags text[]

);Assuming each row represents a blog post, each having a set of tags, here is how we can list all the posts that have both “postgres” and “go” tags:

test=# SELECT title, tags FROM posts WHERE '{"postgres", "go"}' <@ tags;

title | tags

-----------------------------------+------------------------

Writing PostgreSQL Triggers in Go | {postgres,triggers,go}

(1 row)The usage of the array type here makes for concise data modelling and simpler queries. Postgres arrays come with operators and functions, including aggregate functions. You can also create indexes on array expressions. Here isan article on using arrays with Go.

Triggers

You can ask PostgreSQL to execute a specific function when rows are inserted, updated or deleted from a table. The function can even alter the values during an insert. You can read more about triggers here. Just to whet your appetite, here is a trigger that sends out a notification and writes to an audit log when a user is created:

-- a table of users

CREATE TABLE users (

username text NOT NULL PRIMARY KEY

);

-- an audit log

CREATE TABLE audit_log (

at timestamptz NOT NULL DEFAULT now(),

description text NOT NULL

);

-- the actual function that is executed per insert

CREATE FUNCTION on_user_added() RETURNS TRIGGER AS $$

BEGIN

IF (TG_OP = 'INSERT') THEN

-- add an entry into the audit log

INSERT INTO audit_log (description)

VALUES ('new user created, username is ' || NEW.username);

-- send a notification

PERFORM pg_notify('usercreated', NEW.username);

END IF;

RETURN NULL;

END;

$$ LANGUAGE plpgsql;

-- set the function as an insert trigger

CREATE TRIGGER on_user_added AFTER INSERT ON users

FOR EACH ROW EXECUTE PROCEDURE on_user_added();Now if we try to add a new user, an audit log entry is automatically added:

test=# INSERT INTO users VALUES ('alice');

INSERT 0 1

test=# SELECT * FROM audit_log;

at | description

-------------------------------+-------------------------------------

2018-06-19 04:00:30.672947+00 | new user created, username is alice

(1 row)pg_stat_statements

pg_stat_statements is an extension that is present by default in your PostgreSQL distribution, but is not enabled. This extension records a wealth of information about each statement executed, including the time taken, the memory used and disk I/Os initiated. It’s indispensible for understanding and debugging query performance issues.

The overhead of installing and enabling this extension is small, it’s very simple to use, and there is no reason NOT to run it on your production servers! Read the docs for more info.

Hash, GIN and BRIN Indexes

The default index type in PostgreSQL is the B-Tree, but there are also other types, that are documented here. Other index types are very helpful in cases that aren’t actually uncommon. In particular, setting up indexes of the hash, GIN and BRIN type might just be the solution to your performance issues:

- Hash: Unlike B-Tree indexes which have inherent ordering, hash indexes are unordered and can only do equality matches (lookup). However, hash indexes occupy much lesser space and are faster than B-Trees for equality matches. (Also, note that prior to PostgreSQL 10 it was not possible to replicate hash indexes; they were unlogged.)

- GIN: GIN is an inverted index, which essentially allows for multiple values for a single key. GIN indexes are useful for indexing arrays, JSON, ranges, full text search etc.

- BRIN: If your data has a specific, natural order – for example, time series data – and your queries typically work only with a small range of it, then BRIN indexes can speed up your queries with very little overhead. BRIN indexes maintain ranges per block of data, allowing the optimizer to skip over blocks that contain rows that won’t be selected by the query.

Start reading about PostgreSQL index types here.

Full Text Search

PostgreSQL can do full text search very well, including in languages other than English. Here’s an [article] (https://www.opsdash.com/blog/postgres-full-text-search-golang.html) that walks you through the steps of creating a full text query search app over Postgres in Go.

About pgDash

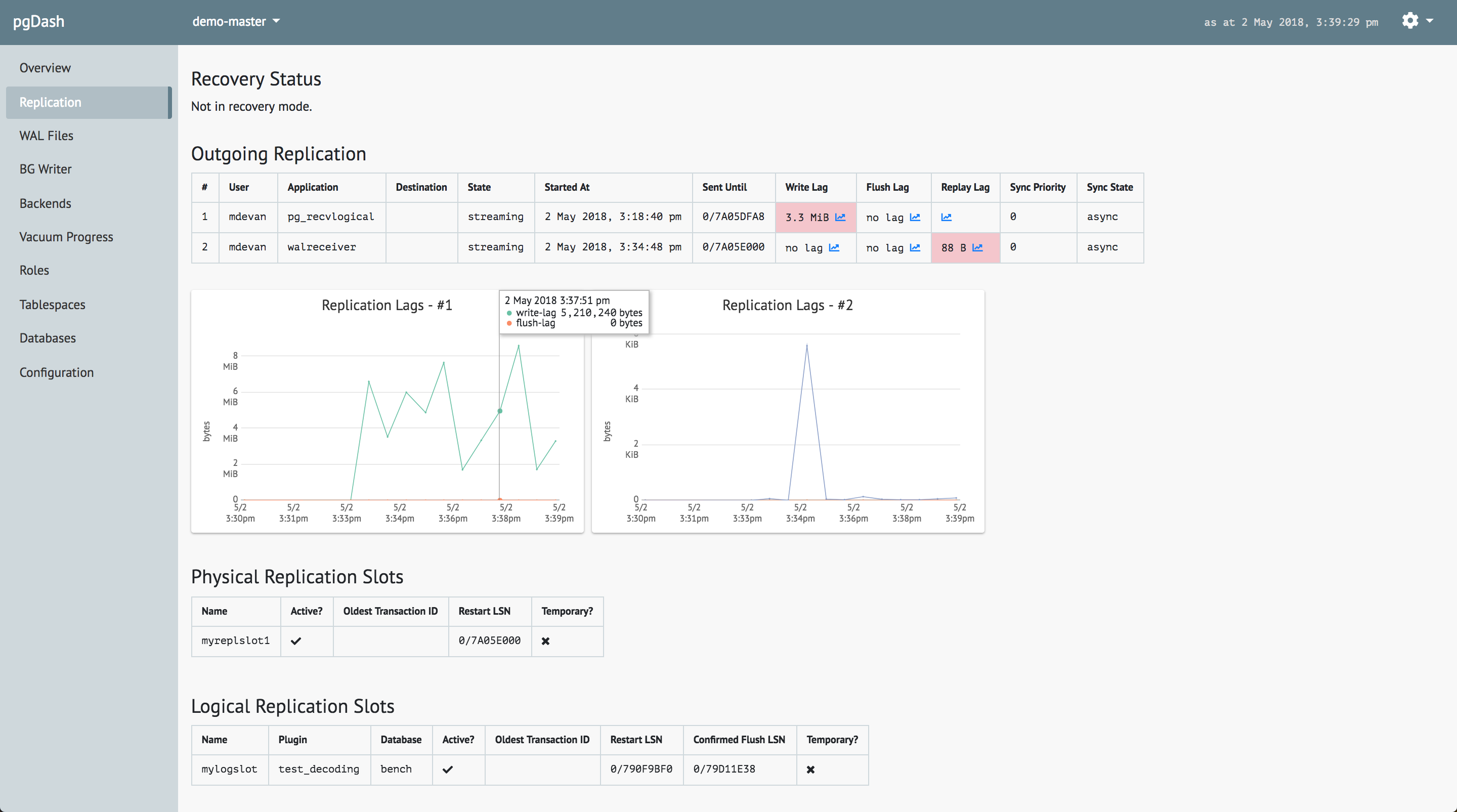

pgDash is an in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash is currently in Beta and provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, alerting and more.

Serverless is a new buzzword in the database industry. Even though it gets tossed around often, there is some confusion about what it really means and how it really works. Serverless architectures rely on third-party Backend as a Service (BaaS) services. They can also include custom code that is run in managed, ephemeral containers on a Functions as a Service (FaaS) platform. In comparison to traditional Platform as a Service (PaaS) server architecture, where you pay a predetermined sum for your instances, serverless applications benefit from reduced costs of operations and lower complexity. They are also considered to be more agile, allowing for reduced engineering efforts.

Serverless is a new buzzword in the database industry. Even though it gets tossed around often, there is some confusion about what it really means and how it really works. Serverless architectures rely on third-party Backend as a Service (BaaS) services. They can also include custom code that is run in managed, ephemeral containers on a Functions as a Service (FaaS) platform. In comparison to traditional Platform as a Service (PaaS) server architecture, where you pay a predetermined sum for your instances, serverless applications benefit from reduced costs of operations and lower complexity. They are also considered to be more agile, allowing for reduced engineering efforts.

When using a text file, create a line in pf.conf like

When using a text file, create a line in pf.conf like