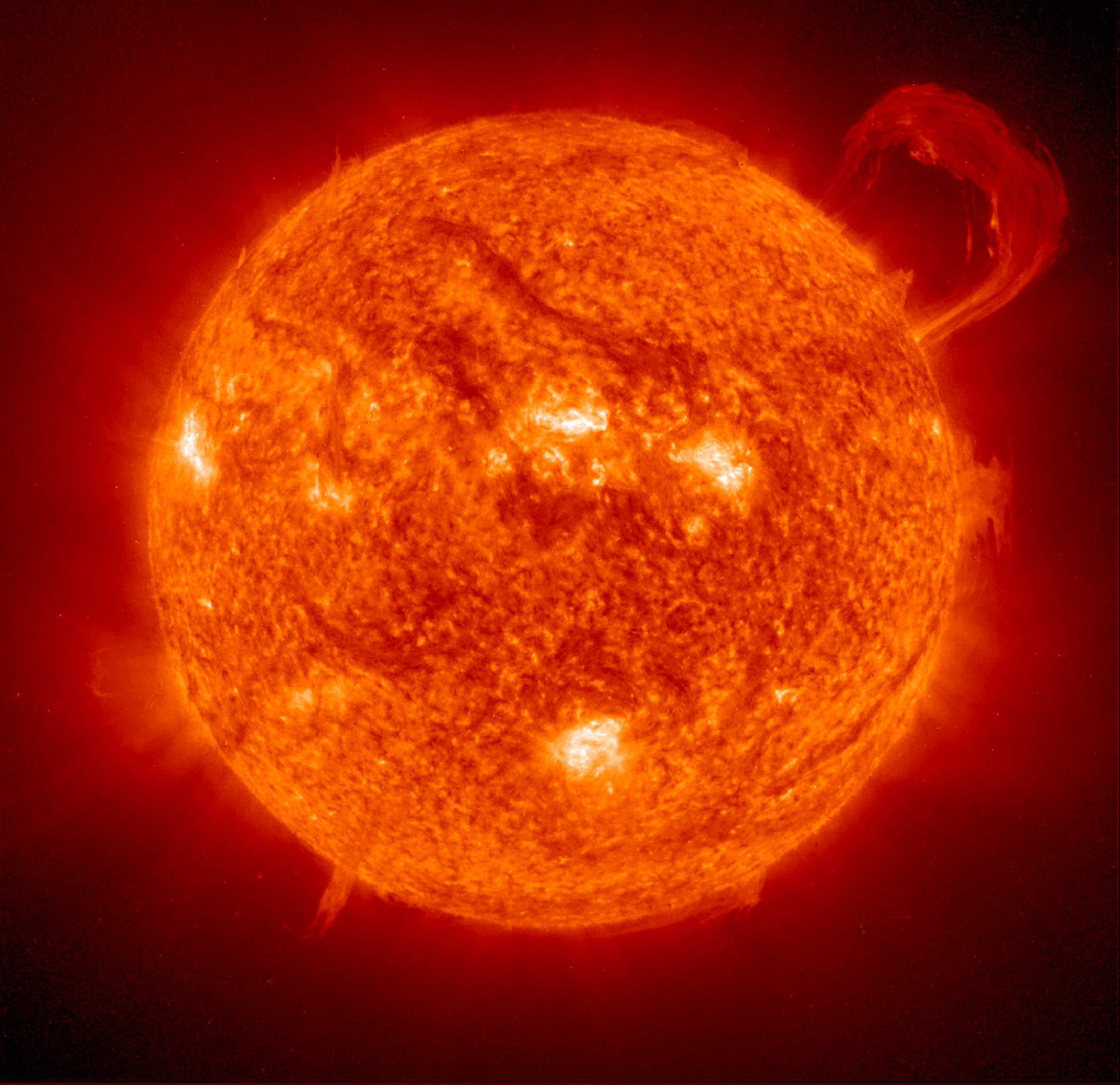

Those of us with the means to do as we pleased drove our vast resources into monumental construction. My grandest project was a Chinese city, tragically cut short by server reset. View from the north, inside the outer wall.

The first game I ever outsmarted was Final Fantasy 6. I was eight or nine. The rafting level after the Returners' hideout. You're shunted along on a rail, forced into a series of fights that culminate with a boss encounter. You have a temporary party member, Banon, with a zero-cost, group-target heal. The only status ailment the river enemies apply is Darkness, but as a consequence of an infamous bug with the game's evade mechanic, it does nothing. The river forks at two points, and the "wrong" choice at one of the forks sends the raft in a loop. There is a setting that makes the game preserve cursor positions for each character across battles.

I set everything up just right, placed a stack of quarters on the A button, turned off the television, and went to sleep.

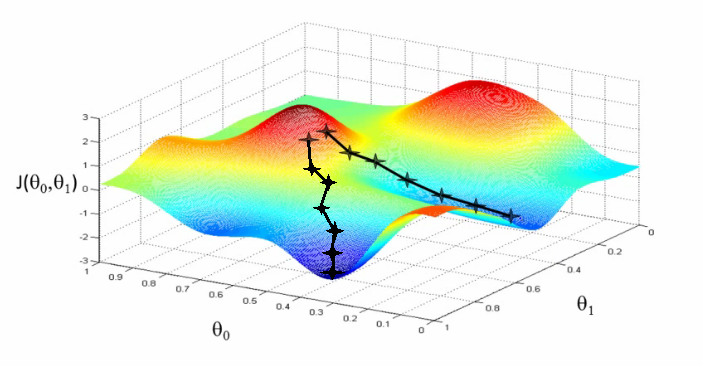

I've always loved knowable systems. People are messy and complicated, but systems don't lie to you. Understand how all the parts work, understand how all the parts interact, and you can construct a perfect model of the whole thing in your head. Of course it's more complicated than that. Many people can be understood well enough for practical purposes as mechanical systems, and actual mechanical systems can be impossibly complex and plenty inscrutable. There are entire classes of software vulnerabilities that leverage physical properties of the hardware they run on, properties sufficiently abstracted away that most programmers have never in their lives considered them. But the thought is nice. I dreamed of going into constitutional law as a kid, back when I thought law was a perfect system, with outputs purely a function of inputs, that could be learned and trusted. I got fairly decent at interacting with people basically the same way you train a neural net, dumbly adjusting weights to minimize a loss function until I stumbled into something "good enough." I have to physically suppress the urge to hedge nearly everything I say with, "Well, it's way more complicated than this, but..."

So I know what I like, at least. For a while, games scratched this itch. Outright cheating always kind of bored me. Any asshole could plug in a Game Genie and look up codes online. (It had not occurred to me, as a child, that these "codes" were actually modifications to learnable systems themselves.) What I liked was playing within the confines of the rules, building an understanding of how the thing worked, then finding some leverage and exploiting the hell out of it. It's an interesting enough pursuit on its own, but all that gets cranked up an order of magnitude online. You're still just tinkering with systems. Watching how they function absent your influence, testing some inputs and observing the outputs, figuring things out, and taking control. But now you have marks, competition, and an audience. And just, like, people. People affect the system and become part of the system and make things so much more complex that the joy of figuring it all out is that much greater.

After sinking 10-20k hours into a single MMO and accomplishing a lot of unbelievable things within the confines of its gargantuan ruleset, it is generally pretty easy for me to pick up another game and figure out what makes it tick. I'll tell the story of that whole experience sometime, but it's a long one. This is about one of those other games: Minecraft.

Classical Chinese city planning divided the space of a city into nine congruent squares, numbered to sum to 15 in all directions. Everything was oriented on a north-south axis, with all important buildings facing south (here toward the bottom right). The palace sat in the center, protected by its own wall with gatehouses and corner towers more ornate than those on the outer wall. The court sat in front of the sanctum, the market behind, the temple to the ancestors to the left, and temples to agriculture on the right. This all derives from the Rites of Zhou, and is presumed to be the exact layout of their first capital, Chengzhou, before the flight to Wangcheng.
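The numbering that "sums to 15 in all directions" is the Lo Shu magic square. A quick Python check of one standard arrangement (which orientation maps onto the city's compass directions is an assumption here; any rotation or reflection of it is also magic):

```python
# One standard arrangement of the Lo Shu square. The orientation is assumed;
# every rotation and reflection of it is also a valid 3x3 magic square.
LO_SHU = [
    [4, 9, 2],
    [3, 5, 7],
    [8, 1, 6],
]

def line_sums(square):
    """Return the sums of every row, every column, and both diagonals."""
    n = len(square)
    rows = [sum(row) for row in square]
    cols = [sum(square[r][c] for r in range(n)) for c in range(n)]
    diag = sum(square[i][i] for i in range(n))
    anti = sum(square[i][n - 1 - i] for i in range(n))
    return rows + cols + [diag, anti]

def is_magic(square, target=15):
    """True if the cells are exactly 1..n^2 and every line sums to target."""
    n = len(square)
    cells = sorted(v for row in square for v in row)
    return cells == list(range(1, n * n + 1)) and all(s == target for s in line_sums(square))

print(line_sums(LO_SHU))  # [15, 15, 15, 15, 15, 15, 15, 15]
print(is_magic(LO_SHU))   # True
```

In any 3×3 magic square built from 1 through 9, the center cell is 5, the square the palace occupies.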

The Players (names changed)

- Alice
  - Ok this name wasn't changed.

- Emma
  - Breathtaking builder with nigh-limitless cash reserves. Often called the queen of the server and earned the hell out of the title. She'd buy items at three times the market rate just because she needed lots fast, and she bought so much her price became the price. And the builds she made with them were truly remarkable. Living legend.

- Samantha
  - Before the currency was backed by experience points, she built the fastest mob grinder on the server and made an ungodly amount of money selling enchantments. Would quit for months, come back, and shake the economy up like no one else.

- Victoria
  - Chief architect of an extensive rail network in the nether. Kept a finger on the pulse of the economy and bled it for all it was worth. Played the market like a harp. A lot of our best schemes were Victoria's schemes.

By the time of the Crash, we four were among the most influential people in the economy. By the end of the recovery from it, we owned the economy. The cartel we formed to pull the market back from the brink had about a half-dozen other significant players, and everyone contributed plenty, but for the most part the four of us called the shots and had the capital to back it up. When the server was wiped for the biome update, we vaulted every hurdle, most of which were put in place specifically because of us, and reclaimed our riches in a matter of months.

- Zel
  - Market maker. Known for the Emporium, a massive store near the marketplace proper that also bought everything it sold, a rare practice. Got rather wealthy off the spread on items; I almost single-handedly bankrupted them by exploiting price differences between their store and the rest of the market. Also wrote our IRC bot, so for a time !alice triggered a lighthearted joke about my ruthlessness.

- Lily
  - Kicked off the wool bubble. Did quite well for herself, as she was a producer rather than a speculator.

- Charlotte
  - Discovered an item duping glitch and crashed the entire economy. Never shared the existence of the vuln or her exploit for it with a soul, as far as I know. It was obvious to me what she was doing, but only because I understood the economy well enough to know it was impossible any other way. If she'd switched to a burner account and laundered the money, she probably would have gotten away with it. Good kid. Hope she learns to program.

- Jill, Frank
  - Just as lovely as everyone else, but for our purposes, "two wool speculators."

My main shop in the market, the last in a series of four locations, trading up each time.

Starting Out

Working a game's economy is an interesting pursuit because it, like most interesting pursuits, requires your whole brain to get really good at it. It's both analytical and creative: devise general theories with broad applicability, but retain a willingness to disregard or reevaluate those theories when something contradicts them. And it's fun as hell. There's not much quite like the brainfeel of starting with nothing, carving out a niche, getting a foothold, and snowballing. Game economies are all radically different because there aren't any limits on weird things the designers can do with the game, but they're all fundamentally similar too. Here are the tricks to breaking any of them, as basic as they may be:

- Learn the game inside and out. You don't necessarily need to get good at it. (I was a terrible player of my MMO for the first couple of years I was involved in top-tier play. My primary role in my guild before I actually got good at the game itself was "in-house mechanics wonk," and it was an important role.) But you need to know what "good" is. It's hard to speculate on pork bellies without understanding why people care about pigs in the first place.

- Read the patch notes and keep them in mind. Read the upcoming changes until you know them by heart. Actually think about how changes to the game will change the market. This is as overpowered as insider trading is in the real economy, except the information is all right there in public. Most players never do this, and you can make a killing in any game by hoarding the things that will be more valuable when the patch hits than they are right now. You would be amazed how fast "I'm so excited about [useless item becoming incredibly useful]!!" turns to "omg why is [suddenly useful item] so expensive :( :(" the moment the patch drops.

- Study a tiny piece of the market. Don't touch it, just watch until you think you understand it. Make a small bet and see whether it pays off. Consider whether your hypothesis was right or whether you just got lucky. Slowly increase the size of your bets. Explore other tiny pieces. Think about how those pieces interact, how they are similar, how they differ. Manage your risk. Accrue capital so you can increase the size of your bets while decreasing your risk of ruin. It's a bit of art and a bit of science, but you can go from dabbling in a few niches to having a complete understanding of the entire market before you even know it.

- Study people. Know your competition and know what makes them tick. Know the major buyers, know the tendencies of the swarms of anonymous buyers.

- Overall just know a lot of things I guess.

I started on my server with only a rudimentary knowledge of the game itself and ipso facto zero understanding of its economy. Within six months or so, I had perhaps as detailed a mental model of it as one could get. I knew the price ranges of most of the items in the game and everything that all of them were used for. I knew how common they were on the market, who the major sellers were, what their supply chains looked like. I knew how fast they sold through, whether the price was stable or tacking a certain way, and I had tons of theories on ways to play all this to get what I needed and turn a profit while doing it, and nearly all of them were sound. Most of it I didn't even think about. I didn't need to contemplate why, for instance, lumber was both cheaper and more common than it should be, such that I could buy it all and hold, force the price up, corner the market, and keep it that way. I just kind of... knew, and did it. It's a wonderful feeling, weaving a system into your mind so tight that it's hard to find the stitches after a while. Highly recommended.

Approaching the city from the north, outside the outer wall. In general I modelled the structures on the outer wall on the robust Song styles, with the inner more in the ornate Ming fashion. Terraced farms harvestable by water alongside large livestock pens provided all the food I needed.

Econ Infodump

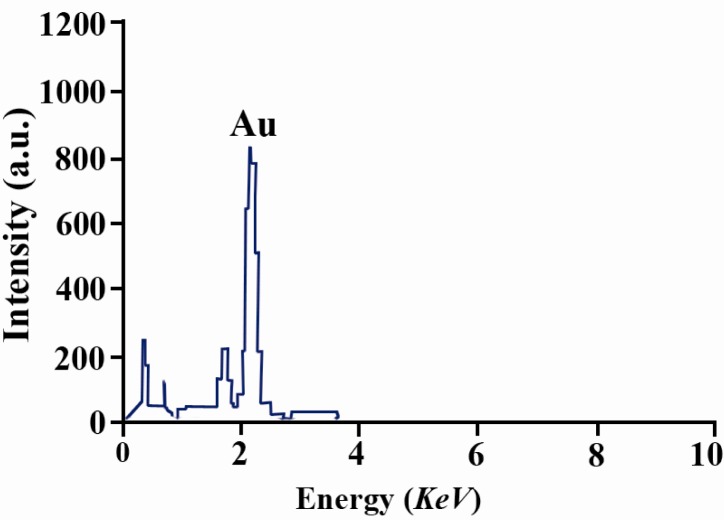

Our server had an economy plugin called QuickShop. It added to the game a currency called marbles. Functionally marbles were nothing more than a number tied to your account. Marbles were backed by experience points, freely convertible in both directions by command. Experience points had intrinsic value because they were used for item enchantments, for instance to increase the mining speed of a tool or the damage of a weapon. Marbles thus had all the properties of a proper medium of exchange.

QuickShop also added, as one might expect, shops. Shops were chests with signs on them that bought or sold a designated item at a designated price. The moderators reserved a tract of land close to spawn as the official marketplace and leased a limited number of plots there to the public. There were many attempts by the playerbase to create competing unofficial markets, with varying degrees of success. The official market also had an official store, which sold certain items that were nearly impossible (cracked stone brick) or literally impossible (spawn eggs, sponge) to get otherwise. There was a plugin to lock chests, a plugin to remove randomness from the enchanting process, a plugin to bound the size of the world, a plugin to warp you between spawn, market, and your designated home. Other than that the server was vanilla survival non-PVP, no weird items or mobs. (In most cases I'm talking about 1.5 unless context implies 1.6/1.7.)

One marble was equal to two levels of experience. A diamond was generally 18-23M depending on the economy, and as diamonds were extremely useful and their price could generally be relied on to stay in this range, they were an excellent alternative store of value. Stone and dirt were 0.01M, the smallest unit a marble could be subdivided into. In practice currency divisibility was never a source of friction; most resources were bought and sold by the stack (64 blocks) or chest (1728 blocks). Beacons were the most valuable item and ranged anywhere from 2000M to 8000M. A max enchant for an item cost 40-60 levels, i.e. 20-30M. Anyone who mined diamond without Fortune 3 was a fool; anyone who broke any blocks at all without Efficiency 5 Unbreaking 3 was a scrub. The server had somewhere between a few hundred and a thousand weekly active players, maybe more than a thousand during summer months. Only the top ~1-3% were wealthy enough that we didn't have to mine unless we felt like it and were free to devote all our playing time to Great Works.
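Since 0.01M was the smallest unit, prices behave like fixed-point money. A minimal sketch of the stack and chest arithmetic, using Python's `decimal` module to avoid binary floating-point rounding (the helper name `bulk_price` is hypothetical, not part of QuickShop):

```python
from decimal import Decimal

STACK = 64              # blocks per stack
CHEST = 27 * STACK      # single chest: 27 slots of 64 = 1728 blocks
TICK = Decimal("0.01")  # smallest divisible unit of a marble

def bulk_price(per_unit: str, quantity: int) -> Decimal:
    """Total cost in marbles of `quantity` items at a per-unit price."""
    price = Decimal(per_unit)
    if price % TICK != 0:
        raise ValueError("prices must be whole multiples of 0.01M")
    return price * quantity

print(bulk_price("0.01", CHEST))  # 17.28: a chest of dirt
print(bulk_price("20", STACK))    # 1280: a stack of diamonds at 20M each
```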

Virtual economies can be quite unlike real-world ones because the physical laws of the space are different, but analogies can be drawn, and the similarities and differences are both fascinating. Due to the warp mechanism, other than near public warp points like spawn and market, land was abundant and low-value--it mattered little whether you were 5000 or 50000 blocks from spawn if you could /home there from anywhere. Property rights were enforced by the admins. Claims worked something like homesteading in that you couldn't just stick a sign in the dirt, but if you worked the land, it was yours. Land could be owned communally, but tenants had no rights except to their movable property. ("Let's build a town together" sometimes worked well. "Hey join my town" never did.) Vandalism and theft were mostly eliminated by the LogBlock panopticon. With suspensions and permabans being the only analogs for physical coercion, the admins derived their monopoly on violence from the fundamental properties of the universe. They used their power very carefully, however, kept in check by the fact that there were zero barriers to exit beyond social ties and time investment. The internet births interesting societies.

One property of Minecraft itself is that items can be grouped into the disjoint sets "renewable" and "nonrenewable". Nonrenewable resources are just that: a fixed number of them are created when the world is genned, no more will ever exist. Diamond is the big one, both mechanically crucial and totally nonrenewable, hence its excellence as a store of value. The only way to get them is to dig them out of the ground. This isn't a statement on effort or rarity--dirt is nonrenewable, for instance--just the fact that they cannot be created out of thin air.

Renewable resources can be created out of thin air. A subset of renewable resources can be farmed; a subset of farmable resources can be autofarmed. Some examples: redstone is technically renewable through villager trading or witch farming, but the cost of the former and the hassle of the latter make it infeasible, especially given how commonly it's encountered while diamond mining. Wheat is both renewable and farmable: plant seeds, harvest wheat, get more seeds from that, repeat. Harvesting can be automated with machines that run water over the fields and send the products into hoppers that feed chests. Replanting, however, requires a right click. Cactus is completely autofarmable: cacti grow to three blocks tall, cactus blocks break and drop the item if directly adjacent to another block, cacti grow irrespective of that fact. So you float blocks next to where they'd grow, flowing water underneath to channel the output into a hopper, cactus blocks accumulate forever with zero human intervention.

The important bit is that with farmable resources, if you double the size of your farm, you double your output but spend less than double the time on each harvest. But the only way to get twice as much diamond is to dig twice as long. Sublinear vs. linear effort. Autofarmable resources take time to accumulate, but time spent harvesting is for practical purposes zero.
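A toy cost model makes the difference concrete. Assuming each manual harvest carries a fixed overhead (walking to the farm, setting up) plus a small per-plot cost, doubling a farm less than doubles harvest time, while mining time stays strictly proportional to diamonds. All constants below are invented for illustration:

```python
# Invented constants: only the shape of the curves matters here.
HARVEST_OVERHEAD_MIN = 10.0  # travel and setup, paid once per harvest
MINUTES_PER_PLOT = 0.5       # marginal time for each additional plot
MINUTES_PER_DIAMOND = 3.0    # every diamond costs the same digging time

def farm_minutes(plots: int) -> float:
    """One manual harvest: fixed overhead plus a per-plot cost."""
    return HARVEST_OVERHEAD_MIN + MINUTES_PER_PLOT * plots

def autofarm_minutes(_plots: int) -> float:
    """Autofarms harvest themselves; collection time is treated as zero."""
    return 0.0

def mining_minutes(diamonds: int) -> float:
    """Mining scales strictly linearly with output."""
    return MINUTES_PER_DIAMOND * diamonds

farm_ratio = farm_minutes(200) / farm_minutes(100)      # 110 / 60
mine_ratio = mining_minutes(200) / mining_minutes(100)  # 600 / 300
print(round(farm_ratio, 3), mine_ratio)  # 1.833 2.0
```

The fixed overhead is the simplest assumption that produces "less than double"; any real farm has its own curve.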

The properties of diamond make it in many ways the most crucial and interesting item in the game. But I'll get to that. First, a story about sheep.

Approach from the south, audience hall before the main entrance to the inner city. The guardian lions are arranged as is traditional. Male, on the left (from a south-facing perspective), its paw on a ball representing dominance over the world. Female, on the right, its paw on a cub representing nature.

All's Wool That Ends Wool

Sheep function kind of like wheat. You can breed an arbitrary number of them and efficiency increases on a curve, but shearing hundreds of sheep is at least as time-consuming as replanting hundreds of seeds, probably more so. Wool is a much more interesting resource, though. Sixteen different colors, and when building with wool, you generally need particular colors and you need them in bulk. White wool blocks can be dyed individually, but dye cost makes this infeasible for most colors in large batches. You can dye a sheep any color, however, and it will produce that color wool indefinitely.

Colored wool was notoriously hard to get on the market, and most just took this as a fact of life. People tended to grow their own food because it was relatively low-effort and cost nothing. So it was common to just keep a pen of sheep near the food, dye them when you needed a particular color, shear them over the course of days or weeks until you had enough wool for your build. Market rate for white wool was 0.08M, stable supply and decent demand, perfectly natural price. Colored wool, however...

- You'd need sixteen shop chests rather than just the one, and floorspace in official market shops was valuable because they were size-limited by admin fiat.

- Colored wool sales were virtually impossible to predict. You'd sell out of blue in an instant because someone happened to need blue. Maybe you restock and don't sell another block of blue for a month. So you had to stock everything all the time, meaning you had to have a lot of sheep.

- You may be the only seller in the market, but you're competing against prospective buyers just doing it themselves. Since it was common knowledge that colored wool wasn't available on the market, people had already gotten used to doing it themselves, in part because...

- Shearing sheep was fucking annoying. Shearing sixteen different pens of sheep for 0.08M a block when most of those blocks would languish most of the time was plain stupid. Sellers lost interest fast, so buyers got used to here-today-gone-tomorrow wool stores and adapted to there never being a reliable source.

What should have been obvious though was that because of all this, colored wool was simply more valuable. Plenty of demand, virtually no supply, who gives a shit about the price of white wool, right? People just assumed because the blocks were mechanically the same, they were worth the same.

Emma realized this and spread word on the grapevine that colored wool was an untapped market with massive profit potential. (I'm pretty sure she did it because she needed a lot of wool and was hoping for someone to do the work for her.) And whenever Emma said there was money to be made, everyone listened.

Lily was the first. She bred up hundreds and hundreds of sheep (so many that she had to cull the herd a bit because her FPS cratered whenever she looked at them) and by week's end there was a brand new wool store in the market with a full chest of every single color, plus a sign promising there'd always be more where that came from. She sold for 0.12M and business was good.

Really good, in fact. Even with the 50% increase, she sheared sheep for hours and could barely stay in stock of any color, despite the received wisdom that colored wool sold erratically. That's because another player, Jill, was buying out her entire inventory and reselling it across the street for 0.2M. And Jill didn't do too bad for herself either. Pretty soon wool went from a backchannel convo topic to the hottest game in town.

On the heels of Jill's success, Frank opened his own wool store. Not only did he sell for 0.35M, he bought via the same doublechest at 0.2M. Lily raised her price to compensate. Zel got in on the action with their own buy/sell doublechests, and everyone said business was booming. Prices kept climbing, and at the height of it, Zel was buying at 0.5M a block and selling for even more, while all the normals who just wanted a bit of wool here and there complained about the "crazy" market. Sorry guys, they were told, that's what wool is actually worth nowadays.

Except not quite. No one who wanted wool for consumption was actually paying those prices, it was all wool merchants buying each other out. I knew better than to get involved--it felt a lot like a bubble, so any investment at all carried unacceptable risk. Unless, of course, there was a way to get instant money with zero risk.

Wool, again, is renewable. It's farmable. Farming it is annoying, sure, but it's dead easy. While the price was climbing on speculation, a new seller nobody had heard of set up his own shop, without much fanfare, selling every color at 0.1M. He'd built his own farm, like they used to back in the olden days of less than a month ago. Jill and I were the only ones who even knew he existed, because every day when he logged in, we kept one eye on the server map. When we saw him warp to market, we raced to his shop to buy out anything he'd restocked. I imagine anyone else who noticed his store just figured it was always empty.

Jill used his wool to refill her chests. She did decent business. I took everything I bought, however, and immediately dumped it into Zel's buy chests. Quintuple what I paid, not a bad deal at all. As I started to fill their chests up, I sold anything else to Frank and a few other small-time buyers, the objective always being to unload what I bought within the hour. After a while, "haha where are you getting all this wool from :P" turned to "no seriously alice where are you getting all this wool from." The bottom fell out of the market as the speculators shifted from "turn a profit" to "cut my losses" to "sell sell sell." Colored wool corrected to the nice sensible price of 0.12M and all was right with the world.

Through the audience hall, a courtyard before the gatehouse guarding the inner city.

"It's Your Money, and I Need It Now!"

Buy chests can be a real bitch. Emma, Victoria, and I used them extensively, but we used them right. Most people didn't use them. Most everyone else who did used them very, very wrong.

If you set a chest to buy an item at some price, it is possible for anyone at any time to sell you 1728 of that item. If you do this, you'd better be damn sure you want 1728 of that item. If you don't, you can pad out the chest with garbage to reduce its capacity. Set up a buy chest for say diamonds, put dirt blocks in 26 of the slots, now you can't be sold more than 64 diamonds. No one ever did this. I'm not sure it even occurred to most people that it was possible. One thing I got very, very used to seeing was the helpful message, "Failed to sell N items: player can only afford M."
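Both limits on a buy chest, free slots and the owner's balance, fit in a few lines. This is a sketch under assumptions, not QuickShop's actual logic: it treats a single chest as 27 slots of 64, ignores partially filled stacks already inside, and `max_units_sold` is a hypothetical name. Amounts are integer hundredths of a marble.

```python
SLOTS = 27        # slots in a single chest
STACK_SIZE = 64   # max stack size for most items, diamonds included

def max_units_sold(padding_slots: int, balance_cents: int, price_cents: int) -> int:
    """Most items a seller can push into a buy chest in one go (hypothetical).

    Capacity shrinks with every slot padded with junk, and the owner
    can only pay for as many units as their balance covers.
    """
    capacity = (SLOTS - padding_slots) * STACK_SIZE
    affordable = balance_cents // price_cents
    return max(0, min(capacity, affordable))

# Diamonds at 20M (2000 hundredths), owner holding 100000M.
print(max_units_sold(0, 10_000_000, 2_000))   # 1728: an unpadded chest takes a full chestful
print(max_units_sold(26, 10_000_000, 2_000))  # 64: 26 slots of dirt leave room for one stack
print(max_units_sold(0, 50_000, 2_000))       # 25: an owner with 500M can only afford 25
```

The third case is the one behind "Failed to sell N items: player can only afford M."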

As long as you knew what you were doing though, buy chests were beautiful tools.

Emma liked to buy materials for her builds for more than what people were selling. (Naturally, she first bought out every seller in the market.) Her prices were so good that people would spend hours a day doing the boring work for her. That's how I got my start, too--sold her maybe 15000 blocks of clay at 1M per over the course of a couple weeks. Enough capital to get me established, and within a month or two after that, playing the market made me enough cash that I didn't have to mine for anything.

Buying for less than market rate worked great too, if you didn't mind waiting. This is what I tended to do, both for things I needed and for things I turned around and sold at a markup. I rarely set up a buy chest that I didn't intend to keep open for months, and I adjusted my prices to change my burn rate rather than ever stop buying. It worked beautifully because over time people came to rely on my buy chests and could trust they weren't going away. I accumulated a group of regulars who sold to me because they knew I was always buying, and word of mouth drove more to me too. "Are ink sacs worth anything?" the newbie asks. "Yep, Alice buys those," says the good samaritan, who then brings them right to my door. Splendid.

Victoria did plenty of that kind of business too, but my favorite hustle of hers was her farm. She had on her land wheat fields, livestock pens, a tree farm, and various other such things. I mean, we all did, but she set up buy chests for all those goods right there at something like a quarter of market rate. I was way the hell in the middle of nowhere, so it wouldn't have worked for me, but she also had the nether rail. "Take the white line to the third stop and you're right there!" And people would go work her fields, shear her sheep, chop down her trees, replant everything, and immediately sell her the goods at rock-bottom prices.

But that was us. Most people who set up buy chests, they were just begging for someone to take all their money. Few angles were more profitable or more reliable. No one was as good at it as me, in large part because I knew the entire market. Once or twice a day I'd stroll through the marketplace peeking in all the stores to see what changed. I didn't just know how much everything was worth, I knew every item every store bought and sold at what price and how much they had and how all those things had changed over time. To a reasonable degree of accuracy, anyway.

Imagine: a p-queue of every chest, prioritized by item importance, "importance" being some heuristic incorporating overall supply/demand, whether I personally needed it, what kind of margin I could expect to make flipping it, whose store it was, what kind of foot traffic that store got, which market it was in... a few other things I suppose; it wasn't a system so much as a feeling. The first few hundred chests in the queue I flat-out memorized. For the next thousand or so, I knew which store, which item, and roughly what price. For all the rest, I knew there was a store in a general area that bought or sold the item at a good, bad, or OK price. By "all the rest" I mean all. At the height of it there was Market East, Market West, Market 2 (don't ask), Zel's Emporium, The Mall, and a couple dozen minor destinations in distant locales most players didn't even know about. Ballpark 16 chests/shop × 16 shops/row × 4 rows/market × 3 markets + Zel ≈ 3200 chests, and a couple thousand more in the hinterlands.
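That p-queue was never a literal program, but the data structure is easy to sketch. Below, a hypothetical `importance` score (a weighted sum of invented factors: flip margin, personal need, foot traffic) ranks chests in a heap via Python's `heapq`. The weights, field names, and chest data are all assumptions for illustration:

```python
import heapq
from dataclasses import dataclass

@dataclass
class Chest:
    shop: str
    item: str
    margin: float         # expected flip profit per unit, in marbles
    personal_need: float  # 0..1: how much I need the item myself
    foot_traffic: float   # 0..1: how busy the shop is

def importance(c: Chest) -> float:
    # Hypothetical weights standing in for what was really a gut feeling.
    return 2.0 * c.margin + 5.0 * c.personal_need + 1.0 * c.foot_traffic

def build_queue(chests):
    heap = []
    for i, c in enumerate(chests):
        # heapq is a min-heap, so push the negated score; the index breaks
        # ties so Chest objects themselves are never compared.
        heapq.heappush(heap, (-importance(c), i, c))
    return heap

def pop_most_important(heap):
    _, _, chest = heapq.heappop(heap)
    return chest

chests = [
    Chest("Market 2", "dirt", margin=0.0, personal_need=0.0, foot_traffic=0.1),
    Chest("Zel's Emporium", "lilypad", margin=2.5, personal_need=0.0, foot_traffic=0.9),
    Chest("Market East", "ink sac", margin=0.2, personal_need=0.8, foot_traffic=0.4),
]
queue = build_queue(chests)
print([pop_most_important(queue).item for _ in range(len(queue))])
# ['lilypad', 'ink sac', 'dirt']

# The ballpark count: 16 chests x 16 shops x 4 rows x 3 markets, before Zel's.
print(16 * 16 * 4 * 3)  # 3072
```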

For most of the people in the top tier, I knew their stores better than they did. It wasn't uncommon, for instance, for Zel to tell someone in chat, "I sell X item for P marbles," only for me to interject, "You sell X for Q but you've been out of stock for a week. Market East, second left, third shop on the right sells for R." One time I caught someone who had been using a hopper to siphon emeralds out of one of Victoria's shop chests. I didn't witness it or anything, I just noticed her supply had steadily dropped over the course of a week at a rate that was highly unusual given how the emerald market normally flowed. Summoned a mod to check the history of the blocks underneath, and my suspicions were confirmed. Victoria hadn't realized anything was even missing.

(A little while later someone scooped a beacon from me in the same manner; I'd since learned who did Victoria's shop, so I private messaged the likely culprit with a few choice words. They apologized profusely, swore to mend their ways... and a few hours later hoppered three beacons back to replace the one they took. A happy ending for all involved, I'd say. After this I replaced the blocks underneath my chests with locked furnaces.)

Anyway. It's easy to cash out on buy chests when you know every shop on the server. And no one had more buy chests than Zel.

The largest structure in the inner city, a palace meant for reception of honored guests and scholar-officials. Similar in layout to a traditional siheyuan, though on a much grander scale. The front structure was more open to visitors (though of course access to the inner city was strictly controlled) while the ruler's living quarters were tucked behind. All in all I didn't leave myself enough space to do a full complex. If I were to do it again, I'd make the city much larger and worry less about leveling terrain (which ended up taking an incredible amount of time).

Ethics in Video Game Commercialism

Zel's Emporium was truly a wonderland. Three stories, couple hundred chests, and every item they sold, they also bought. From the same chest. In theory, the arbitrage business is a good one: set up your shops, keep an eye on the prices, collect free money off the spread with very little effort. The Emporium's stock was so diverse that it did plenty of business in both directions, and Zel had enough cash reserves to bounce back from most setbacks.

Well, most. Let me tell you about lilypads. Lilypads gen on water in swamp biomes. Very common and fairly easy to gather but don't have much use besides decoration. People who wanted them usually just needed a handful to decorate a pond or two, people building in swamps only ever harvested them incidental to other activities, and most people didn't like to build in swamps anyway. Lilypads were garbage. Zel, being the long tail merchant that they were, sold them for 5M. Overpriced relative to their commonness in the world, but fine considering their scarcity in the market. One of their hundreds of tiny rivulets of income.

Zel bought lilypads for 3M. This was easily one of the most absurd prices I had ever seen on the server for anything and I spent days stripping swamp biome after swamp biome for the things just to take advantage of it. I emerged from the swamps and with no warning sold Zel around 3500 lilypads for just over 10000M. Zel later told me they knew the price was high but never in a million years thought anyone would be insane enough to do what I did.

It sounds like I picked on Zel a lot. I really did like them and felt bad about abusing their store so much. Not bad enough not to do it though. Managing a couple hundred chests is hard as hell, and market conditions shifted prices faster than they could keep up. It became almost routine that I'd find something in the market Zel bought for more than the seller sold, buy it out, warp over, free money.
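That routine is a cross-shop arbitrage scan: for each item, compare the cheapest price anyone sells at with the highest price anyone buys at. A minimal sketch; the prices come from the wool and lilypad stories, but the quote format and the "new wool farm" label are placeholders, and stock and chest-capacity limits are ignored:

```python
# Hypothetical quotes: (shop, item, side, price in hundredths of a marble).
# side "sell": the shop sells to players (I can buy there).
# side "buy":  the shop buys from players (I can sell there).
QUOTES = [
    ("new wool farm", "blue wool", "sell", 10),
    ("Frank", "blue wool", "sell", 35),
    ("Frank", "blue wool", "buy", 20),
    ("Zel's Emporium", "blue wool", "buy", 50),
    ("Zel's Emporium", "lilypad", "sell", 500),
    ("Zel's Emporium", "lilypad", "buy", 300),
]

def find_arbitrage(quotes):
    """List items where some shop buys for more than another sells.

    Returns (item, buy_at, ask, sell_to, bid, margin) tuples, best margin first.
    """
    best_ask = {}  # item -> (shop, lowest price anyone sells at)
    best_bid = {}  # item -> (shop, highest price anyone buys at)
    for shop, item, side, price in quotes:
        if side == "sell" and (item not in best_ask or price < best_ask[item][1]):
            best_ask[item] = (shop, price)
        elif side == "buy" and (item not in best_bid or price > best_bid[item][1]):
            best_bid[item] = (shop, price)
    opportunities = []
    for item, (ask_shop, ask) in best_ask.items():
        if item in best_bid:
            bid_shop, bid = best_bid[item]
            if bid > ask:
                opportunities.append((item, ask_shop, ask, bid_shop, bid, bid - ask))
    return sorted(opportunities, key=lambda o: o[-1], reverse=True)

for opp in find_arbitrage(QUOTES):
    print(opp)
# ('blue wool', 'new wool farm', 10, "Zel's Emporium", 50, 40)
```

Zel's own lilypad spread (buy 3M, sell 5M) is not an opportunity on its own; it only became one once the world itself was the cheapest "seller."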

Eventually, to my shock, I completely tapped them out. I didn't think it could be done--that they were always able to buy anything was the defining feature of the store, and their reserves seemed deep enough that I never thought I'd drain them. They widened their spreads even further, dropped their sale prices a bit, and tried to recoup. They still had huge stores of goods, so it's not like they were flat on their ass. They brought in a partner and revamped things a bit, and I even sent letters from time to time when they got a price so egregiously wrong that I felt it would be dishonorable to exploit.

Obviously though, the game was up. I couldn't sell them anything if they had no money.

...so I started running back-to-back transactions where I'd buy just enough of their valuable items to give them the precise number of marbles that I planned to reclaim by selling them junk. All's fair, y'know.
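Sizing the first leg is a ceiling division: the shortfall in their account, divided by the price of whatever I buy from them, rounded up because items come in whole units. A sketch with invented numbers (junk bought at 3M, a valuable item sold at 25M); `units_to_buy` is a hypothetical helper:

```python
def units_to_buy(junk_qty: int, junk_bid: int, their_balance: int, valuable_ask: int) -> int:
    """How many valuable items to buy from a cash-poor shop so that it can
    afford `junk_qty` junk items at `junk_bid` each.

    All amounts are integer hundredths of a marble.
    """
    shortfall = junk_qty * junk_bid - their_balance
    if shortfall <= 0:
        return 0  # they can already pay for the junk
    return -(-shortfall // valuable_ask)  # ceiling division: items are whole units

print(units_to_buy(100, 300, 1_000, 2_500))  # 12
print(units_to_buy(10, 300, 5_000, 2_500))   # 0
```

Rounding up leaves the shop a small surplus; buying a cheaper item on the first leg shrinks it.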

The upstairs of my shop. After a few weeks gathering for Emma to get myself established, I started keeping clay for myself. By the time 1.6 came I had five or six doublechests and solid stocks of, or supply chains for, every dye. I was the only seller and did pretty well.

Clays 'n Saddles

I don't think I can stress this enough: even if you suck at playing the market, even if you don't have much time to invest into the game, you can blow any virtual economy wide open just by reading the upcoming changes, predicting how those changes will shift supply and demand curves, and investing in items based on those predictions. In huge, complicated MMOs, it can be hard to make accurate predictions without an encyclopedic knowledge of game mechanics. Often, though, it's pretty simple. People want cool shit.

Minecraft 1.6 was colloquially known as The Horse Update. It added such things as:

- Horses

- Hardened clay

- Coal blocks

- Stained clay

- Carpets

It was a popular topic of conversation on our 1.5 server for some time; we always got updates several months late since we needed to wait for Bukkit and our core plugins to update. (I'm not sure if things have changed since, but back then, every Minecraft update was a breaking one. Modders had to dump the jars every release and work from the decompiled artifacts directly. It is a testament to how enjoyable a game Minecraft is to play that it even has a mod community at all.) Here are all the new 1.6 features people on our server talked about:

- Horses

- Horses

- Omg horses

- Guys I can't wait for horses

- Horses!!!!

To ride a horse you need a saddle. Prior to 1.6, saddles could only be used to ride pigs, and pigs are terrible mounts, so no one used them. Several shops stocked saddles at 20-30M. Some people sold for more, since saddles were uncraftable and pretty rare, but no one ever paid that much. For about a month leading up to 1.6, I bought any saddle I could find under 60M. In theory most players would only need one or two of the things, so I didn't want to spend absurd amounts on them. I ended up with several dozen, figured they'd go up to maybe 80-90M and I'd turn a decent profit.

Then 1.6 hit and people absolutely lost their minds. Turns out, only a minority of the players so excited about horses had made sure to get ahold of a saddle before the patch. Most of them just assumed they could buy one on patch day, so why bother getting one early? Several rich players had stockpiled plenty but had zero intention of selling them. Every saddle in the market vanished within a matter of hours: 80M, 120M, 150M, it didn't matter, and after they were all gone, there was much wailing and gnashing of teeth. I knew the price would rise, but I didn't think it would rise that high. You can usually get a saddle or two from a solid day of dungeon crawling. But no one wanted to go explore boring old dungeons. They wanted to ride horses, dammit.

I placed a chest in the center of my main store, atop a nice little diamond block pedestal, selling one saddle, for 750M. Most people laughed at the price, some cursed my greed, plenty sent me private messages trying to haggle or find out if I had more. A few hours later, it sold. I let the chest sit empty until word got around, then put another saddle in it.

All told I moved ten or twelve at 500-750M apiece, an average profit of around 1000-2000% per saddle. The pricepoint proved unsustainable, but because of a rather mysterious supplier (which I will get to soon) I had a steady stream of saddles that I could sell quite briskly at a modest markup. People were starting to pour hours into extracting the things from dungeons too, hoping to cash in on market conditions they didn't realize had already evaporated. But I managed to outsell them anyway, even as they tried to compete on price, because I had something they didn't: horses.

My horse farm. I considered at one point digging out a space underneath to build a track on which to clock their run speeds. Never ended up doing it, though, because aside from Emma, no one actually cared how fast the horses were.

Horse of a Different Color

Normally, horses spawn naturally in grasslands, just like any other animal. But because we were on an old world, or because of a bug in some plugin we used, they didn't. So the admins sold horse eggs for 150M, single-use items normally unobtainable in survival that spawned a horse (90%) or donkey (10%). Horses came in seven colors and five patterns for a total of 35 different appearances, and people had strong opinions about which one they wanted. Average players could afford one or two, but with such a low chance of getting what they wanted, many found themselves disappointed or else didn't even bother.
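To put a number on "such a low chance," here is a rough sketch of the egg odds. It assumes, beyond what the post states, that a horse's color and pattern were each uniform and independent; the 90/10 horse/donkey split, the 7 × 5 appearances, and the 150M price are from the text above.

```python
from fractions import Fraction

# From the post: 90% horse / 10% donkey, 7 colors x 5 patterns, 150M per egg.
# Assumption (not stated in the post): every color/pattern pair is equally likely.
HORSE_CHANCE = Fraction(9, 10)
COLORS, PATTERNS = 7, 5
EGG_PRICE = 150  # marbles (M)


def p_specific_look() -> Fraction:
    """Probability that a single egg yields a horse with one particular look."""
    return HORSE_CHANCE / (COLORS * PATTERNS)


def expected_eggs() -> Fraction:
    """Expected number of eggs until the first success (geometric mean 1/p)."""
    return 1 / p_specific_look()


def p_at_least_one(n_eggs: int) -> float:
    """Chance that at least one of n_eggs eggs gives the wanted look."""
    return 1 - (1 - float(p_specific_look())) ** n_eggs


print(p_specific_look())                             # 9/350
print(round(float(expected_eggs() * EGG_PRICE)))     # 5833
print(round(p_at_least_one(2), 3))                   # 0.051
```

Under those assumptions a specific horse costs about 39 eggs, or roughly 5,800M, in expectation, and an average player buying two eggs has only about a 5% shot at the look they want.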

I scouted out locations close to spawn (a difficult task given how overdeveloped the land was), eventually discovering a small mountain with someone's home on top, whose owner probably wouldn't notice or mind that I'd hollowed it out overnight and stuck a couple doors on the side. I set up fenced pens in my cavern under the mountain, bought fifteen or so eggs, popped them all, and started breeding the output. Breeding took an item of negligible cost given to each horse and produced a foal that took either parent's color with close to 50-50 odds, and likewise either parent's pattern, with a very small chance of getting a random one instead. There's a cooldown of a few minutes before they can be bred again. Foals can be raised into adults instantly by spamming them with wheat. It took a few days of cross-breeding and culling, but eventually I had 70 horses, two of each combination, separated into seven pens by color, along with a handful of donkeys and mules.
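A simplified model of the breeding rule above shows why cross-breeding beat gambling on eggs. This is a sketch, not the game's code: it assumes color and pattern are inherited independently with an even split between parents, and the mutation rate of 1/20 is a hypothetical stand-in for the "very small chance" the post mentions.

```python
from fractions import Fraction

COLORS, PATTERNS = 7, 5


def trait_prob(parent_a: int, parent_b: int, target: int,
               n_options: int, mutation: Fraction) -> Fraction:
    """Chance that a foal's trait (color or pattern) equals `target`.

    With probability (1 - mutation) the foal copies one parent, 50-50;
    otherwise it gets a uniformly random value (hypothetical model).
    """
    inherited = Fraction(int(parent_a == target) + int(parent_b == target), 2)
    return (1 - mutation) * inherited + mutation / n_options


def foal_prob(mom: tuple[int, int], dad: tuple[int, int], want: tuple[int, int],
              mutation: Fraction = Fraction(1, 20)) -> Fraction:
    """Chance that a foal has exactly the wanted (color, pattern) pair."""
    p_color = trait_prob(mom[0], dad[0], want[0], COLORS, mutation)
    p_pattern = trait_prob(mom[1], dad[1], want[1], PATTERNS, mutation)
    return p_color * p_pattern


# Combine mom's color (0) with dad's pattern (3).
print(foal_prob((0, 1), (2, 3), (0, 3)))  # 2619/11200, about 0.23
```

With parents that each carry half of the target look, roughly one foal in four comes out right, compared with about one egg in 39; and once a matching pair exists, breeding it to itself almost always reproduces the look.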

I sold horses for 100M, two-thirds the price of an egg, but unlike that crapshoot, I could offer any style the buyer wanted, with no risk and no wait. (And at zero marginal cost.) Buy a saddle with the horse and get an extra discount. This proved to be a nice side business for some time.

My warehouse, in the basement of the palace. The view from the opposite corner is much the same. Some of the ladders descend to more stacks of doublechests underneath. Organizing and labeling all of this is probably one of the most autistic things I've ever done. Though I'm pretty sure everything in this post qualifies.

She Went to Jared

Or, How I Learned to Stop Worrying and Love Catastrophic Economic Meltdown

So an interesting thing happened during the whole saddle episode. As I became well-known for having the only consistently available, if rather pricey, stock of the item, a player Charlotte messaged me asking if I'd like to buy more. They were selling rather briskly now, I was starting to run low, and especially since I was planning on getting into the horse business too, I needed a steady supplier. She'd sell me six or seven at a time for 50 or 60M, saying that was all she had, but whenever I went back, she had more. A bit strange but nothing too out of the ordinary. She had a few orbiters, so I figured maybe they were working together to excavate the things. Anyway, it was good for me.

Then she opened her store in the far corner of the market. It was truly insane. Diamonds for 6M, when I sold for 20M and Emma for 23M. Emeralds for 5M, gold blocks for 3M. Wither skulls for 120M (three make a beacon, and beacons sold for thousands when they could be got at all). Enormous quantities of everything; no one could have harvested all of it if they'd spent years trying, and Charlotte was nowhere near a savvy enough player to have acquired it through the economy. And if she was, she wouldn't have been selling at those prices anyway.

I went to one of the admins and told her someone had discovered a dupe glitch. She told me this was impossible. I explained the evidence, that it was the only likely explanation, that I didn't think anyone should necessarily be punished, but it should at least be corrected and the items deleted. She continued to insist that there was no way this could possibly happen. (She was a programmer herself, and as such probably should have known better.) Wary of being tagged as an accomplice, I asked whether I would be punished for trading in these items if it turned out they were in fact duped. She assured me that no one would be banned for buying and selling in good faith.

So I went to work.

Diamonds being not the most valuable but certainly the most valued item in the game, both for their utility and their price stability, the server was littered with buy chests for them. These were mostly of the wing-and-a-prayer sort, offering prices low enough that anyone selling to them was a noob or a fool. But not so low that I couldn't sell them Charlotte's diamonds at a profit. I bought from her all I could afford, bankrupted every single person who had a buy chest at any price, then went back for more. Buy chests in the market shops, scattered on the roadsides, nestled in secluded towns no one remembered the names of, I hit them all. If you were buying diamonds at the bottom of the ocean, I would find you and take all of your money.

At the same time, I dropped my sell price in the market to 16M and did pretty good business for a few weeks. I had the advantage of one of the two best plots there were, the other belonging to Emma. (This I'd gotten via inside knowledge that Zel's to-be partner was shuttering his store and gifting the plot to a friend. I offered to swap my plot as the gift, help with the deconstruction process, and advise on pricing in the Emporium in exchange, thus getting the prized location without it ever going up for sale.) QuickShop provided a console command to show the closest shop selling an item, and these two plots, though behind hedge walls and not immediately visible, were the closest as the crow flies to the market's warp-in point. So anyone using the command--and this was most people, traipsing through the market looking for deals being a rare activity mostly limited to speculators--got directed to me or Emma for anything either of us sold.
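The advantage of those two plots came down to straight-line distance. This is not QuickShop's code, only a hypothetical model of the behavior described above: among shops selling an item, the command points to the one nearest the player as the crow flies, so hedges and winding paths don't count. The `Shop` class, plot names, and coordinates are invented for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Shop:
    owner: str                        # hypothetical plot label
    item: str
    pos: tuple[float, float, float]   # block coordinates (x, y, z)


def closest_seller(shops: list[Shop], item: str,
                   player: tuple[float, float, float]) -> Optional[Shop]:
    """Return the shop selling `item` nearest to `player` by Euclidean distance.

    Walls, hedges, and walking routes are ignored entirely.
    """
    sellers = [s for s in shops if s.item == item]
    if not sellers:
        return None
    return min(sellers, key=lambda s: math.dist(player, s.pos))


warp_in = (0.0, 64.0, 0.0)
shops = [
    Shop("hedged_corner_plot", "diamond", (-8.0, 64.0, 3.0)),
    Shop("open_plot_on_main_path", "diamond", (10.0, 64.0, 5.0)),
]
print(closest_seller(shops, "diamond", warp_in).owner)  # hedged_corner_plot
```

A plot hidden behind a hedge still wins as long as its chest is a few blocks closer to the warp-in point than any competitor's.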

This all made me a lot of money. I drove a portion of profits into bolstering my diamond and beacon reserves, bought basically any building material I thought I'd ever need in bulk, and still watched my marble balance grow. Up until the diamond bonanza, I'd been making money on a dozen different side hustles. A bit here, a bit there, doing better than most, but regardless the day-in day-out of working the market took up the majority of my time on the game. That made me rich; this is what made me wealthy.

But soon 16M became 14M, and 14M became 12M. A few people started to notice Charlotte's store, and she restocked faster than I, or anyone, could make back enough money to buy her out. Mostly though, it was clear to everyone the price of diamond was falling, even if they had no idea why. I diversified into selling enchanted diamond equipment of all types, priced just so that I could break even on the enchant and move the component diamonds at the same price I sold them for raw. A few of the buy chest owners I'd tanked tried to recover some of their money by reselling, at a loss, the diamonds I'd sold them, but they still couldn't move product faster than a trickle. Eventually even Charlotte had to cut her prices to keep selling. It was bad.

Not long after, the admin I'd spoken to before came back to me saying she had discovered the dupe glitch, Charlotte had been tempbanned and her items revoked, and it would be greatly appreciated if I could turn over any diamonds from her I still had, in exchange for the price I'd paid, so they could be destroyed. Of course I agreed. I'd made out like a bandit already, and at that point, like poor old JP Morgan during the Panic of 1907, I was more concerned with the state of the economy as a whole, and that, left uncorrected, it might render everything I now held worthless. (I did, however, neglect to mention the wither skulls.) I could not resist telling her I'd told her so.

But the damage was done. The only reason you couldn't say the economy was in freefall was because all that remained was a stain on the ground. Many players who'd harvested and traded only did so to reduce the time they spent mining for diamond, and the game's equivalent of middle-class affluence was steady access to diamond tools. At first the abundance of diamond must have seemed like a boon to people who long had to struggle to get enough to sustain their needs. But mining diamonds to sell was also the primary way most knew to make money with which to buy building materials, thus the purchasing power of the vast majority plummeted alongside its price. (Diamonds are rare in the ground and as such have a Skinner box sort of reward-feedback loop when uncovered, which makes them for many players the most enjoyable thing to farm. The things I did to make my first tens of thousands--digging clay out of riverbeds, gathering lilypads from swamps--were more lucrative but less exciting, and as such I was the only one who did them.)

In this way, diamond was the linchpin of the entire system, so when its price bottomed out, everything else went with it. Nothing you could gather and sell was worth the money you'd get for it. And even if it was, nothing you'd want to buy with that money was available for purchase. Everyone on the server was reduced to subsistence, forced to harvest everything they might need. Even those of us with real money, once our stockpiles of raw materials started to dwindle, had to dig more out of the dirt like a bunch of scrubs. The entire market was as illiquid as a Weichselian glacier.

And then Samantha came back.

Temple to the ancestors, just east of the inner city.

Gonna Buy With a Little Help From My Friends

Samantha, naturally, was horrified by the state of affairs upon her return. I mean, we all were. We thought the problem was just too massive to manage on our own, that the only thing we could do was keep playing the game and hope it worked itself out over time. Samantha didn't.

Aside from our vast reserves of raw goods, Emma and I each had several hundred thousand marbles, Victoria a bit less, Samantha a bit more. Samantha intimated to us that she intended to spend her entire fortune clearing the market of diamond and that we should join her in this endeavor. What she understood immediately, which we were initially wary of gambling on, was that while it seemed like there was more diamond out there than anyone could buy, much of it was already in plain view. No one but us was holding serious reserves, so all we had to do was shoulder the initial investment. We could swoop in and acquire all that there was to be had before anyone knew what was happening. They'd dump what they held once they saw there were buyers again, seeing it as a rare opportunity, not understanding our aim was to push the price past what we were paying. Then we'd become the primary suppliers for the server and quickly start making our money back, which we could then use to force up the prices of everything else. Anyway, the worst that could happen is we'd end up with too much of the most useful item in the game.

We set up a private group on a messaging app and invited a half dozen or so other people. Zel, Lily, people with some amount of assets who we knew worked the market strategically and had a vested interest in dragging it back from the brink. Minor players compared to the four of us, but it was good to have everyone on the same page. Diamond was still in the 6M range; we decided the new price would be 18M.

Samantha went first. She swept through the market buying out every single person with diamond to sell, then set up a buy chest at 12M and announced it to the server. People flocked to it, fighting to fill it up, and each time they did, she happily emptied it out so they could do it again. They all thought this was a windfall, a once in a lifetime shot to offload the accursed stones for more than they were worth, a boon offered by a wealthy eccentric just off a long break and looking to throw her fortune away. Soon enough, Samantha was tapped out, down to her last marble.

We waited a few days for the people she'd bought out to restock, the people who thought they missed out to put all they had up for sale in the hopes that it might move after all. At the designated time, we all moved our price to 18M and picked up where she left off, snapping up anything less than that and ferreting it away for later. It took just about all the money we had. I was down below 10k for the first time since a couple months after I started playing, and I wasn't the only one. We barely managed to pull it off, but we did it.

When it was just Samantha buying, it looked like an individual whim. Now that it was all of us, it was obvious to those paying attention that this was organized. But it didn't matter. By the time people figured out they'd been had, we had all there was to be had. They went through their stages of grief, then they started buying from us again. Just like Samantha said.

Rumors swirled about a cabal of players manipulating the market, abusing their wealth to force a change that everyone else could only go along with. We coyly denied the whole thing, a wink and a smile, "Wow, wouldn't that be something if there was, hm?" They called us the diamond cartel. We called ourselves the Minecraft Illuminati.

Once we started making diamond money again, there was nothing that we couldn't do. No other item was duped so prolifically, and nothing available in comparable quantities came near its per-item cost. We were free to set the prices we pleased and had both the resources and the hubris to enforce them over any objection. Gold and coal up fivefold, wood and obsidian up tenfold. And every time we raised a price, our daily incomes went up.

There were no restraints anymore. We could do whatever we wanted. It was our server. Everyone else was just playing on it.

View of the market district from above.

A Whole New World

Eventually enough plugins had updated for 1.7 that the admins decided it was time to move the server over. This was known as the biome update, so named because it added dozens of new environment types and radically altered worldgen. That meant that to take advantage of the new content, the server would be wiped, and everyone would start over.

In an attempt to prevent a repeat of the previous world, where a tiny clique of players achieved dizzying wealth at the expense of all the others, some measures were put in place to stymie our ambitions. An aggressive tax, scaling to multiple percent per day, on anyone holding more than a few tens of thousands of marbles. I warned this would backfire, that it would lead to pervasive hoarding and diamond as de facto alternative money, wildly distorting the market all to avoid taxation. This is exactly what happened: the price of diamond shot up relative to everything else, chronic shortages meant most players couldn't buy it at all, and those of us making big trades preferred to denominate in it rather than the official currency. I even considered standardizing a redstone contraption that would dispense a selectable number of items for every diamond inserted, but never got around to it.
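Why the tax pushed everyone into diamond is easiest to see by letting a balance sit. The post gives only "multiple percent per day" above "a few tens of thousands," and doesn't say whether the whole balance or just the excess was taxed, so the 2%/day rate, the 30,000M threshold, and the excess-only rule below are all hypothetical.

```python
# Hypothetical parameters: the real rate, threshold, and tax base aren't given.
THRESHOLD = 30_000   # marbles exempt from the tax (assumed)
DAILY_RATE = 0.02    # 2% per day on the excess over THRESHOLD (assumed)


def balance_after(days: int, balance: float) -> float:
    """Marble balance after `days` of the holdings tax, with no trading at all."""
    for _ in range(days):
        excess = max(0.0, balance - THRESHOLD)
        balance -= DAILY_RATE * excess
    return balance


print(f"{balance_after(30, 300_000):,.0f}")  # roughly 177,000 left after a month
```

Under these made-up numbers, 300,000M held as marbles loses over 120,000M in a month, while the same wealth parked in diamonds isn't touched by the tax at all.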

Meanwhile, in part to deal with the often intolerable lag of thousands of shop chests in one place, in part to reduce its grip on the daily flow of the game, the market was split up into four smaller areas. On the old server it had been one large, flat area, with square plots arranged in a grid, easy to browse and all conveniently located in one place. The new markets were pre-built structures laid out like model villages, with upstairs and downstairs, back entrances, none with the same layout, confusing to navigate and widely dispersed. Victoria came up with the idea of building our own market, reasoning that, unlike on the old server, we could build something with more utility than the official option and thus supplant it. We called it the Skymall: a pair of giant discs perched up at cloud level atop two massive pillars, shops arranged inside around their circumferences, connected by skybridge and easily accessible via nether portal just outside of spawn. Once we got established on the new server (which didn't take long) we plowed all our resources into this project for weeks. The Skymall opened to great fanfare; we sold out of storefronts within days, and it soon became the destination of choice for anyone heading to market.

But none of it had the same savor. The joy of my first run was starting from zero, knowing nothing of the server and little of the game, building my knowledge graph, learning, experimenting, getting results. The diamond cartel was our most audacious gamble, but it was still an unknown until we pulled it off. On the new server, everything felt rote. I scouted out a skeleton spawner and built an experience grinder my first day or two, got my enchanted diamond, constructed a 50-furnace autosmelter and a passive iron farm. I felt like a dreary Harappan, building things I'd already built time and again, without any inventiveness, any spark.

Once, when I wrote about all this in a different format, someone mentioned that online games don't necessarily have the same sort of stagnancy and barriers put up offline by entrenched, generational wealth. You can roll everyone back to zero and the selfsame people will get rich all over again. This is quite true. Part of it is surely that many people don't like to play games the way we do. But much of it is that you either have the time, skill, knowledge, and drive to work the angles and make your way to the top, or you don't. Making back our money wasn't just easy, it was trivial. Even on a level playing field, we raced ahead of everyone else. And then, one by one, we started to lose interest and drop off.

There's a skill curve to games like Europa Universalis where you start off bewildered by the multitudes of inscrutable systems laid out in front of you. Over time you learn to manage them, and the game shifts from something that seems to happen to you, to something you can participate in and compete with. But eventually you learn it well enough to find the cracks. With so much complexity, there are always oversights, always gamebreaking tactics, ways to grind the AI into dust. You have complete control over the game world, can effect any end you want. And then it becomes about building a story.

With Minecraft, with the economy, for us, there was no story to tell. There was only money.
